Profile

Sebastian Pape, M.Sc.

Publications

From Paper to Pixels: Transferring Handwritten Note-Taking Into Virtual Reality

While Virtual Reality and Augmented Reality used to require hardware dedicated exclusively to one or the other, newer Virtual Reality headsets like the Meta Quest 3 combine both functionalities in one device. This makes it easier to implement applications that combine VR and AR, namely Augmented Virtuality, where a mostly virtual world is augmented with parts of the real one. We explore how Augmented Virtuality can enhance VR applications, both from a theoretical perspective, using VR in driving automation as an example, and by offering a concrete prototype for note-taking in VR. This prototype, PaperVR, uses Augmented Virtuality concepts to enable handwriting on real paper inside a virtual environment. For that purpose, the front-facing cameras of a Meta Quest 3 were used, together with the MetaXR plugin, to create a tracked passthrough window that reveals the physical paper inside the virtual environment. As a point of comparison, a second prototype was developed to represent the current standard in handwritten VR note-taking, namely tablet writing. A user study was conducted to compare both prototypes with each other and with their equivalent writing methods outside of VR. It shows that paper writing is superior to tablet-based writing in VR for a synthetic task in our study. Additionally, in a practical note-taking task, it was possible to reach the same objective results inside VR as with physical writing outside a virtual environment, both regarding the number of correct answers and the answering speed per question.

@inproceedings{weiser2025,

title = {From {{Paper}} to {{Pixels}}: {{Transferring Handwritten Note-Taking Into Virtual Reality}}},

shorttitle = {From {{Paper}} to {{Pixels}}},

booktitle = {Human {{Interaction}} and {{Emerging Technologies}} ({{IHIET}} 2025)},

author = {Weiser, Paul and Pape, Sebastian and Rupp, Daniel and Flemisch, Frank},

year = 2025,

volume = {197},

issn = {27710718},

doi = {10.54941/ahfe1006712},

isbn = {978-1-964867-73-1}

}

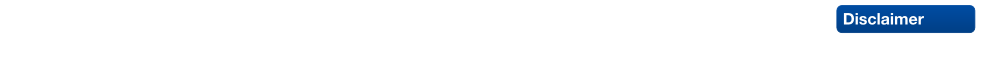

Demo: Webcam-based Hand- and Object-Tracking for a Desktop Workspace in Virtual Reality

As virtual reality overlays the user’s view, challenges arise when interaction with their physical surroundings is still needed. In a seated workspace environment, interaction with the physical surroundings can be essential for productive work. Interacting with, e.g., a physical mouse and keyboard can be difficult when no visual reference shows where they are placed. This demo shows a combination of computer vision-based marker detection with machine-learning-based hand detection to bring users’ hands and arbitrary objects into VR.

@inproceedings{10.1145/3677386.3688879,

author = {Pape, Sebastian and Beierle, Jonathan Heinrich and Kuhlen, Torsten Wolfgang and Weissker, Tim},

title = {Webcam-based Hand- and Object-Tracking for a Desktop Workspace in Virtual Reality},

year = {2024},

isbn = {9798400710889},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3677386.3688879},

doi = {10.1145/3677386.3688879},

abstract = {As virtual reality overlays the user’s view, challenges arise when interaction with their physical surroundings is still needed. In a seated workspace environment interaction with the physical surroundings can be essential to enable productive working. Interaction with e.g. physical mouse and keyboard can be difficult when no visual reference is given to where they are placed. This demo shows a combination of computer vision-based marker detection with machine-learning-based hand detection to bring users’ hands and arbitrary objects into VR.},

booktitle = {Proceedings of the 2024 ACM Symposium on Spatial User Interaction},

articleno = {64},

numpages = {2},

keywords = {Hand-Tracking, Object-Tracking, Physical Props, Virtual Reality, Webcam},

location = {Trier, Germany},

series = {SUI '24}

}

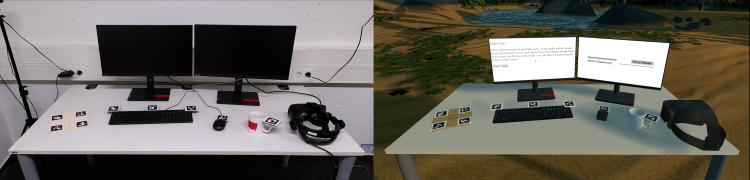

DasherVR: Evaluating a Predictive Text Entry System in Immersive Virtual Reality

Inputting text fluently in virtual reality is a topic still under active research, since many previously presented solutions have drawbacks in either speed, error rate, privacy or accessibility. To address these drawbacks, in this paper we adapted the predictive text entry system "Dasher" into an immersive virtual environment. Our evaluation with 20 participants shows that Dasher offers a good user experience with input speeds similar to other virtual text input techniques in the literature while maintaining low error rates. In combination with positive user feedback, we therefore believe that DasherVR is a promising basis for further research on accessible text input in immersive virtual reality.

@inproceedings{pape2023,

title = {{{DasherVR}}: {{Evaluating}} a {{Predictive Text Entry System}} in {{Immersive Virtual Reality}}},

booktitle = {Towards an {{Inclusive}} and {{Accessible Metaverse}} at {{CHI}}'23},

author = {Pape, Sebastian and Ackermann, Jan Jakub and Weissker, Tim and Kuhlen, Torsten W},

doi = {10.18154/RWTH-2023-05093},

year = {2023}

}

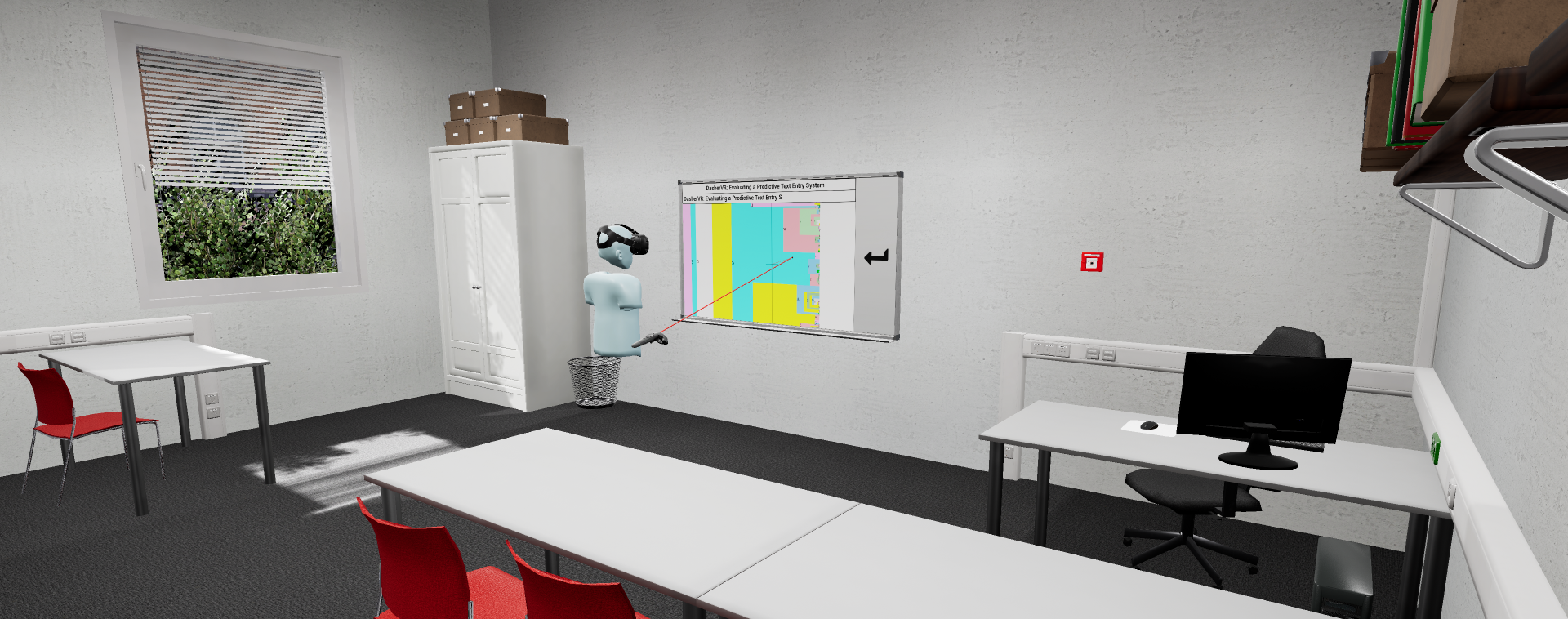

Poster: Virtual Optical Bench: A VR Learning Tool For Optical Design

The design of optical lens assemblies is a difficult process that requires lots of expertise. The teaching of this process today is done on physical optical benches, which are often too expensive for students to purchase. One way of circumventing these costs is to use software to simulate the optical bench. This work presents a virtual optical bench, which leverages real-time ray tracing in combination with VR rendering to create a teaching tool which creates a repeatable, non-hazardous, and feature-rich learning environment. The resulting application was evaluated in an expert review with 6 optical engineers.

@INPROCEEDINGS{Pape2021,

author = {Pape, Sebastian and Bellgardt, Martin and Gilbert, David and König, Georg and Kuhlen, Torsten W.},

booktitle = {2021 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW)},

title = {Virtual Optical Bench: A VR learning tool for optical design},

year = {2021},

pages = {635--636},

doi = {10.1109/VRW52623.2021.00200}

}

Calibratio - A Small, Low-Cost, Fully Automated Motion-to-Photon Measurement Device

Since the beginning of the design and implementation of virtual environments, these systems have been built to give users the best possible experience. One factor shown to be detrimental to the user experience is a high end-to-end latency of the system, here measured as motion-to-photon latency. Thus, a lot of past research has focused on measuring and minimizing this latency in virtual environments. Most existing measurement techniques either require expensive measurement hardware like an oscilloscope or mechanical components like a pendulum, or depend on manual evaluation of samples. This paper proposes a concept for an easy-to-build, low-cost device consisting of a microcontroller, a servo motor, and a photodiode to measure the motion-to-photon latency in virtual reality environments fully automatically. It is placed on or attached to the system, calibrates itself, and is controlled and monitored via a web interface. While the general concept is applicable to a variety of VR technologies, this paper focuses on the context of CAVE-like systems.

@InProceedings{Pape2020a,

author = {Sebastian Pape and Marcel Kr\"{u}ger and Jan M\"{u}ller and Torsten W. Kuhlen},

title = {{Calibratio - A Small, Low-Cost, Fully Automated Motion-to-Photon Measurement Device}},

booktitle = {10th Workshop on Software Engineering and Architectures for Realtime Interactive Systems (SEARIS)},

year = {2020},

month = {March}

}