Profile

Dipl.-Medieninform. Sascha Gebhardt

Publications

Interactive Visual Analysis of Multi-dimensional Metamodels

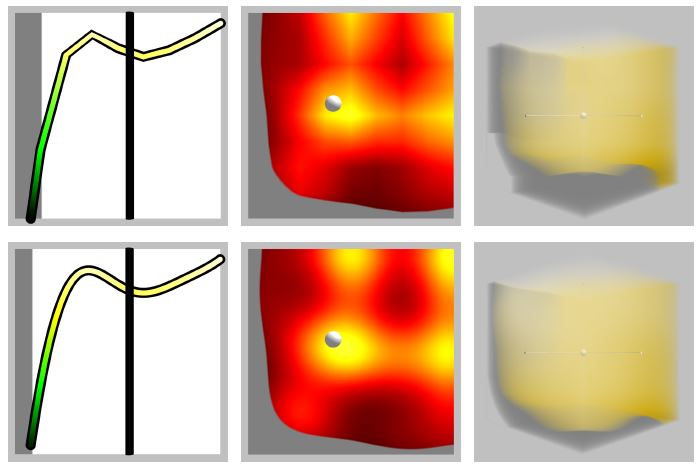

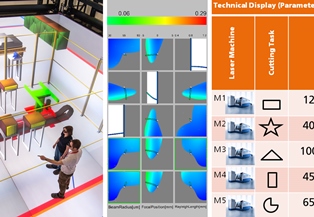

In the simulation of manufacturing processes, complex models are used to examine process properties. To save computation time, so-called metamodels serve as surrogates for the original models. Metamodels are inherently difficult to interpret because they resemble multi-dimensional functions f: ℝ^n → ℝ^m that map configuration parameters to production criteria. We propose a multi-view visualization application called memoSlice that combines several visualization techniques specially adapted to the analysis of metamodels. Our application enables users not only to improve their understanding of a metamodel but also to easily optimize processes. We pay special attention to providing a high level of interactivity by realizing specialized parallelization techniques that give timely feedback on user interactions. In this paper, we outline these parallelization techniques and demonstrate their effectiveness by means of micro- and high-level measurements.

@inproceedings {pgv.20181098,

booktitle = {Eurographics Symposium on Parallel Graphics and Visualization},

editor = {Hank Childs and Fernando Cucchietti},

title = {{Interactive Visual Analysis of Multi-dimensional Metamodels}},

author = {Gebhardt, Sascha and Pick, Sebastian and Hentschel, Bernd and Kuhlen, Torsten Wolfgang},

year = {2018},

publisher = {The Eurographics Association},

ISSN = {1727-348X},

ISBN = {978-3-03868-054-3},

DOI = {10.2312/pgv.20181098}

}
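To make the idea of a metamodel as a black-box function f: ℝ^n → ℝ^m concrete, the following Python sketch evaluates such a function on HyperSlice-style 2D slices (one slice per pair of input dimensions, all passing through a common focal point) and distributes the slices across a thread pool. It is a minimal illustration under stated assumptions, not memoSlice's implementation: the function names, the grid resolution, and the one-task-per-slice split are hypothetical choices.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import combinations
from typing import Callable, Dict, List, Sequence, Tuple

# Treat the metamodel as a black box f: R^n -> R^m returning a list of m outputs.
Metamodel = Callable[[Sequence[float]], List[float]]
Grid = List[List[List[float]]]  # res x res cells, each holding m outputs


def slice_grid(f: Metamodel, focus: Sequence[float], dims: Tuple[int, int],
               bounds: Sequence[Tuple[float, float]], res: int) -> Grid:
    """Evaluate f on a res x res grid spanned by input dimensions dims,
    holding every other input fixed at the focal point (res >= 2)."""
    i, j = dims
    (lo_i, hi_i), (lo_j, hi_j) = bounds[i], bounds[j]
    grid: Grid = []
    for r in range(res):
        row = []
        for c in range(res):
            x = list(focus)
            x[i] = lo_i + (hi_i - lo_i) * c / (res - 1)
            x[j] = lo_j + (hi_j - lo_j) * r / (res - 1)
            row.append(f(x))
        grid.append(row)
    return grid


def hyperslice(f: Metamodel, focus: Sequence[float],
               bounds: Sequence[Tuple[float, float]], res: int = 16,
               workers: int = 4) -> Dict[Tuple[int, int], Grid]:
    """Compute one 2D slice per pair of input dimensions, one pool task per slice."""
    pairs = list(combinations(range(len(focus)), 2))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        grids = pool.map(lambda p: slice_grid(f, focus, p, bounds, res), pairs)
        return dict(zip(pairs, grids))
```

Because of CPython's global interpreter lock, threads only pay off here if the metamodel evaluation releases the GIL (for example, in native code); for pure-Python metamodels a process pool would be the safer choice.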

Fluid Sketching — Immersive Sketching Based on Fluid Flow

Fluid artwork refers to works of art based on the aesthetics of fluid motion, such as smoke photography, ink injection into water, and paper marbling. Inspired by such types of art, we created Fluid Sketching as a novel medium for creating 3D fluid artwork in immersive virtual environments. It allows artists to draw 3D fluid-like sketches and manipulate them via six-degrees-of-freedom input devices. Different sets of brush strokes are available, each varying different characteristics of the fluid. Because of the nature of fluids, the diffusion of the drawn fluid sketch is animated, and artists can alter the fluid properties and stop the diffusion process whenever they are satisfied with the current result. Furthermore, they can shape the drawn sketch by directly interacting with it, either with their hand or by blowing into the fluid. We rely on particle advection via curl-noise as a fast procedural method for animating the fluid flow.


@InProceedings{Eroglu2018,

author = {Eroglu, Sevinc and Gebhardt, Sascha and Schmitz, Patric and Rausch, Dominik and Kuhlen, Torsten Wolfgang},

title = {{Fluid Sketching — Immersive Sketching Based on Fluid Flow}},

booktitle = {Proceedings of IEEE Virtual Reality Conference 2018},

year = {2018}

}
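The abstract names particle advection via curl-noise as the animation method. As an illustration of the general technique (not the authors' code), the sketch below works in 2D: the velocity is the curl of a scalar potential ψ, v = (∂ψ/∂y, −∂ψ/∂x), which is divergence-free by construction, so particles swirl without collapsing into sinks. The potential is a hypothetical sum of sines standing in for a real noise function such as Perlin noise; derivatives use central differences, and time stepping is explicit Euler. In 3D, the same idea uses the curl of a vector-valued potential.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def potential(x: float, y: float, t: float) -> float:
    """Hypothetical smooth scalar field standing in for animated noise (e.g. Perlin)."""
    return (math.sin(1.3 * x + 0.7 * t) * math.cos(0.9 * y)
            + 0.5 * math.sin(2.1 * y - 0.4 * t))


def curl_velocity(x: float, y: float, t: float, eps: float = 1e-4) -> Point:
    """2D curl of the potential, v = (dpsi/dy, -dpsi/dx), via central differences.
    The resulting field is divergence-free."""
    dpsi_dx = (potential(x + eps, y, t) - potential(x - eps, y, t)) / (2 * eps)
    dpsi_dy = (potential(x, y + eps, t) - potential(x, y - eps, t)) / (2 * eps)
    return dpsi_dy, -dpsi_dx


def advect(particles: List[Point], t: float, dt: float) -> List[Point]:
    """Move every particle one explicit Euler step along the curl-noise field."""
    moved = []
    for x, y in particles:
        vx, vy = curl_velocity(x, y, t)
        moved.append((x + dt * vx, y + dt * vy))
    return moved
```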

Measuring Insight into Multi-dimensional Data from a Combination of a Scatterplot Matrix and a HyperSlice Visualization

Understanding multi-dimensional data, and in particular multi-dimensional dependencies, is hard. Information visualization can help to understand this type of data. Still, how users gain insights from such visualizations is not well understood. Both the visualizations and the users play a role in understanding the data. In a case study using both a scatterplot matrix and a HyperSlice with six-dimensional data, we asked 16 participants to think aloud and measured insights while they analyzed the data. The number of insights was strongly correlated with spatial abilities. Interestingly, all users were able to complete an optimization task regardless of their self-reported understanding of the data.

@Inbook{CaleroValdez2017,

author="Calero Valdez, Andr{\'e}

and Gebhardt, Sascha

and Kuhlen, Torsten W.

and Ziefle, Martina",

editor="Duffy, Vincent G.",

title="Measuring Insight into Multi-dimensional Data from a Combination of a Scatterplot Matrix and a HyperSlice Visualization",

bookTitle="Digital Human Modeling. Applications in Health, Safety, Ergonomics, and Risk Management: Health and Safety: 8th International Conference, DHM 2017, Held as Part of HCI International 2017, Vancouver, BC, Canada, July 9-14, 2017, Proceedings, Part II",

year="2017",

publisher="Springer International Publishing",

address="Cham",

pages="225--236",

isbn="978-3-319-58466-9",

doi="10.1007/978-3-319-58466-9_21",

url="http://dx.doi.org/10.1007/978-3-319-58466-9_21"

}
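The abstract reports that the number of insights was strongly correlated with spatial abilities but does not name the statistic used. Assuming a Pearson correlation, the coefficient is r = cov(x, y) / (σ_x σ_y); a minimal standard-library sketch follows. The function name is hypothetical, and any inputs you pass are your own data, not the study's; in practice, `scipy.stats.pearsonr` computes the same coefficient together with a p-value.

```python
import math
from typing import Sequence


def pearson_r(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equally long samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length >= 2")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    # Covariance and standard deviations share the 1/n factor, so it cancels out.
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)
```

A sample with zero variance makes r undefined; the sketch then raises `ZeroDivisionError` instead of returning a value.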

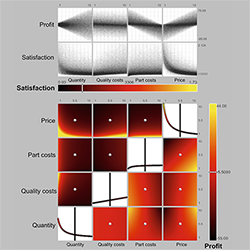

Gistualizer: An Immersive Glyph for Multidimensional Datapoints

Data from diverse workflows is often too complex for adequate analysis without visualization. One such kind of data is multi-dimensional datasets, which can be visualized via a wide array of techniques. For instance, glyphs can be used to visualize individual datapoints. However, glyphs need to be actively looked at to be comprehended. This work explores a novel approach to visualizing a single datapoint, with the intention of increasing the user’s awareness of it while they are looking at something else. The basic concept is to represent this point by a scene that surrounds the user in an immersive virtual environment. This idea is based on the observation that humans can extract low-detail information, the so-called gist, from a scene nearly instantly (in 100 ms or less). We aim to provide a first step towards answering whether enough information can be encoded in the gist of a scene to represent a point in multi-dimensional space, and whether this information helps the user understand this space.

@inproceedings{Bellgardt2017,

author = {Bellgardt, Martin and Gebhardt, Sascha and Hentschel, Bernd and Kuhlen, Torsten W.},

booktitle = {Workshop on Immersive Analytics},

title = {{Gistualizer: An Immersive Glyph for Multidimensional Datapoints}},

year = {2017}

}
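The paper asks whether a multi-dimensional point can be encoded in the gist of a surrounding scene. The sketch below covers only the data side of such an encoding, under loudly stated assumptions: each dimension is min-max normalized and quantized into a few coarse levels (a gist carries little detail), and each level would then drive one scene attribute. The attribute names, the function name, and the choice of three levels are hypothetical and not taken from the paper.

```python
from typing import Dict, Sequence, Tuple


def encode_point(point: Sequence[float], bounds: Sequence[Tuple[float, float]],
                 attributes: Sequence[str], levels: int = 3) -> Dict[str, int]:
    """Map each dimension of a data point to a coarse level (0 .. levels-1)
    of a hypothetical scene attribute."""
    if not (len(point) == len(bounds) == len(attributes)):
        raise ValueError("point, bounds, and attributes must have equal length")
    scene: Dict[str, int] = {}
    for value, (lo, hi), name in zip(point, bounds, attributes):
        t = (value - lo) / (hi - lo)   # normalize to [0, 1]
        t = min(max(t, 0.0), 1.0)      # clamp values outside the bounds
        scene[name] = min(int(t * levels), levels - 1)
    return scene
```

For example, `encode_point([2.0, 5.0, 10.0], [(0, 10)] * 3, ["time_of_day", "tree_density", "cloud_cover"])` returns `{"time_of_day": 0, "tree_density": 1, "cloud_cover": 2}`.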

Virtual Production Intelligence

The research area Virtual Production Intelligence (VPI) focuses on the integrated support of collaborative planning processes for production systems and products. The research concentrates on processes for information processing in the design domains Factory and Machine. These processes provide the integration and interactive analysis of emerging, mostly heterogeneous planning information. The demonstrators (flapAssist, memoSlice, and the VPI platform), which are information systems, serve to validate the scientific approaches and aim to realize continuous and consistent information management in terms of the Digital Factory. Central challenges are semantic information integration (e.g., by means of metamodelling), the subsequent evaluation, and the visualization of planning information (e.g., by means of Visual Analytics and Virtual Reality). All scientific and technical work is done by an interdisciplinary team of engineers, computer scientists, and physicists.

@BOOK{Brecher:683508,

key = {683508},

editor = {Brecher, Christian and Özdemir, Denis},

title = {{I}ntegrative {P}roduction {T}echnology : {T}heory and {A}pplications},

address = {Cham},

publisher = {Springer International Publishing},

reportid = {RWTH-2017-01369},

isbn = {978-3-319-47451-9},

pages = {XXXIX, 1100 pages : illustrations},

year = {2017},

cin = {417310 / 080025},

cid = {$I:(DE-82)417310_20140620$ / $I:(DE-82)080025_20140620$},

typ = {PUB:(DE-HGF)3},

doi = {10.1007/978-3-319-47452-6},

url = {http://publications.rwth-aachen.de/record/683508},

}

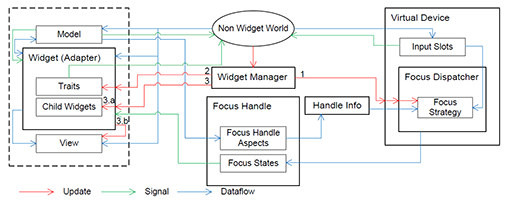

Vista Widgets: A Framework for Designing 3D User Interfaces from Reusable Interaction Building Blocks

Virtual Reality (VR) has been an active field of research for several decades, with 3D interaction and 3D User Interfaces (UIs) as important sub-disciplines. However, developing 3D interaction techniques, and in particular combining several of them into complex and usable 3D UIs, remains challenging, especially in a VR context. In addition, compared to traditional 2D UIs, there is currently only limited reusable software for implementing such techniques. To overcome this issue, we present ViSTA Widgets, a software framework for creating 3D UIs for immersive virtual environments. It extends the ViSTA VR framework by providing functionality to create multi-device, multi-focus-strategy interaction building blocks and means to easily combine them into complex 3D UIs. This is realized by introducing a device abstraction layer along with sophisticated focus management and functionality to create novel 3D interaction techniques and 3D widgets. We present the framework and illustrate its effectiveness with code and application examples accompanied by performance evaluations.

@InProceedings{Gebhardt2016,

Title = {{Vista Widgets: A Framework for Designing 3D User Interfaces from Reusable Interaction Building Blocks}},

Author = {Gebhardt, Sascha and Petersen-Krau, Till and Pick, Sebastian and Rausch, Dominik and Nowke, Christian and Knott, Thomas and Schmitz, Patric and Zielasko, Daniel and Hentschel, Bernd and Kuhlen, Torsten W.},

Booktitle = {Proceedings of the 22nd ACM Conference on Virtual Reality Software and Technology},

Year = {2016},

Address = {New York, NY, USA},

Pages = {251--260},

Publisher = {ACM},

Series = {VRST '16},

Acmid = {2993382},

Doi = {10.1145/2993369.2993382},

ISBN = {978-1-4503-4491-3},

Keywords = {3D interaction, 3D user interfaces, framework, multi-device, virtual reality},

Location = {Munich, Germany},

Numpages = {10},

Url = {http://doi.acm.org/10.1145/2993369.2993382}

}
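The abstract names three ingredients: a device abstraction layer, focus management, and reusable widgets. The Python sketch below illustrates how these could fit together; it is not the ViSTA Widgets API, and every class name is hypothetical. Devices are reduced to a common ray-plus-button event, widgets only answer a hit test, and a pluggable focus strategy (here: nearest ray hit) decides which widget receives the event.

```python
import math
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class PointerEvent:
    """Device-independent input: a pointing ray plus a button state. Adapters for
    a mouse, a tracked flystick, or a hand tracker would each emit this type."""
    origin: Vec3
    direction: Vec3  # assumed to be normalized
    pressed: bool


class Widget(ABC):
    @abstractmethod
    def hit_distance(self, ev: PointerEvent) -> Optional[float]:
        """Distance along the ray to the widget, or None if the ray misses."""

    def on_event(self, ev: PointerEvent) -> None:
        """React to an event routed to this widget while it has focus."""


@dataclass
class SphereButton(Widget):
    center: Vec3
    radius: float
    clicks: int = 0

    def hit_distance(self, ev: PointerEvent) -> Optional[float]:
        # Ray-sphere test: solve |o + t*d - c|^2 = r^2 for the nearest t >= 0.
        o = [ev.origin[k] - self.center[k] for k in range(3)]
        b = sum(o[k] * ev.direction[k] for k in range(3))
        c = sum(v * v for v in o) - self.radius ** 2
        disc = b * b - c
        if disc < 0:
            return None
        root = math.sqrt(disc)
        for t in (-b - root, -b + root):
            if t >= 0:
                return t
        return None

    def on_event(self, ev: PointerEvent) -> None:
        if ev.pressed:
            self.clicks += 1


class NearestHitFocus:
    """One possible focus strategy: the closest widget hit by the ray gets focus."""

    def __init__(self, widgets: List[Widget]) -> None:
        self.widgets = list(widgets)
        self.focused: Optional[Widget] = None

    def dispatch(self, ev: PointerEvent) -> Optional[Widget]:
        hits = []
        for w in self.widgets:
            d = w.hit_distance(ev)
            if d is not None:
                hits.append((d, w))
        self.focused = min(hits, key=lambda h: h[0])[1] if hits else None
        if self.focused is not None:
            self.focused.on_event(ev)
        return self.focused
```

Swapping the focus strategy class, for example for a gaze- or proximity-based one, would not require touching the widgets, which is the kind of reuse the framework aims for.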

An Integrated Approach for the Knowledge Discovery in Computer Simulation Models with a Multi-dimensional Parameter Space

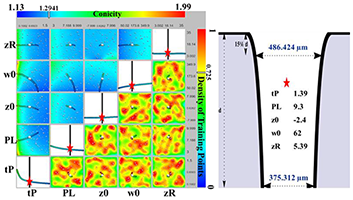

In production industries, parameter identification, sensitivity analysis, and multi-dimensional visualization are vital steps in the planning process for achieving optimal designs and gaining valuable information. Sensitivity analysis and visualization can help identify the most influential parameters, quantify their contribution to the model output, reduce model complexity, and enhance the understanding of model behavior. Typically, this requires a large number of simulations, which can be both very expensive and time-consuming when the simulation models are numerically complex and the number of input parameters increases. This work consists of three main parts. The first part substitutes the numerical, physical model with an accurate surrogate model, the so-called metamodel. The second part comprises a multi-dimensional visualization approach for the visual exploration of metamodels. In the third part, the metamodel is used to provide two global sensitivity measures: (i) the Elementary Effect for screening the parameters, and (ii) the variance decomposition method for calculating the Sobol indices that quantify both the main and interaction effects. The proposed approach is illustrated with an industrial application whose goal is to optimize a drilling process using a Gaussian laser beam.

@article{:/content/aip/proceeding/aipcp/10.1063/1.4952148,

author = "Khawli, Toufik Al and Gebhardt, Sascha and Eppelt, Urs and Hermanns, Torsten and Kuhlen, Torsten and Schulz, Wolfgang",

title = "An integrated approach for the knowledge discovery in computer simulation models with a multi-dimensional parameter space",

journal = "AIP Conference Proceedings",

year = "2016",

volume = "1738",

number = "1",

eid = 370003,

pages = "",

url = "http://scitation.aip.org/content/aip/proceeding/aipcp/10.1063/1.4952148",

doi = "10.1063/1.4952148"

}
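The two sensitivity measures named in the abstract can be sketched with plain Monte Carlo sampling over the unit hypercube. This is a generic illustration, not the authors' implementation: any cheap model (such as a trained metamodel) can be passed in, `elementary_effects` uses a simplified one-at-a-time design from random base points rather than full Morris trajectories, and `sobol_first_order` uses a Saltelli-style pick-freeze estimator V_i ≈ mean(f(B) · (f(A_B^i) − f(A))). The estimator yields only first-order (main) effects; quantifying interaction effects would need an additional total-effect estimator. Both measures need many evaluations, which is affordable precisely because they run on the metamodel instead of the simulation.

```python
import random
from statistics import fmean, pvariance
from typing import Callable, List, Sequence

Model = Callable[[Sequence[float]], float]


def elementary_effects(f: Model, dim: int, r: int = 50, delta: float = 0.1,
                       seed: int = 0) -> List[float]:
    """Screening on [0, 1]^dim: from r random base points, step each input by
    delta one at a time; return mu* (mean absolute elementary effect) per input."""
    rng = random.Random(seed)
    effects: List[List[float]] = [[] for _ in range(dim)]
    for _ in range(r):
        x = [rng.uniform(0.0, 1.0 - delta) for _ in range(dim)]
        fx = f(x)
        for i in range(dim):
            stepped = list(x)
            stepped[i] += delta
            effects[i].append((f(stepped) - fx) / delta)
    return [fmean([abs(e) for e in ee]) for ee in effects]


def sobol_first_order(f: Model, dim: int, n: int = 4096,
                      seed: int = 0) -> List[float]:
    """First-order Sobol indices S_i for inputs uniform on [0, 1]^dim."""
    rng = random.Random(seed)
    A = [[rng.random() for _ in range(dim)] for _ in range(n)]
    B = [[rng.random() for _ in range(dim)] for _ in range(n)]
    fA = [f(a) for a in A]
    fB = [f(b) for b in B]
    var_y = pvariance(fA + fB)
    indices = []
    for i in range(dim):
        # A_B^i: each row of A with column i replaced by the same row of B.
        fABi = [f(a[:i] + [b[i]] + a[i + 1:]) for a, b in zip(A, B)]
        v_i = fmean([yb * (yab - ya) for yb, yab, ya in zip(fB, fABi, fA)])
        indices.append(v_i / var_y)
    return indices
```

For a linear toy model such as `lambda x: 4.0 * x[0] + x[1]`, the indices should approach 16/17 and 1/17, while an unused third input gets an elementary effect and a Sobol index of exactly zero.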

Poster: Scalable Metadata In- and Output for Multi-platform Data Annotation Applications

Metadata in- and output are important steps within the data annotation process. However, selecting techniques that effectively facilitate these steps is non-trivial, especially for applications that have to run on multiple virtual reality platforms. Not all techniques are applicable to or available on every system, so workflows have to be adapted on a per-system basis. Here, we describe a metadata handling system based on Android's Intent system that adapts workflows automatically and thereby makes manual adaptation unnecessary.
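Android resolves an implicit Intent by matching its action (plus category and data) against the intent filters that installed components declare, so the sender never names the concrete handler. The sketch below mimics that late binding for metadata in- and output under simplifying assumptions; it is not Android's API, and the action strings, capability names, and classes are hypothetical. A workflow requests an action, and which input technique runs is decided per platform from the capabilities it reports.

```python
from dataclasses import dataclass, field
from typing import Callable, FrozenSet, List, Optional


@dataclass
class Handler:
    name: str
    action: str                 # what the handler does, e.g. "metadata.input.text"
    requires: FrozenSet[str]    # platform capabilities the handler needs
    run: Callable[[str], str]   # performs the step for a prompt, returns the metadata


@dataclass
class Resolver:
    """Handlers are registered in priority order; resolution picks the first one
    whose requirements the current platform satisfies."""
    handlers: List[Handler] = field(default_factory=list)

    def register(self, handler: Handler) -> None:
        self.handlers.append(handler)

    def resolve(self, action: str, capabilities: FrozenSet[str]) -> Optional[Handler]:
        for h in self.handlers:
            if h.action == action and h.requires <= capabilities:
                return h
        return None  # no technique available on this platform
```

With a speech handler registered before a keyboard handler, a CAVE that reports a microphone would resolve to speech input, while a desktop that only reports a keyboard would fall through to typing, without any per-system change to the workflow itself.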

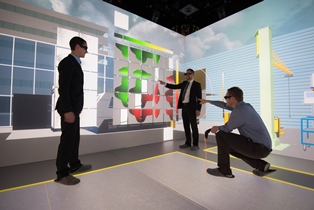

Poster: flapAssist: How the Integration of VR and Visualization Tools Fosters the Factory Planning Process

Virtual Reality (VR) systems are of growing importance for decision support in the context of the digital factory, especially in factory layout planning. While current solutions focus either on virtual walkthroughs or on the visualization of more abstract information, no solution that provides both currently exists. To close this gap, we present a holistic VR application called Factory Layout Planning Assistant (flapAssist). It is meant to serve as a platform for planning factory layouts while also providing a wide range of analysis features. Because it scales from desktops to CAVEs and provides a link to a central integration platform, flapAssist integrates well into established factory planning workflows.

Ein Konzept zur Integration von Virtual Reality Anwendungen zur Verbesserung des Informationsaustauschs im Fabrikplanungsprozess - A Concept for the Integration of Virtual Reality Applications to Improve the Information Exchange within the Factory Planning Process

Factory planning is a highly heterogeneous process that involves various expert groups at the same time. In this context, communication between different expert groups poses a major challenge. One reason for this lies in the differing domain knowledge of individual groups. However, since decisions made within one domain usually affect others, it is essential to make these domain interactions visible to all involved experts in order to improve the overall planning process. In this paper, we present a concept that facilitates the integration of two separate virtual reality and visualization analysis tools for different application domains of the planning process. The concept was developed in the context of Virtual Production Intelligence and aims to make domain interactions visible so that the aforementioned challenges can be mitigated.

@Article{Pick2015,

Title = {{Ein Konzept zur Integration von Virtual Reality Anwendungen zur Verbesserung des Informationsaustauschs im Fabrikplanungsprozess}},

Author = {Pick, S. and Gebhardt, S. and Hentschel, B. and Kuhlen, T. W. and Reinhard, R. and Büscher, C. and Al Khawli, T. and Eppelt, U. and Voet, H. and Utsch, J.},

Journal = {Tagungsband 12. Paderborner Workshop Augmented \& Virtual Reality in der Produktentstehung},

Year = {2015},

Pages = {139--152}

}

Ein Ansatz zur Softwaretechnischen Integration von Virtual Reality Anwendungen am Beispiel des Fabrikplanungsprozesses - An Approach for the Software-Technical Integration of Virtual Reality Applications by the Example of the Factory Planning Process

The integration of independent applications is a complex task from a software engineering perspective. Nonetheless, it entails significant benefits, especially in the context of Virtual Reality (VR) supported factory planning, e.g., communicating interdependencies between different domains. To emphasize this aspect, we integrated two independent VR and visualization applications into a holistic planning solution. Special focus was put on parallelization and interaction aspects, while also considering more general requirements of such an integration process. In summary, we present technical solutions for effectively integrating several VR applications into a holistic solution, exemplified by the integration of two factory planning applications with a special focus on parallelism and interaction. The effectiveness of the approach is demonstrated by performance measurements.

@Article{Gebhardt2015,

Title = {{Ein Ansatz zur Softwaretechnischen Integration von Virtual Reality Anwendungen am Beispiel des Fabrikplanungsprozesses}},

Author = {Gebhardt, S. and Pick, S. and Hentschel, B. and Kuhlen, T. W. and Reinhard, R. and Büscher, C.},

Journal = {Tagungsband 12. Paderborner Workshop Augmented \& Virtual Reality in der Produktentstehung},

Year = {2015},

Pages = {153--166}

}
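The abstract emphasizes parallelization when coupling two VR and visualization applications. One common pattern, shown here as a hedged Python sketch rather than the authors' design, is to receive messages from the partner application on a background thread and hand them to the render loop through a thread-safe queue, so the frame loop only ever performs non-blocking reads. The `source` iterable is a stand-in for a real network connection, and the class and method names are hypothetical.

```python
import queue
import threading
from typing import Any, Iterable, List


class AsyncChannel:
    """Decouples message reception from the render loop via a thread-safe queue."""

    def __init__(self) -> None:
        self._inbox: "queue.Queue[Any]" = queue.Queue()

    def _receive_loop(self, source: Iterable[Any]) -> None:
        # Runs on a worker thread; `source` stands in for a network connection.
        for message in source:
            self._inbox.put(message)

    def start(self, source: Iterable[Any]) -> threading.Thread:
        worker = threading.Thread(target=self._receive_loop, args=(source,), daemon=True)
        worker.start()
        return worker

    def drain(self) -> List[Any]:
        """Called once per frame on the render thread; returns at once, never blocks."""
        messages = []
        while True:
            try:
                messages.append(self._inbox.get_nowait())
            except queue.Empty:
                return messages
```

Keeping all blocking I/O off the render thread is what preserves a stable frame rate in the immersive application, regardless of how slowly the partner application responds.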

Advanced Virtual Reality and Visualization Support for Factory Layout Planning

Recently, more and more Virtual Reality (VR) and visualization solutions to support the factory layout planning process have been presented. On the one hand, VR enables planners to create cost-effective virtual prototypes and to perform virtual walkthroughs, e.g., to verify proposed layouts. On the other hand, visualization helps to gain insight into simulation results that, e.g., describe the various interdependencies between machines, such as material flows. In order to create truly effective tools based on VR and visualization, the right techniques have to be chosen and adapted to the specific problem. However, the solutions published so far usually do not exploit these technologies to their full potential.

To address this situation, we present a VR-based planning assistant that offers advanced visualization functionality that furthers the understanding of planning-relevant parameters, while also relying on established techniques. In order to realize a useful approach, the assistant fulfills three central requirements:

- A smooth integration of the assistant into existing workflows is essential so as not to disrupt them. Consequently, existing tools need to be properly integrated, and a mechanism for exchanging data with these tools has to be provided.

- Visualization is the main means of facilitating insight. Instead of only displaying factory models, advanced techniques to visualize more abstract quantities, like material flows or process chains, have to be provided.

- VR systems vary in the degree of immersion they offer, ranging from non-immersive desktop systems to fully immersive Cave Automatic Virtual Environment (CAVE) systems. Scalability across these systems allows the assistant to be used on high-end installations as well as on cost-effective solutions. However, ensuring good scalability requires a flexible system abstraction and a unified interaction concept.

The basis for our planning assistant is an immersive VR (IVR) system in the form of a CAVE. Our solution allows performing virtual walkthroughs and offers additional visualization techniques for planning-relevant data.
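The second requirement above calls for visualizing abstract quantities such as material flows. A common encoding, assumed here rather than taken from the assistant, draws an arrow between each pair of machines whose width grows with the transported quantity. The Python sketch aggregates transport records into a from-to table and maps the totals linearly onto a width range; the function names, machine names, and width limits are illustrative.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Pair = Tuple[str, str]


def from_to_matrix(transports: Iterable[Tuple[str, str, float]]) -> Dict[Pair, float]:
    """Sum transported quantities per (source, target) machine pair."""
    flows: Dict[Pair, float] = defaultdict(float)
    for src, dst, qty in transports:
        flows[(src, dst)] += qty
    return dict(flows)


def line_widths(flows: Dict[Pair, float], min_w: float = 1.0,
                max_w: float = 10.0) -> Dict[Pair, float]:
    """Map flow totals linearly onto [min_w, max_w] for drawing flow arrows."""
    if not flows:
        return {}
    lo, hi = min(flows.values()), max(flows.values())
    if hi == lo:  # all flows are equal: draw them with the same width
        return {pair: max_w for pair in flows}
    return {pair: min_w + (v - lo) / (hi - lo) * (max_w - min_w)
            for pair, v in flows.items()}
```

A linear mapping keeps relative flow sizes readable; for very skewed flow distributions, a logarithmic mapping might be preferable.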

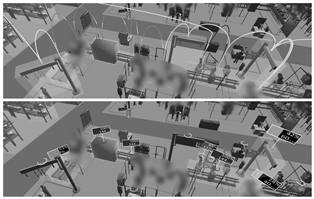

A 3D Collaborative Virtual Environment to Integrate Immersive Virtual Reality into Factory Planning Processes

In the recent past, efforts have been made to adopt immersive virtual reality (IVR) systems as a means for design reviews in factory layout planning. While several solutions for this scenario have been developed, their integration into existing planning workflows has not been discussed yet. From our own experience of developing such a solution, we conclude that the use of IVR systems, like CAVEs, is rather disruptive to existing workflows. One major reason for this is that IVR systems are not available everywhere due to their high costs and large physical footprint. As a consequence, planners have to travel to sites offering such systems, which is especially prohibitive because planners are usually geographically dispersed. In this paper, we present a concept for integrating IVR systems into the factory planning process by means of a 3D collaborative virtual environment (3DCVE) without disrupting the underlying planning workflow. The goal is to combine non-immersive and IVR systems to facilitate collaborative walkthrough sessions. However, this scenario poses unique challenges to interactive collaborative work that, to the best of our knowledge, have not been addressed so far. In this regard, we discuss approaches to viewpoint sharing, telepointing, and annotation support that are geared towards distributed heterogeneous 3DCVEs.
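Viewpoint sharing is one of the approaches the paper discusses. The abstract does not say how a shared viewpoint is presented; one plausible option, assumed here, is to animate the local camera smoothly to a remote participant's pose instead of teleporting, interpolating the position linearly and the orientation with spherical linear interpolation (slerp) of unit quaternions in (w, x, y, z) order. The function names are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # unit quaternion (w, x, y, z)
Pose = Tuple[Vec3, Quat]


def lerp(p0: Vec3, p1: Vec3, t: float) -> Vec3:
    return tuple(a + t * (b - a) for a, b in zip(p0, p1))


def slerp(q0: Quat, q1: Quat, t: float) -> Quat:
    """Spherical linear interpolation between two unit quaternions."""
    dot = sum(a * b for a, b in zip(q0, q1))
    if dot < 0.0:  # q and -q encode the same rotation; take the shorter arc
        q1 = tuple(-c for c in q1)
        dot = -dot
    if dot > 0.9995:  # nearly parallel: normalized lerp avoids dividing by ~0
        q = [a + t * (b - a) for a, b in zip(q0, q1)]
        norm = math.sqrt(sum(c * c for c in q))
        return tuple(c / norm for c in q)
    theta = math.acos(dot)
    s0 = math.sin((1.0 - t) * theta) / math.sin(theta)
    s1 = math.sin(t * theta) / math.sin(theta)
    return tuple(s0 * a + s1 * b for a, b in zip(q0, q1))


def blend_to_shared_viewpoint(local: Pose, remote: Pose, t: float) -> Pose:
    """Camera pose at animation progress t in [0, 1] (0 = local, 1 = remote)."""
    (p0, q0), (p1, q1) = local, remote
    return lerp(p0, p1, t), slerp(q0, q1, t)
```

Animating rather than jumping helps participants keep their spatial orientation, which matters in particular for users in an IVR system.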

An Evaluation of a Smart-Phone-Based Menu System for Immersive Virtual Environments

System control is a crucial task for many virtual reality applications and can be realized in a broad variety of ways, the most common being graphical menus. These are often implemented as part of the virtual environment but can also be displayed on mobile devices. Many systems and studies have been published on using mobile devices such as personal digital assistants (PDAs) to realize such menu systems. However, most of these systems were proposed long before smartphones existed and became everyday companions for many people. Thus, it is worthwhile to evaluate the applicability of modern smartphones as carriers of menu systems for immersive virtual environments. To do so, we implemented a platform-independent menu system for smartphones and evaluated it in two ways. First, we performed an expert review to identify potential design flaws and to test the applicability of the approach for demonstrations of VR applications from a demonstrator's point of view. Second, we conducted a user study with 21 participants to test user acceptance of the menu system. The results of the two studies were contradictory: while experts appreciated the system very much, user acceptance was lower than expected. From these results, we could draw conclusions on how smartphones should be used to realize system control in virtual environments, and we could identify starting points for future research on the topic.

Integration of VR and Visualization Tools to Foster the Factory Planning Process

Recently, virtual reality (VR) and visualization have been increasingly employed to facilitate various tasks in factory planning processes. One major challenge in this context lies in the exchange of information between expert groups concerned with distinct planning tasks, which is needed to make planners aware of interdependencies. For example, changes to the configuration of individual machines can affect the overall production performance and vice versa. To this end, we developed VR- and visualization-based planning tools for two distinct planning tasks and present an integration concept that facilitates information exchange between these tools. The first application's goal is to facilitate layout planning by means of a CAVE system. The high degree of immersion offered by this system allows users to judge spatial relations in entire factories through cost-effective virtual walkthroughs. Additionally, information like material flow data can be visualized within the virtual environment to further help planners evaluate the factory layout comprehensively. The second application focuses on individual machines, with the goal of helping planners find ideal configurations by providing a visualization solution to explore the multi-dimensional parameter space of a single machine. This is made possible by meta-models of the parameter space, which are then visualized using the HyperSlice concept. In this paper, we present a concept that shows how these applications can be integrated into one comprehensive planning tool that allows planning factories while considering factors of different planning levels at the same time. The concept is backed by Virtual Production Intelligence (VPI), which integrates data from different levels of factory processes and includes additional data sources and algorithms that provide further information to be used by the applications.
In conclusion, we present an integration concept for VR- and visualization-based software tools that facilitates the communication of interdependencies between different factory planning tasks. As the first steps towards creating a comprehensive factory planning solution, we demonstrate the integration of the aforementioned two use-cases by applying VPI. Finally, we review the proposed concept by discussing its benefits and pointing out potential implementation pitfalls.

Extended Pie Menus for Immersive Virtual Environments

Pie menus are a well-known technique for interacting with 2D environments, and a large body of research documents their usage and optimizations. Yet, comparatively little research has been done on the usability of pie menus in immersive virtual environments (IVEs). In this paper, we narrow this gap by presenting an implementation and evaluation of an extended hierarchical pie menu system for IVEs that can be operated with a six-degrees-of-freedom input device. Following an iterative development process, we first developed and evaluated a basic hierarchical pie menu system. To better understand how pie menus should be operated in IVEs, we tested this system in a pilot user study with 24 participants, focusing on item selection. Based on the results of the study, the system was refined, and elements like check boxes, sliders, and color map editors were added to provide extended functionality. The extended pie menus were then integrated into an existing VR application, and an expert review with five experts was performed to identify potential design issues. Overall, the results indicated high performance and an efficient design.
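Item selection in a pie menu comes down to mapping a pointing direction to a sector. Assuming the 6-DOF device's pointing ray has already been projected into the menu plane as a 2D offset (dx, dy) from the menu center, a minimal sketch of selection with a central dead zone follows. The function name, the dead-zone radius, and placing item 0 at the top with counter-clockwise ordering are assumptions, not details from the paper.

```python
import math
from typing import Optional


def pie_item(dx: float, dy: float, n_items: int, dead_zone: float = 0.1,
             start_angle: float = math.pi / 2) -> Optional[int]:
    """Index of the item whose sector contains the offset (dx, dy), or None
    inside the central dead zone. Item 0 is centered on start_angle (up by
    default); further items follow counter-clockwise."""
    if math.hypot(dx, dy) < dead_zone:
        return None
    sector = 2 * math.pi / n_items
    # Shift by half a sector so that each item is centered on its direction.
    angle = (math.atan2(dy, dx) - start_angle + sector / 2) % (2 * math.pi)
    return int(angle // sector) % n_items  # final modulo guards against rounding at 2*pi
```

With four items, pointing up, left, down, and right selects items 0, 1, 2, and 3, respectively; the dead zone lets users rest the pointer near the center without triggering a selection.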

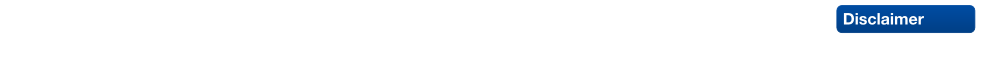

Poster: Hyperslice Visualization of Metamodels for Manufacturing Processes

In the modeling and simulation of manufacturing processes, complex models are used to examine and understand the behavior and properties of the product or process. To save computation time, global approximation models, often referred to as metamodels, serve as surrogates for the original complex models. Such metamodels are difficult to interpret because they usually have multi-dimensional input and output domains. We propose a visualization approach that combines hyperslices with direct volume rendering, training point visualization, and gradient trajectory navigation to help users understand such metamodels. Great care was taken to provide a high level of interactivity for the exploration of the data space.
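The poster lists gradient trajectory navigation among its techniques without defining it. Assuming it means following the gradient of one metamodel output from the current focal point, a minimal sketch uses central-difference gradients and fixed-size ascent steps clamped to the parameter bounds, recording the visited points as a trajectory the user could step along. The step size, stopping tolerance, and function names are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

Objective = Callable[[Sequence[float]], float]


def numerical_gradient(f: Objective, x: Sequence[float],
                       eps: float = 1e-5) -> List[float]:
    """Central-difference estimate of the gradient of f at x."""
    grad = []
    for i in range(len(x)):
        xp, xm = list(x), list(x)
        xp[i] += eps
        xm[i] -= eps
        grad.append((f(xp) - f(xm)) / (2 * eps))
    return grad


def gradient_trajectory(f: Objective, start: Sequence[float],
                        bounds: Sequence[Tuple[float, float]],
                        step: float = 0.05, iters: int = 100,
                        tol: float = 1e-6) -> List[List[float]]:
    """Gradient ascent on f from start, clamped to bounds; returns all visited points."""
    x = list(start)
    path = [list(x)]
    for _ in range(iters):
        g = numerical_gradient(f, x)
        if max(abs(c) for c in g) < tol:
            break  # (near-)stationary point reached
        x = [min(max(xi + step * gi, lo), hi)
             for xi, gi, (lo, hi) in zip(x, g, bounds)]
        path.append(list(x))
    return path
```

Clamping keeps the trajectory inside the metamodel's valid input domain, so a maximum at the boundary ends the path at the edge of the parameter space rather than in an extrapolated region.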