Publications

Efficient Modal Sound Synthesis on GPUs

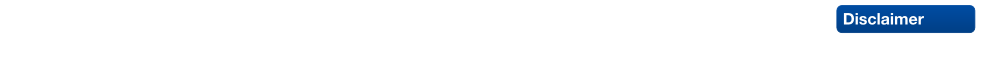

Modal sound synthesis is a useful method to interactively generate sounds for Virtual Environments. Forces acting on objects excite modes, which then have to be accumulated to generate the output sound. Due to the high audio sampling rate, algorithms using the CPU typically can handle only a few actively sounding objects. Additionally, force excitation should be applied at a high sampling rate. We present different algorithms to compute the synthesized sound using a GPU, and compare them to CPU implementations. The GPU algorithms show significantly higher performance and allow many objects to sound simultaneously.
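As a rough illustration of the accumulation step described above, the following CPU reference sketch sums exponentially damped sinusoids, one per mode, after a single impulse. It is not the paper's GPU algorithm; the function name `synthesize_modes`, the mode tuples, and all numeric values are assumptions chosen for illustration only.

```python
import math


def synthesize_modes(modes, sample_rate=44100, duration=0.5):
    """Sum damped sinusoids for modes excited by a unit impulse at t = 0.

    Each mode is a tuple (frequency_hz, damping_per_second, amplitude).
    """
    n = int(sample_rate * duration)
    out = [0.0] * n
    for freq, damping, amp in modes:
        omega = 2.0 * math.pi * freq
        for i in range(n):
            t = i / sample_rate
            # Every mode contributes to every output sample, which is why the
            # cost grows with (number of modes) x (audio sampling rate).
            out[i] += amp * math.exp(-damping * t) * math.sin(omega * t)
    return out


# Hypothetical mode data, not taken from the paper.
example_modes = [(440.0, 6.0, 1.0), (1170.0, 9.0, 0.5), (2210.0, 14.0, 0.25)]
samples = synthesize_modes(example_modes, sample_rate=8000, duration=0.25)
```

The inner loop is independent per sample and per mode, which is the kind of structure that lends itself to parallel evaluation.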

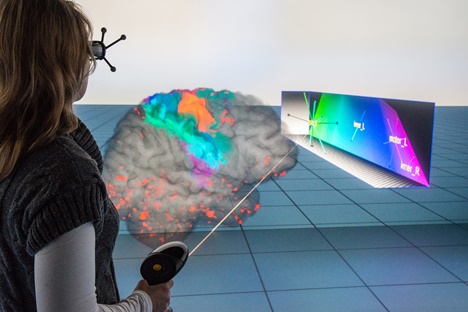

Quo Vadis CAVE – Does Immersive Visualization Still Matter?

More than two decades have passed since the introduction of the CAVE (Cave Automatic Virtual Environment), a landmark in the development of VR [1]. The CAVE addressed two major issues with head-mounted displays of the era. First, it provided an unprecedented field of view, greatly improving the feeling of presence in a virtual environment (VE). Second, this feeling was amplified because users didn’t have to rely on a virtual representation of their own bodies or parts thereof. Instead, they could physically enter the virtual space. Scientific visualization had been promulgated as a killer app for VR technology almost from day one. With the CAVE’s inception, it became possible to “put users within their data.” Proponents predicted two key advantages. First, immersive VR promised faster, more comprehensive understanding of complex, spatial relationships owing to head-tracked, stereoscopic rendering. Second, it would provide a more natural user interface, specifically for spatial interaction. In a seminal article, Andy van Dam and his colleagues proposed VR-enabled visualization as a midterm solution to the “accelerating data crisis” [2]. That is, the ability to generate data had for some time outpaced the ability to analyze it. Over the years, a number of studies have investigated the effects of VR-based visualizations in specific application scenarios. Recently, Bireswar Laha and his colleagues provided more general, empirical evidence for its benefits. Although VR and scientific visualization have matured and many of the original technical limitations have been resolved, immersive visualization has yet to find the widespread, everyday use that was claimed in the early days. At the same time, the demand for scalable visualization solutions is greater than ever. If anything, the gap between data generation and analysis capabilities has widened even more. So, two questions arise.
What should such scalable solutions look like, and what requirements arise regarding the underlying hardware and software and the overall methodology?

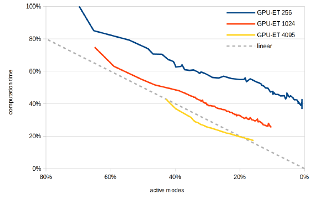

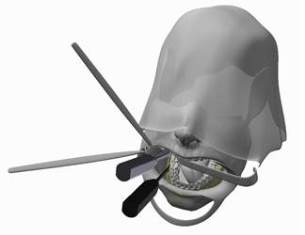

Preliminary Bone Sawing Model for a Virtual Reality-Based Training Simulator of Bilateral Sagittal Split Osteotomy

Successful bone sawing requires a high level of skill and experience, which could be gained by the use of Virtual Reality-based simulators. A key aspect of these medical simulators is realistic force feedback. The aim of this paper is to model the bone sawing process in order to develop a valid training simulator for the bilateral sagittal split osteotomy, the most often applied corrective surgery in case of a malposition of the mandible. Bone samples from a human cadaveric mandible were tested using a designed experimental system. Image processing and statistical analysis were used for the selection of four models for the bone sawing process. The results revealed a polynomial dependency between the material removal rate and the applied force. Differences between the three segments of the osteotomy line and between the cortical and cancellous bone were highlighted.

Virtuelle Realität als Gegenstand und Werkzeug der Wissenschaft

This article presents the discipline of virtual reality (VR) as an important manifestation of virtuality. VR is understood as a special form of human-computer interface that involves several human senses in the interaction and creates in the user the illusion of perceiving a computer-generated artificial world as real. The article shows that extensive methodological research across several disciplines is necessary to reach this ultimate goal, or at least to come closer to it. Finally, three different applications are presented that demonstrate the many ways in which VR can be used as a tool in the sciences.

Reorientation in Virtual Environments using Interactive Portals

Real walking is the most natural method of navigation in virtual environments. However, physical space limitations often prevent or complicate its continuous use. Thus, many real walking interfaces, among them redirected walking techniques, depend on a reorientation technique that redirects the user away from physical boundaries when they are reached. However, existing reorientation techniques typically actively interrupt the user, or depend on the application of rotation gain that can lead to simulator sickness. In our approach, the user is reoriented using portals. While one portal is placed automatically to guide the user to a safe position, she controls the target selection and physically walks through the portal herself to perform the reorientation. In a formal user study we show that the method does not cause additional simulator sickness, and participants walk more than with point-and-fly navigation or teleportation, at the expense of longer completion times.

Best Technote!

@INPROCEEDINGS{freitag2014,

author={S. Freitag and D. Rausch and T. Kuhlen},

booktitle={2014 IEEE Symposium on 3D User Interfaces (3DUI)},

title={{Reorientation in Virtual Environments Using Interactive Portals}},

year={2014},

pages={119-122},

doi={10.1109/3DUI.2014.6798852},

month={March},

}

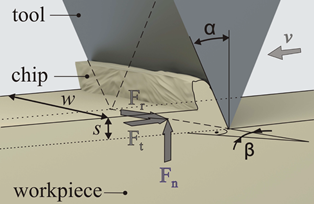

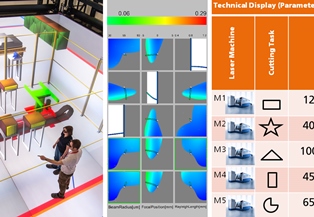

Advanced Virtual Reality and Visualization Support for Factory Layout Planning

Recently, more and more Virtual Reality (VR) and visualization solutions to support the factory layout planning process have been presented. On the one hand, VR enables planners to create cost-effective virtual prototypes and to perform virtual walkthroughs, e.g., to verify proposed layouts. On the other hand, visualization helps to gain insight into simulation results that, e.g., describe the various interdependencies between machines, such as material flows. In order to create truly effective tools based on VR and visualization, the right techniques have to be chosen and adapted to the specific problem. However, the solutions published so far usually do not exploit these technologies to their full potential.

To address this situation, we present a VR-based planning assistant that offers advanced visualization functionality that furthers the understanding of planning-relevant parameters, while also relying on established techniques. In order to realize a useful approach, the assistant fulfills three central requirements:

- A smooth integration of the assistant into existing workflows is essential in order to not disrupt them. Consequently, existing tools need to be properly integrated and a mechanism for data exchange with these tools has to be provided.

- Visualization is the main means of facilitating insight. Instead of only displaying factory models, advanced techniques to visualize more abstract quantities, like material flows or process chains, have to be provided.

- VR systems vary in the degree of immersion they offer, ranging from non-immersive desktop systems to fully immersive Cave Automatic Virtual Environment (CAVE) systems. Scalability among these systems allows adapting high-end installations as well as cost-effective solutions. However, to ensure good scalability, devising a flexible system abstraction and a unified interaction concept are essential.

The basis for our planning assistant is an immersive VR (IVR) system in the form of a CAVE. Our solution allows performing virtual walkthroughs and offers additional visualization techniques for planning-relevant data.

A 3D Collaborative Virtual Environment to Integrate Immersive Virtual Reality into Factory Planning Processes

In the recent past, efforts have been made to adopt immersive virtual reality (IVR) systems as a means for design reviews in factory layout planning. While several solutions for this scenario have been developed, their integration into existing planning workflows has not been discussed yet. From our own experience of developing such a solution, we conclude that the use of IVR systems, like CAVEs, is rather disruptive to existing workflows. One major reason for this is that IVR systems are not available everywhere due to their high costs and large physical footprint. As a consequence, planners have to travel to sites offering such systems, which is especially prohibitive as planners are usually geographically dispersed. In this paper, we present a concept for integrating IVR systems into the factory planning process by means of a 3D collaborative virtual environment (3DCVE) without disrupting the underlying planning workflow. The goal is to combine non-immersive and IVR systems to facilitate collaborative walkthrough sessions. However, this scenario poses unique challenges to interactive collaborative work that, to the best of our knowledge, have not been addressed so far. In this regard, we discuss approaches to viewpoint sharing, telepointing and annotation support that are geared towards distributed heterogeneous 3DCVEs.

Geometrically Limited Constraints for Physics-based Haptic Rendering

In this paper, a single-point haptic rendering technique is proposed which uses a constraint-based physics simulation approach. Geometries are sampled using point shell points, each associated with a small disk, that jointly result in a closed surface for the whole shell. The geometric information is incorporated into the constraint-based simulation using newly introduced geometrically limited contact constraints which are active in a restricted region corresponding to the disks in contact. The usage of disk constraints not only creates closed surfaces, which is important for single-point rendering, but also tackles the problem of over-constrained contact situations in convex geometric setups. Furthermore, an iterative solving scheme for dynamic problems that accounts for the proposed constraint type is presented. Finally, an evaluation of the simulation approach shows the advantages compared to standard contact constraints regarding the quality of the rendered forces.

Data-flow Oriented Software Framework for the Development of Haptic-enabled Physics Simulations

This paper presents a software framework that supports the development of haptic-enabled physics simulations. The framework provides tools aiming to facilitate a fast prototyping process by utilizing component and flow-oriented architectures, while maintaining the capability to create efficient code which fulfills the performance requirements induced by the target applications. We argue that such a framework should not only ease the creation of prototypes but also help to effectively and efficiently evaluate them. To this end, we provide analysis tools and the possibility to build problem-oriented evaluation environments based on the described software concepts. As a motivating use case, we present a project with the goal of developing a haptic-enabled medical training simulator for a maxillofacial procedure. With this example, we demonstrate how the described framework can be used to create a simulation architecture for a complex haptic simulation and how the tools assist in the prototyping process.

An Evaluation of a Smart-Phone-Based Menu System for Immersive Virtual Environments

System control is a crucial task for many virtual reality applications and can be realized in a broad variety of ways, the most common of which is the use of graphical menus. These are often implemented as part of the virtual environment, but can also be displayed on mobile devices. Until now, many systems and studies have been published on using mobile devices such as personal digital assistants (PDAs) to realize such menu systems. However, most of these systems were proposed long before smartphones existed and evolved into everyday companions for many people. Thus, it is worthwhile to evaluate the applicability of modern smartphones as carriers of menu systems for immersive virtual environments. To do so, we implemented a platform-independent menu system for smartphones and evaluated it in two different ways. First, we performed an expert review in order to identify potential design flaws and to test the applicability of the approach for demonstrations of VR applications from a demonstrator's point of view. Second, we conducted a user study with 21 participants to test user acceptance of the menu system. The results of the two studies were contradictory: while experts appreciated the system very much, user acceptance was lower than expected. From these results we could draw conclusions on how smartphones should be used to realize system control in virtual environments, and we could identify starting points for future research on the topic.

Integration of VR and Visualization Tools to Foster the Factory Planning Process

Recently, virtual reality (VR) and visualization have been increasingly employed to facilitate various tasks in factory planning processes. One major challenge in this context lies in the exchange of information between expert groups concerned with distinct planning tasks in order to make planners aware of inter-dependencies. For example, changes to the configuration of individual machines can have an effect on the overall production performance and vice versa. To this end, we developed VR- and visualization-based planning tools for two distinct planning tasks for which we present an integration concept that facilitates information exchange between these tools. The first application's goal is to facilitate layout planning by means of a CAVE system. The high degree of immersion offered by this system allows users to judge spatial relations in entire factories through cost-effective virtual walkthroughs. Additionally, information like material flow data can be visualized within the virtual environment to further assist planners to comprehensively evaluate the factory layout. Another application focuses on individual machines with the goal to help planners find ideal configurations by providing a visualization solution to explore the multi-dimensional parameter space of a single machine. This is made possible through the use of meta-models of the parameter space that are then visualized by means of the concept of Hyperslice. In this paper we present a concept that shows how these applications can be integrated into one comprehensive planning tool that allows for planning factories while considering factors of different planning levels at the same time. The concept is backed by Virtual Production Intelligence (VPI), which integrates data from different levels of factory processes, while including additional data sources and algorithms to provide further information to be used by the applications. 
In conclusion, we present an integration concept for VR- and visualization-based software tools that facilitates the communication of interdependencies between different factory planning tasks. As the first steps towards creating a comprehensive factory planning solution, we demonstrate the integration of the aforementioned two use-cases by applying VPI. Finally, we review the proposed concept by discussing its benefits and pointing out potential implementation pitfalls.

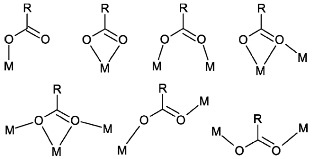

An Unusual Linker and an Unexpected Node: CaCl2 Dumbbells Linked by Proline to Form Square Lattice Networks

Four new structures based on CaCl2 and proline are reported, all with an unusual Cl–Ca–Cl moiety. Depending on the stoichiometry and the chirality of the amino acid, this metal dihalide fragment represents the core of a mononuclear Ca complex or may be linked by the carboxylate to form extended structures. A cisoid coordination of the halide atoms at the calcium cation is encountered in a chain polymer. In the 2D structures, CaCl2 dumbbells act as nodes and are crosslinked by either enantiomerically pure or racemic proline to form square lattice nets. Extensive database searches and topology tests prove that this structure type is rare for MCl2 dumbbells in general and unprecedented for Ca compounds.

@Article{Lamberts2014,

Title = {{An Unusual Linker and an Unexpected Node: CaCl2 Dumbbells Linked by Proline to Form Square Lattice Networks}},

Author = {Lamberts, Kevin and Porsche, Sven and Hentschel, Bernd and Kuhlen, Torsten and Englert, Ulli},

Journal = {CrystEngComm},

Year = {2014},

Pages = {3305-3311},

Volume = {16},

Doi = {10.1039/C3CE42357C},

Issue = {16},

Publisher = {The Royal Society of Chemistry},

Url = {http://dx.doi.org/10.1039/C3CE42357C}

}

The Human Brain Project - Chances and Challenges for Cognitive Systems

The Human Brain Project (HBP) is one of the largest scientific initiatives dedicated to the research of the human brain worldwide. Over 80 research groups from a broad variety of scientific areas, such as neuroscience, simulation science, high performance computing, robotics, and visualization, work together in this European research initiative. The work at hand identifies chances and challenges for cognitive systems engineering resulting from the HBP research activities. Besides the main goal of the HBP, gaining deeper insights into the structure and function of the human brain, cognitive systems research can directly benefit from the creation of cognitive architectures, the simulation of neural networks, and the application of these in the context of (neuro-)robotics. Nevertheless, challenges arise regarding the utilization and transformation of these research results for cognitive systems, which will be discussed in this paper. Tools necessary to cope with these challenges are visualization techniques that help to understand and gain insights into complex data. Therefore, this paper presents a set of visualization techniques developed at the Virtual Reality Group at RWTH Aachen University.

@inproceedings{Weyers2014,

author = {Weyers, Benjamin and Nowke, Christian and H{\"{a}}nel, Claudia and Zielasko, Daniel and Hentschel, Bernd and Kuhlen, Torsten},

booktitle = {Workshop Kognitive Systeme: Mensch, Teams, Systeme und Automaten},

title = {{The Human Brain Project – Chances and Challenges for Cognitive Systems}},

year = {2014}

}

Interactive Volume Rendering for Immersive Virtual Environments

Immersive virtual environments (IVEs) are an appropriate platform for 3D data visualization and exploration as, for example, the spatial understanding of these data is facilitated by stereo technology. However, in comparison to desktop setups, a lower latency and thus a higher frame rate is mandatory. In this paper we argue that current realizations of direct volume rendering do not provide the latency and visual quality needed to avoid impairing immersion in virtual environments. To this end, we analyze published acceleration techniques and discuss their potential in IVEs; furthermore, head tracking is considered as a main challenge but also a starting point for specific optimization techniques.

@inproceedings{Hanel2014,

author = {H{\"{a}}nel, Claudia and Weyers, Benjamin and Hentschel, Bernd and Kuhlen, Torsten W.},

booktitle = {IEEE VIS International Workshop on 3DVis: Does 3D really make sense for Data Visualization?},

title = {{Interactive Volume Rendering for Immersive Virtual Environments}},

year = {2014}

}

Visualization of Memory Access Behavior on Hierarchical NUMA Architectures

The available memory bandwidth of existing high performance computing platforms is increasingly becoming the limiting factor for various applications. Therefore, modern microarchitectures integrate the memory controller on the processor chip, which leads to a non-uniform memory access behavior of such systems. This access behavior in turn entails major challenges in the development of shared memory parallel applications. Improperly implemented memory access results in a poor ratio between local and remote memory accesses and causes low performance on such architectures. To address this problem, the developers of such applications rely on tools to make these kinds of performance problems visible. This work presents a new tool for the visualization of performance data of the non-uniform memory access behavior. Because of the visual design of the tool, the developer is able to judge the severity of remote memory access in a time-dependent simulation, which is currently not possible using existing tools.

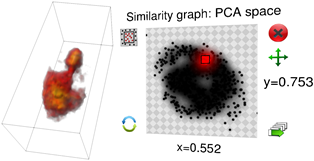

Poster: Visualizing Geothermal Simulation Data with Uncertainty

Simulations of geothermal reservoirs inherently contain uncertainty due to the fact that the underlying physical models are created from sparse data. Moreover, this uncertainty often cannot be completely expressed by simple key measures (e.g., mean and standard deviation), as the distribution of possible values is often not unimodal. Nevertheless, existing visualizations of these simulation data often completely neglect displaying the uncertainty, or are limited to a mean/variance representation. We present an approach to visualize geothermal simulation data that deals with both cases: scalar uncertainties as well as general ensembles of data sets. Users can interactively define two-dimensional transfer functions to visualize data and uncertainty values directly, or browse a 2D scatter plot representation to explore different possibilities in an ensemble.

Poster: Guided Tour Creation in Immersive Virtual Environments

Guided tours have been found to be a good approach to introducing users to previously unknown virtual environments and to giving them access to relevant points of interest. Two important tasks during the creation of guided tours are the definition of views onto relevant information and their arrangement into an order in which they are to be visited. To allow a maximum of flexibility, an interactive approach to these tasks is desirable. To this end, we present and evaluate two approaches to the mentioned interaction tasks in this paper. The first approach is a hybrid 2D/3D interaction metaphor in which a tracked tablet PC is used as a virtual digital camera that allows users to specify and order views onto the scene. The second one is a purely 3D version of the first one, which does not require a tablet PC. Both approaches were compared in an initial user study, whose results indicate a superiority of the 3D over the hybrid approach.

@InProceedings{Pick2014,

Title = {{P}oster: {G}uided {T}our {C}reation in {I}mmersive {V}irtual {E}nvironments},

Author = {Sebastian Pick and Andreas B\"{o}nsch and Irene Tedjo-Palczynski and Bernd Hentschel and Torsten Kuhlen},

Booktitle = {IEEE Symposium on 3D User Interfaces (3DUI), 2014},

Year = {2014},

Month = {March},

Pages = {151-152},

Doi = {10.1109/3DUI.2014.6798865},

Url = {http://ieeexplore.ieee.org/xpl/abstractReferences.jsp?arnumber=6798865}

}

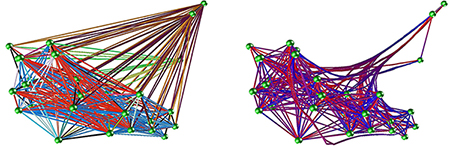

Poster: Interactive 3D Force-Directed Edge Bundling on Clustered Edges

Graphs play an important role in data analysis. In particular, graphs with a natural spatial embedding can benefit from a 3D visualization. But even more than in 2D, graphs visualized as intuitively readable 3D node-link diagrams can become very cluttered. This makes graph exploration and data analysis difficult. For this reason, we focus on the challenge of reducing edge clutter by utilizing edge bundling. In this paper we introduce a parallel, edge-cluster-based accelerator for the force-directed edge bundling algorithm presented in [Holten2009]. This opens up the possibility for user interaction during and after both the clustering and the bundling.
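For orientation, the sketch below shows one heavily simplified iteration of force-directed edge bundling in 3D: each edge is subdivided into points, neighboring points on the same edge pull on each other like springs, and corresponding points on other edges attract each other. It is not the parallel, cluster-based accelerator from the poster, and it omits the edge compatibility measures and force normalization of the original algorithm. The names `subdivide` and `bundle_step`, the parameter values, and the assumption that all edges are oriented consistently and have the same number of subdivision points are illustrative choices.

```python
import math


def subdivide(p0, p1, n_points):
    """Return both endpoints plus n_points evenly spaced interior points."""
    return [
        tuple(p0[d] + (p1[d] - p0[d]) * i / (n_points + 1) for d in range(3))
        for i in range(n_points + 2)
    ]


def bundle_step(edges, stiffness=0.1, step=0.05, eps=1e-6):
    """Move interior subdivision points by one explicit force step."""
    new_edges = []
    for a, pts in enumerate(edges):
        moved = [pts[0]]  # endpoints stay fixed
        for i in range(1, len(pts) - 1):
            force = [0.0, 0.0, 0.0]
            # Spring forces from the two neighbors on the same edge.
            for neighbor in (pts[i - 1], pts[i + 1]):
                for d in range(3):
                    force[d] += stiffness * (neighbor[d] - pts[i][d])
            # Attraction towards the corresponding point on every other edge,
            # with magnitude 1 / distance.
            for b, other in enumerate(edges):
                if b == a:
                    continue
                diff = [other[i][d] - pts[i][d] for d in range(3)]
                dist = math.sqrt(sum(c * c for c in diff))
                if dist > eps:
                    for d in range(3):
                        force[d] += diff[d] / (dist * dist)
            moved.append(tuple(pts[i][d] + step * force[d] for d in range(3)))
        moved.append(pts[-1])
        new_edges.append(moved)
    return new_edges


# Two hypothetical parallel edges, one unit apart.
edges = [
    subdivide((0.0, 0.0, 0.0), (4.0, 0.0, 0.0), 3),
    subdivide((0.0, 1.0, 0.0), (4.0, 1.0, 0.0), 3),
]
bundled = bundle_step(edges)
```

Because every point is attracted to every other edge, the cost of a step grows quadratically with the number of edges, which is the motivation for restricting interactions to clusters of edges.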

Interactive Definition of Discrete Color Maps for Volume Rendered Data in Immersive Virtual Environments

The visual discrimination of different structures in one or multiple combined volume data sets is generally done with individual transfer functions that can usually be adapted interactively. Immersive virtual environments support the depth perception and thus the spatial orientation in these volume visualizations. However, complex 2D menus for elaborate transfer function design cannot be easily integrated. We therefore present an approach for changing the color mapping during volume exploration with direct volume interaction and an additional 3D widget. In this way we incorporate the modification of a color mapping for a large number of discretely labeled brain areas in an intuitive way into the virtual environment. We use our approach for the analysis of a patient’s data with a brain tissue degenerating disease to allow for an interactive analysis of affected regions.

@inproceedings{Hanel2014a,

address = {Minneapolis},

author = {H{\"{a}}nel, Claudia and Freitag, Sebastian and Hentschel, Bernd and Kuhlen, Torsten},

booktitle = {2nd International Workshop on Immersive Volumetric Interaction (WIVI 2014) at IEEE Virtual Reality 2014},

editor = {Banic, Amy and O'Leary, Patrick and Laha, Bireswar},

title = {{Interactive Definition of Discrete Color Maps for Volume Rendered Data in Immersive Virtual Environments}},

year = {2014}

}

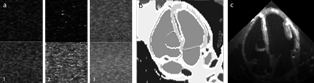

Software Phantom with Realistic Speckle Modeling for Validation of Image Analysis Methods in Echocardiography

Computer-assisted processing and interpretation of medical ultrasound images is one of the most challenging tasks within image analysis. Physical phenomena in ultrasonographic images, e.g., the characteristic speckle noise and shadowing effects, make the majority of standard methods from image analysis suboptimal. Furthermore, validation of adapted computer vision methods proves to be difficult due to missing ground truth information. There is no widely accepted software phantom in the community, and existing software phantoms are not flexible enough to support the use of specific speckle models for different tissue types, e.g., muscle and fat tissue. In this work we propose an anatomical software phantom with a realistic speckle pattern simulation to fill this gap and provide a flexible tool for validation purposes in medical ultrasound image analysis. We discuss the generation of speckle patterns and perform statistical analysis of the simulated textures to obtain quantitative measures of the realism and accuracy of the resulting textures.

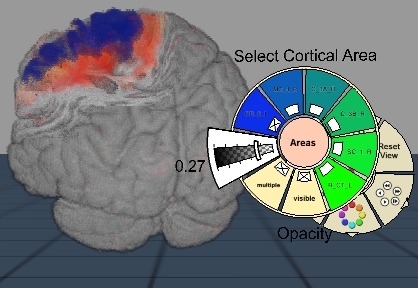

Interactive 3D Visualization of Structural Changes in the Brain of a Person With Corticobasal Syndrome

The visualization of the progression of brain tissue loss in neurodegenerative diseases like corticobasal syndrome (CBS) not only provides information about the localization and distribution of the volume loss, but also helps to understand the course and the causes of this neurodegenerative disorder. The visualization of such medical imaging data is often based on 2D sections, because they show both internal and external structures in one image. Spatial information, however, is lost. 3D visualization of imaging data can solve this problem, but it faces the difficulty that more internally located structures may be occluded by structures near the surface. Here, we present an application with two designs for the 3D visualization of the human brain to address these challenges. In the first design, brain anatomy is displayed semi-transparently; it is supplemented by an anatomical section and cortical areas for spatial orientation, and the volumetric data of volume loss. The second design is guided by the principle of importance-driven volume rendering: a direct line of sight to the relevant structures in the deeper parts of the brain is provided by cutting out a frustum-like piece of brain tissue. The application was developed to run both in standard desktop environments and in immersive virtual reality environments with stereoscopic viewing for improved depth perception. We conclude that the presented application facilitates the perception of the extent of brain degeneration with respect to its localization and affected regions.

@article{Hanel2014b,

author = {H{\"{a}}nel, Claudia and Pieperhoff, Peter and Hentschel, Bernd and Amunts, Katrin and Kuhlen, Torsten},

issn = {1662-5196},

journal = {Frontiers in Neuroinformatics},

number = {42},

pmid = {24847243},

title = {{Interactive 3D visualization of structural changes in the brain of a person with corticobasal syndrome.}},

url = {http://journal.frontiersin.org/article/10.3389/fninf.2014.00042/abstract},

volume = {8},

year = {2014}

}
