Profile

Dr. Sebastian Pick

Publications

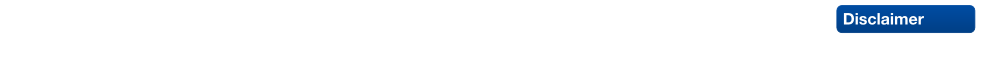

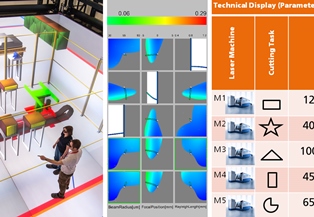

Interactive Visual Analysis of Multi-dimensional Metamodels

In the simulation of manufacturing processes, complex models are used to examine process properties. To save computation time, so-called metamodels serve as surrogates for the original models. Metamodels are inherently difficult to interpret because they resemble multi-dimensional functions f : R^n → R^m that map configuration parameters to production criteria. We propose a multi-view visualization application called memoSlice that combines several visualization techniques specially adapted to the analysis of metamodels. With our application, we enable users not only to improve their understanding of a metamodel but also to easily optimize processes. We pay special attention to providing a high level of interactivity by implementing specialized parallelization techniques that give timely feedback on user interactions. In this paper, we outline these parallelization techniques and demonstrate their effectiveness by means of micro- and high-level measurements.

@inproceedings {pgv.20181098,

booktitle = {Eurographics Symposium on Parallel Graphics and Visualization},

editor = {Hank Childs and Fernando Cucchietti},

title = {{Interactive Visual Analysis of Multi-dimensional Metamodels}},

author = {Gebhardt, Sascha and Pick, Sebastian and Hentschel, Bernd and Kuhlen, Torsten Wolfgang},

year = {2018},

publisher = {The Eurographics Association},

ISSN = {1727-348X},

ISBN = {978-3-03868-054-3},

DOI = {10.2312/pgv.20181098}

}

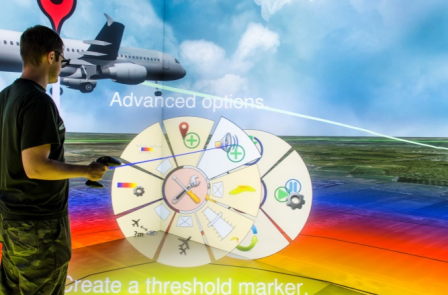

Comparison of a speech-based and a pie-menu-based interaction metaphor for application control

Choosing an adequate system control technique is crucial to support complex interaction scenarios in virtual reality applications. In this work, we compare an existing hierarchical pie-menu-based approach with a speech-recognition-based one in terms of task performance and user experience in a formal user study. As testbed, we use a factory planning application featuring a large set of system control options.

@INPROCEEDINGS{Pick:691795,

author = {Pick, Sebastian and Puika, Andrew S. and Kuhlen, Torsten},

title = {{C}omparison of a speech-based and a pie-menu-based

interaction metaphor for application control},

address = {Piscataway, NJ},

publisher = {IEEE},

reportid = {RWTH-2017-06169},

pages = {381-382},

year = {2017},

comment = {2017 IEEE Virtual Reality (VR) : proceedings : March 18-22,

2017, Los Angeles, CA, USA / Evan Suma Rosenberg, David M.

Krum, Zachary Wartell, Betty Mohler, Sabarish V. Babu, Frank

Steinicke, and Victoria Interrante ; sponsored by IEEE

Computer Society, Visualization and Graphics Technical

Committee},

booktitle = {2017 IEEE Virtual Reality (VR) :

proceedings : March 18-22, 2017, Los

Angeles, CA, USA / Evan Suma Rosenberg,

David M. Krum, Zachary Wartell, Betty

Mohler, Sabarish V. Babu, Frank

Steinicke, and Victoria Interrante ;

sponsored by IEEE Computer Society,

Visualization and Graphics Technical

Committee},

month = {Mar},

date = {2017-03-18},

organization = {2017 IEEE Virtual Reality, Los

Angeles, CA (USA), 18 Mar 2017 - 22 Mar

2017},

cin = {124620 / 120000 / 080025},

cid = {$I:(DE-82)124620_20151124$ / $I:(DE-82)120000_20140620$ /

$I:(DE-82)080025_20140620$},

pnm = {B-1 - Virtual Production Intelligence},

pid = {G:(DE-82)X080025-B-1},

typ = {PUB:(DE-HGF)7 / PUB:(DE-HGF)8},

UT = {WOS:000403149400114},

doi = {10.1109/VR.2017.7892336},

url = {http://publications.rwth-aachen.de/record/691795},

}

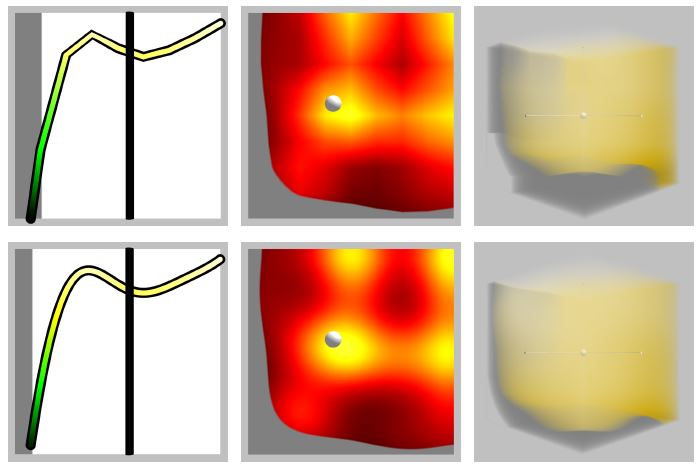

Utilizing Immersive Virtual Reality in Everyday Work

Applications of Virtual Reality (VR) have been repeatedly explored with the goal to improve the data analysis process of users from different application domains, such as architecture and simulation sciences. Unfortunately, making VR available in professional application scenarios or even using it on a regular basis has proven to be challenging. We argue that everyday usage environments, such as office spaces, have introduced constraints that critically affect the design of interaction concepts since well-established techniques might be difficult to use. In our opinion, it is crucial to understand the impact of usage scenarios on interaction design, to successfully develop VR applications for everyday use. To substantiate our claim, we define three distinct usage scenarios in this work that primarily differ in the amount of mobility they allow for. We outline each scenario's inherent constraints but also point out opportunities that may be used to design novel, well-suited interaction techniques for different everyday usage environments. In addition, we link each scenario to a concrete application example to clarify its relevance and show how it affects interaction design.

Remain Seated: Towards Fully-Immersive Desktop VR

In this work, we describe the scenario of fully-immersive desktop VR, which serves the overall goal of integrating seamlessly with the existing workflows and workplaces of data analysts and researchers, so that they can benefit from the gain in productivity when immersed in their data spaces. Furthermore, we provide a literature review showing the status quo of techniques and methods available for realizing this scenario under the stated restrictions. Finally, we propose a concept for an analysis framework, covering the design decisions already made and those still open, to outline how the described scenario and the collected methods are feasible in a real use case.

Virtual Production Intelligence

The research area Virtual Production Intelligence (VPI) focuses on the integrated support of collaborative planning processes for production systems and products. The research concentrates on processes for information processing in the design domains Factory and Machine. These processes provide the integration and interactive analysis of emerging, mostly heterogeneous planning information. The demonstrators (flapAssist, memoSlice, and the VPI platform) are information systems that serve to validate the scientific approaches and aim to realize continuous and consistent information management in terms of the Digital Factory. Central challenges are semantic information integration (e.g., by means of metamodelling), the subsequent evaluation, and the visualization of planning information (e.g., by means of Visual Analytics and Virtual Reality). All scientific and technical work is done within an interdisciplinary team composed of engineers, computer scientists, and physicists.

@BOOK{Brecher:683508,

key = {683508},

editor = {Brecher, Christian and Özdemir, Denis},

title = {{I}ntegrative {P}roduction {T}echnology : {T}heory and

{A}pplications},

address = {Cham},

publisher = {Springer International Publishing},

reportid = {RWTH-2017-01369},

isbn = {978-3-319-47451-9},

pages = {XXXIX, 1100 Seiten : Illustrationen},

year = {2017},

cin = {417310 / 080025},

cid = {$I:(DE-82)417310_20140620$ / $I:(DE-82)080025_20140620$},

typ = {PUB:(DE-HGF)3},

doi = {10.1007/978-3-319-47452-6},

url = {http://publications.rwth-aachen.de/record/683508},

}

Design and Evaluation of Data Annotation Workflows for CAVE-like Virtual Environments

Data annotation finds increasing use in Virtual Reality applications with the goal to support the data analysis process, such as architectural reviews. In this context, a variety of different annotation systems for application to immersive virtual environments have been presented. While many interesting interaction designs for the data annotation workflow have emerged from them, important details and evaluations are often omitted. In particular, we observe that the process of handling metadata to interactively create and manage complex annotations is often not covered in detail. In this paper, we strive to improve this situation by focusing on the design of data annotation workflows and their evaluation. We propose a workflow design that facilitates the most important annotation operations, i.e., annotation creation, review, and modification. Our workflow design is easily extensible in terms of supported annotation and metadata types as well as interaction techniques, which makes it suitable for a variety of application scenarios. To evaluate it, we have conducted a user study in a CAVE-like virtual environment in which we compared our design to two alternatives in terms of a realistic annotation creation task. Our design obtained good results in terms of task performance and user experience.

SWIFTER: Design and Evaluation of a Speech-based Text Input Metaphor for Immersive Virtual Environments

Text input is an important part of the data annotation process, where text is used to capture ideas and comments. For text entry in immersive virtual environments, for which standard keyboards usually do not work, various approaches have been proposed. While these solutions have mostly proven effective, there still remain certain shortcomings making further investigations worthwhile. Motivated by recent research, we propose the speech-based multimodal text entry system SWIFTER, which strives for simplicity while maintaining good performance. In an initial user study, we compared our approach to smartphone-based text entry within a CAVE-like virtual environment. Results indicate that SWIFTER reaches an average input rate of 23.6 words per minute and is positively received by users in terms of user experience.

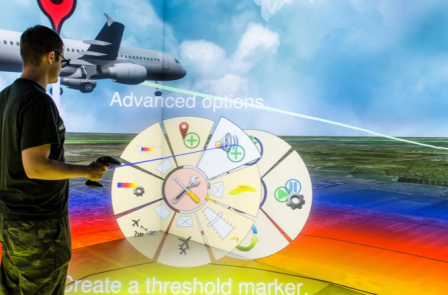

Interactive Simulation of Aircraft Noise in Aural and Visual Virtual Environments

This paper describes a novel aircraft noise simulation technique developed at RWTH Aachen University, which makes use of aircraft noise auralization and 3D visualization to make aircraft noise both heard and seen in immersive Virtual Reality (VR) environments. This technique is intended to be used to increase the residents’ acceptance of aircraft noise by presenting noise changes in a more directly relatable form, and also to aid in understanding what contributes to the residents’ subjective annoyance via psychoacoustic surveys. This paper describes the technique as well as some of its initial applications. The reasoning behind the development of such a technique is that the issue of aircraft noise experienced by residents in airport vicinities is one of subjective annoyance. Any efforts at noise abatement have conventionally been presented to residents in terms of noise level reductions in conventional metrics such as A-weighted level or equivalent sound level Leq. This conventional approach, however, proves insufficient for increasing aircraft noise acceptance for two main reasons: first, the residents have only a rudimentary understanding of changes in decibel levels, and second, the conventional metrics do not fully capture what the residents actually find annoying, i.e., the characteristics of aircraft noise they find least acceptable. To minimize resistance to air-traffic expansion, the acceptance of aircraft noise has to be increased, which requires such a new approach to noise assessment.

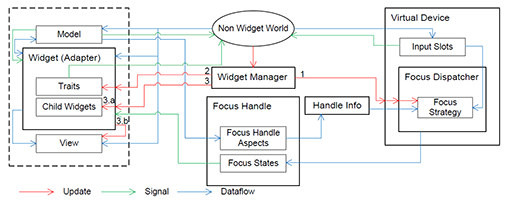

Vista Widgets: A Framework for Designing 3D User Interfaces from Reusable Interaction Building Blocks

Virtual Reality (VR) has been an active field of research for several decades, with 3D interaction and 3D User Interfaces (UIs) as important sub-disciplines. However, the development of 3D interaction techniques, and in particular combining several of them to construct complex and usable 3D UIs, remains challenging, especially in a VR context. In addition, there is currently only limited reusable software for implementing such techniques in comparison to traditional 2D UIs. To overcome this issue, we present ViSTA Widgets, a software framework for creating 3D UIs for immersive virtual environments. It extends the ViSTA VR framework by providing functionality to create multi-device, multi-focus-strategy interaction building blocks and means to easily combine them into complex 3D UIs. This is realized by introducing a device abstraction layer along with sophisticated focus management and functionality to create novel 3D interaction techniques and 3D widgets. We present the framework and illustrate its effectiveness with code and application examples accompanied by performance evaluations.

@InProceedings{Gebhardt2016,

Title = {{Vista Widgets: A Framework for Designing 3D User Interfaces from Reusable Interaction Building Blocks}},

Author = {Gebhardt, Sascha and Petersen-Krau, Till and Pick, Sebastian and Rausch, Dominik and Nowke, Christian and Knott, Thomas and Schmitz, Patric and Zielasko, Daniel and Hentschel, Bernd and Kuhlen, Torsten W.},

Booktitle = {Proceedings of the 22nd ACM Conference on Virtual Reality Software and Technology},

Year = {2016},

Address = {New York, NY, USA},

Pages = {251--260},

Publisher = {ACM},

Series = {VRST '16},

Acmid = {2993382},

Doi = {10.1145/2993369.2993382},

ISBN = {978-1-4503-4491-3},

Keywords = {3D interaction, 3D user interfaces, framework, multi-device, virtual reality},

Location = {Munich, Germany},

Numpages = {10},

Url = {http://doi.acm.org/10.1145/2993369.2993382}

}

A Framework for Developing Flexible Virtual-Reality-centered Annotation Systems

The act of note-taking is an essential part of the data analysis process. It has been realized in the form of various annotation systems that have been discussed in many publications. Unfortunately, the focus usually lies on high-level functionality, like interaction metaphors and display strategies. We argue that it is worthwhile to also consider software engineering aspects. Annotation systems often share similar functionality that can potentially be factored into reusable components with the goal of speeding up the creation of new annotation systems. At the same time, however, VR-centered annotation systems are subject not only to application-specific requirements but also to those arising from differences between the various VR platforms, like desktop VR setups or CAVEs. As a result, it is usually necessary to build application-specific VR-centered annotation systems from scratch instead of reusing existing components.

To improve this situation, we present a framework that provides reusable and adaptable building blocks to facilitate the creation of flexible annotation systems for VR applications. We discuss aspects ranging from data representation and persistence to the integration of new data types and interaction metaphors, especially in the context of multi-platform applications. To underpin the benefits of such an approach and promote the proposed concepts, we describe how the framework was applied to several of our own projects.

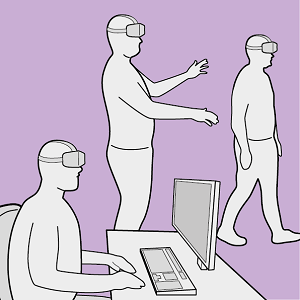

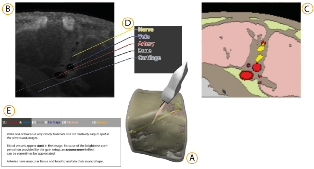

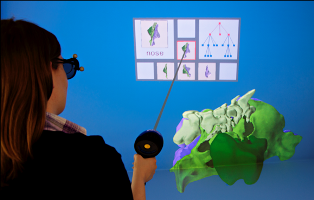

Simulation-based Ultrasound Training Supported by Annotations, Haptics and Linked Multimodal Views

When learning ultrasound (US) imaging, trainees must learn how to recognize structures, interpret textures and shapes, and simultaneously register the 2D ultrasound images to their 3D anatomical mental models. Alleviating the cognitive load imposed by these tasks should free the cognitive resources and thereby improve the learning process. We argue that the amount of cognitive load that is required to mentally rotate the models to match the images to them is too large and therefore negatively impacts the learning process. We present a 3D visualization tool that allows the user to naturally move a 2D slice and navigate around a 3D anatomical model. The slice is displayed in-place to facilitate the registration of the 2D slice in its 3D context. Two duplicates are also shown externally to the model; the first is a simple rendered image showing the outlines of the structures and the second is a simulated ultrasound image. Haptic cues are also provided to the users to help them maneuver around the 3D model in the virtual space. With the additional display of annotations and information of the most important structures, the tool is expected to complement the available didactic material used in the training of ultrasound procedures.

Cirque des Bouteilles: The Art of Blowing on Bottles

Making music by blowing on bottles is fun but challenging. We introduce a novel 3D user interface to play songs on virtual bottles. For this purpose the user blows into a microphone and the stream of air is recreated in the virtual environment and redirected to virtual bottles she is pointing to with her fingers. This is easy to learn and subsequently opens up opportunities for quickly switching between bottles and playing groups of them together to form complex melodies. Furthermore, our interface enables the customization of the virtual environment, by means of moving bottles, changing their type or filling level.

Poster: Scalable Metadata In- and Output for Multi-platform Data Annotation Applications

Metadata in- and output are important steps within the data annotation process. However, selecting techniques that effectively facilitate these steps is non-trivial, especially for applications that have to run on multiple virtual reality platforms. Not all techniques are applicable to or available on every system, so workflows have to be adapted on a per-system basis. Here, we describe a metadata handling system based on Android's Intent system that automatically adapts workflows and thereby makes manual adaptation unnecessary.

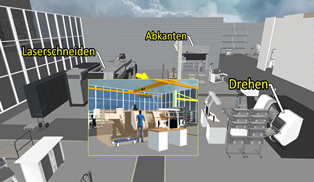

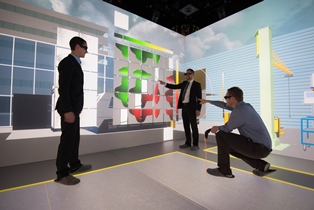

Poster: flapAssist: How the Integration of VR and Visualization Tools Fosters the Factory Planning Process

Virtual Reality (VR) systems are of growing importance for decision support in the context of the digital factory, especially factory layout planning. While current solutions focus either on virtual walkthroughs or on the visualization of more abstract information, a solution that provides both does not currently exist. To close this gap, we present a holistic VR application called Factory Layout Planning Assistant (flapAssist). It is meant to serve as a platform for planning the layout of factories, while also providing a wide range of analysis features. By being scalable from desktops to CAVEs and providing a link to a central integration platform, flapAssist integrates well into established factory planning workflows.

Ein Konzept zur Integration von Virtual Reality Anwendungen zur Verbesserung des Informationsaustauschs im Fabrikplanungsprozess - A Concept for the Integration of Virtual Reality Applications to Improve the Information Exchange within the Factory Planning Process

Factory planning is a highly heterogeneous process that involves various expert groups at the same time. In this context, the communication between different expert groups poses a major challenge. One reason for this lies in the differing domain knowledge of individual groups. However, since decisions made within one domain usually have an effect on others, it is essential to make these domain interactions visible to all involved experts in order to improve the overall planning process. In this paper, we present a concept that facilitates the integration of two separate virtual-reality- and visualization-based analysis tools for different application domains of the planning process. The concept was developed in the context of Virtual Production Intelligence and aims to make domain interactions visible, such that the aforementioned challenges can be mitigated.

@Article{Pick2015,

Title = {{Ein Konzept zur Integration von Virtual Reality Anwendungen zur Verbesserung des Informationsaustauschs im Fabrikplanungsprozess}},

Author = {Pick, S. and Gebhardt, S. and Hentschel, B. and Kuhlen, T. W. and Reinhard, R. and Büscher, C. and Al Khawli, T. and Eppelt, U. and Voet, H. and Utsch, J.},

Journal = {Tagungsband 12. Paderborner Workshop Augmented \& Virtual Reality in der Produktentstehung},

Year = {2015},

Pages = {139--152}

}

Ein Ansatz zur Softwaretechnischen Integration von Virtual Reality Anwendungen am Beispiel des Fabrikplanungsprozesses - An Approach for the Softwaretechnical Integration of Virtual Reality Applications by the Example of the Factory Planning Process

The integration of independent applications is a complex task from a software engineering perspective. Nonetheless, it entails significant benefits, especially in the context of Virtual Reality (VR) supported factory planning, e.g., to communicate interdependencies between different domains. To emphasize this aspect, we integrated two independent VR and visualization applications from the context of factory planning into a holistic planning solution. Special focus was put on parallelization and interaction aspects, while also considering more general requirements of such an integration process. In summary, we present technical solutions for the effective integration of several VR applications into a holistic solution. The effectiveness of the approach is demonstrated by performance measurements.

@Article{Gebhardt2015,

Title = {{Ein Ansatz zur Softwaretechnischen Integration von Virtual Reality Anwendungen am Beispiel des Fabrikplanungsprozesses}},

Author = {Gebhardt, S. and Pick, S. and Hentschel, B. and Kuhlen, T. W. and Reinhard, R. and Büscher, C.},

Journal = {Tagungsband 12. Paderborner Workshop Augmented \& Virtual Reality in der Produktentstehung},

Year = {2015},

Pages = {153--166}

}

Immersive Art: Using a CAVE-like Virtual Environment for the Presentation of Digital Works of Art

Digital works of art are often created using some kind of modeling software, like Cinema4D. Usually they are presented in a non-interactive form, like large Diasecs, and can thus only be experienced by passive viewing. To explore alternative, more captivating presentation channels, we investigate the use of a CAVE virtual reality (VR) system as an immersive and interactive presentation platform in this paper. To this end, in a collaboration with an artist, we built an interactive VR experience from one of his existing works. We provide details on our design and report on the results of a qualitative user study.


@Article{Pick2015,

Title = {{Immersive Art: Using a CAVE-like Virtual Environment for the Presentation of Digital Works of Art}},

Author = {Pick, Sebastian and B\"{o}nsch, Andrea and Scully, Dennis and Kuhlen, Torsten W.},

Journal = {{V}irtuelle und {E}rweiterte {R}ealit\"at, 12. {W}orkshop der {GI}-{F}achgruppe {VR}/{AR}},

Year = {2015},

Pages = {10-21},

ISBN = {978-3-8440-3868-2},

Publisher = {Shaker Verlag}

}

Advanced Virtual Reality and Visualization Support for Factory Layout Planning

Recently, more and more Virtual Reality (VR) and visualization solutions to support the factory layout planning process have been presented. On the one hand, VR enables planners to create cost-effective virtual prototypes and to perform virtual walkthroughs, e.g., to verify proposed layouts. On the other hand, visualization helps to gain insight into simulation results that, e.g., describe the various interdependencies between machines, such as material flows. In order to create truly effective tools based on VR and visualization, the right techniques have to be chosen and adapted to the specific problem. However, the solutions published so far usually do not exploit these technologies to their full potential.

To address this situation, we present a VR-based planning assistant that offers advanced visualization functionality that furthers the understanding of planning-relevant parameters, while also relying on established techniques. In order to realize a useful approach, the assistant fulfills three central requirements:

- A smooth integration of the assistant into existing workflows is essential in order to not disrupt them. Consequently, existing tools need to be properly integrated and a mechanism for data exchange with these tools has to be provided.

- Visualization is the main means of facilitating insight. Instead of only displaying factory models, advanced techniques to visualize more abstract quantities, like material flows or process chains, have to be provided.

- VR systems vary in the degree of immersion they offer, ranging from non-immersive desktop systems to fully immersive Cave Automatic Virtual Environment (CAVE) systems. Scalability among these systems allows adapting high-end installations as well as cost-effective solutions. However, to ensure good scalability, devising a flexible system abstraction and a unified interaction concept is essential.

The basis for our planning assistant is an immersive VR (IVR) system in the form of a CAVE. Our solution allows performing virtual walkthroughs and offers additional visualization techniques for planning-relevant data.

A 3D Collaborative Virtual Environment to Integrate Immersive Virtual Reality into Factory Planning Processes

In the recent past, efforts have been made to adopt immersive virtual reality (IVR) systems as a means for design reviews in factory layout planning. While several solutions for this scenario have been developed, their integration into existing planning workflows has not been discussed yet. From our own experience of developing such a solution, we conclude that the use of IVR systems, like CAVEs, is rather disruptive to existing workflows. One major reason for this is that IVR systems are not available everywhere due to their high costs and large physical footprint. As a consequence, planners have to travel to sites offering such systems, which is especially prohibitive as planners are usually geographically dispersed. In this paper, we present a concept for integrating IVR systems into the factory planning process by means of a 3D collaborative virtual environment (3DCVE) without disrupting the underlying planning workflow. The goal is to combine non-immersive and IVR systems to facilitate collaborative walkthrough sessions. However, this scenario poses unique challenges to interactive collaborative work that, to the best of our knowledge, have not been addressed so far. In this regard, we discuss approaches to viewpoint sharing, telepointing, and annotation support that are geared towards distributed heterogeneous 3DCVEs.

An Evaluation of a Smart-Phone-Based Menu System for Immersive Virtual Environments

System control is a crucial task for many virtual reality applications and can be realized in a broad variety of ways, the most common of which is the use of graphical menus. These are often implemented as part of the virtual environment, but can also be displayed on mobile devices. Until now, many systems and studies have been published on using mobile devices such as personal digital assistants (PDAs) to realize such menu systems. However, most of these systems were proposed long before smartphones existed and evolved into everyday companions for many people. Thus, it is worthwhile to evaluate the applicability of modern smartphones as carriers of menu systems for immersive virtual environments. To do so, we implemented a platform-independent menu system for smartphones and evaluated it in two different ways. First, we performed an expert review in order to identify potential design flaws and to test the applicability of the approach for demonstrations of VR applications from a demonstrator's point of view. Second, we conducted a user study with 21 participants to test user acceptance of the menu system. The results of the two studies were contradictory: while experts appreciated the system very much, user acceptance was lower than expected. From these results, we could draw conclusions on how smartphones should be used to realize system control in virtual environments, and we could identify starting points for future research on the topic.

Integration of VR and Visualization Tools to Foster the Factory Planning Process

Recently, virtual reality (VR) and visualization have been increasingly employed to facilitate various tasks in factory planning processes. One major challenge in this context lies in the exchange of information between expert groups concerned with distinct planning tasks in order to make planners aware of inter-dependencies. For example, changes to the configuration of individual machines can have an effect on the overall production performance and vice versa. To this end, we developed VR- and visualization-based planning tools for two distinct planning tasks for which we present an integration concept that facilitates information exchange between these tools. The first application's goal is to facilitate layout planning by means of a CAVE system. The high degree of immersion offered by this system allows users to judge spatial relations in entire factories through cost-effective virtual walkthroughs. Additionally, information like material flow data can be visualized within the virtual environment to further assist planners to comprehensively evaluate the factory layout. Another application focuses on individual machines with the goal to help planners find ideal configurations by providing a visualization solution to explore the multi-dimensional parameter space of a single machine. This is made possible through the use of meta-models of the parameter space that are then visualized by means of the concept of Hyperslice. In this paper we present a concept that shows how these applications can be integrated into one comprehensive planning tool that allows for planning factories while considering factors of different planning levels at the same time. The concept is backed by Virtual Production Intelligence (VPI), which integrates data from different levels of factory processes, while including additional data sources and algorithms to provide further information to be used by the applications. 
In conclusion, we present an integration concept for VR- and visualization-based software tools that facilitates the communication of interdependencies between different factory planning tasks. As a first step towards creating a comprehensive factory planning solution, we demonstrate the integration of the aforementioned two use cases by applying VPI. Finally, we review the proposed concept by discussing its benefits and pointing out potential implementation pitfalls.

Poster: Guided Tour Creation in Immersive Virtual Environments

Guided tours have been found to be a good approach to introducing users to previously unknown virtual environments and to giving them access to relevant points of interest. Two important tasks during the creation of guided tours are the definition of views onto relevant information and their arrangement into an order in which they are to be visited. To allow a maximum of flexibility, an interactive approach to these tasks is desirable. To this end, we present and evaluate two approaches to the mentioned interaction tasks in this paper. The first approach is a hybrid 2D/3D interaction metaphor in which a tracked tablet PC is used as a virtual digital camera that allows users to specify and order views onto the scene. The second one is a purely 3D version of the first one, which does not require a tablet PC. Both approaches were compared in an initial user study, whose results indicate a superiority of the 3D over the hybrid approach.

@InProceedings{Pick2014,

Title = {{P}oster: {G}uided {T}our {C}reation in {I}mmersive {V}irtual {E}nvironments},

Author = {Sebastian Pick and Andrea B\"{o}nsch and Irene Tedjo-Palczynski and Bernd Hentschel and Torsten Kuhlen},

Booktitle = {IEEE Symposium on 3D User Interfaces (3DUI), 2014},

Year = {2014},

Month = {March},

Pages = {151-152},

Doi = {10.1109/3DUI.2014.6798865},

Url = {http://ieeexplore.ieee.org/xpl/abstractReferences.jsp?arnumber=6798865}

}

Virtual Air Traffic System Simulation - Aiding the Communication of Air Traffic Effects

A key aspect of air traffic infrastructure projects is the communication between stakeholders during the approval process regarding their environmental impact. Yet, established means of communication have been found to be rather incomprehensible. In this paper, we present an application that addresses these communication issues by enabling the exploration of airplane noise emissions in the vicinity of airports in a virtual environment (VE). The VE is composed of a model of the airport area and flight movement data. We combine a real-time 3D auralization approach with visualization techniques to allow for intuitive access to noise emissions. Specifically designed interaction techniques help users to easily explore and compare air traffic scenarios.

Extended Pie Menus for Immersive Virtual Environments

Pie menus are a well-known technique for interacting with 2D environments, and a large body of research documents their usage and optimization. Yet, comparatively little research has been done on the usability of pie menus in immersive virtual environments (IVEs). In this paper, we reduce this gap by presenting an implementation and evaluation of an extended hierarchical pie menu system for IVEs that can be operated with a six-degrees-of-freedom input device. Following an iterative development process, we first developed and evaluated a basic hierarchical pie menu system. To better understand how pie menus should be operated in IVEs, we tested this system in a pilot user study with 24 participants that focused on item selection. Based on the results of the study, the system was tweaked, and elements like check boxes, sliders, and color map editors were added to provide extended functionality. An expert review with five experts was performed with the extended pie menus integrated into an existing VR application to identify potential design issues. Overall results indicated high performance and efficient design.

Efficiently Navigating Data Sets Using the Hierarchy Browser

A major challenge in Virtual Reality is to enable users to efficiently explore virtual environments, regardless of prior knowledge. This is particularly true for complex virtual scenes containing a huge number of potential areas of interest. Providing the user with convenient access to these areas is of prime importance, just like helping her to orient herself in the virtual scene. Techniques exist for either aspect, but combining them into one holistic system is not trivial. To address this issue, we present the Hierarchy Browser. It supports the user in creating a mental image of the scene. This is done by offering a well-arranged, hierarchical visual representation of the scene structure as well as interaction techniques to browse it. Additional interaction techniques allow the user to trigger scene manipulations, e.g., automated travel to a desired area of interest. We evaluate the Hierarchy Browser by means of an expert walkthrough.

@Article{Boensch2011,

Title = {{E}fficiently {N}avigating {D}ata {S}ets {U}sing the {H}ierarchy {B}rowser},

Author = {Andrea B\"{o}nsch and Sebastian Pick and Bernd Hentschel and Torsten Kuhlen},

Journal = {{V}irtuelle und {E}rweiterte {R}ealit\"at, 8. {W}orkshop der {GI}-{F}achgruppe {VR}/{AR}},

Year = {2011},

Pages = {37-48},

ISBN = {978-3-8440-0394-9},

Publisher = {Shaker Verlag}

}