Publications

Insite: A Pipeline Enabling In-Transit Visualization and Analysis for Neuronal Network Simulations

Neuronal network simulators are central to computational neuroscience, enabling the study of the nervous system through in-silico experiments. Through the utilization of high-performance computing resources, these simulators are capable of simulating increasingly complex and large networks of neurons today. Yet, the increased capabilities introduce a challenge to the analysis and visualization of the simulation results. In this work, we propose a pipeline for in-transit analysis and visualization of data produced by neuronal network simulators. The pipeline is able to couple with simulators, enabling querying, filtering, and merging data from multiple simulation instances. Additionally, the architecture allows user-defined plugins that perform analysis tasks in the pipeline. The pipeline applies traditional REST API paradigms and utilizes data formats such as JSON to provide easy access to the generated data for visualization and further processing. We present and assess the proposed architecture in the context of neuronal network simulations generated by the NEST simulator.

@InProceedings{10.1007/978-3-031-23220-6_20,

author="Kr{\"u}ger, Marcel and Oehrl, Simon and Demiralp, Ali C. and Spreizer, Sebastian and Bruchertseifer, Jens and Kuhlen, Torsten W. and Gerrits, Tim and Weyers, Benjamin",

editor="Anzt, Hartwig and Bienz, Amanda and Luszczek, Piotr and Baboulin, Marc",

title="Insite: A Pipeline Enabling In-Transit Visualization and Analysis for Neuronal Network Simulations",

booktitle="High Performance Computing. ISC High Performance 2022 International Workshops",

year="2022",

publisher="Springer International Publishing",

address="Cham",

pages="295--305",

isbn="978-3-031-23220-6"

}

Performance Assessment of Diffusive Load Balancing for Distributed Particle Advection

Particle advection is the approach for the extraction of integral curves from vector fields. Efficient parallelization of particle advection is a challenging task due to the problem of load imbalance, in which processes are assigned unequal workloads, causing some of them to idle while the others are still computing. Various approaches to load balancing exist, yet they all involve trade-offs such as increased inter-process communication, or the need for central control structures. In this work, we present two local load balancing methods for particle advection based on the family of diffusive load balancing. Each process has access to the blocks of its neighboring processes, which enables dynamic sharing of the particles based on a metric defined by the workload of the neighborhood. The approaches are assessed in terms of strong and weak scaling as well as load imbalance. We show that the methods reduce the total run-time of advection and are promising with regard to scaling as they operate locally on isolated process neighborhoods.
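The general idea behind diffusive load balancing can be illustrated with a minimal sketch. It is not one of the two methods from the paper, whose workload metric is not given here. It is the textbook first-order diffusion scheme, and the process topology, the `alpha` coefficient, and all function names are assumptions made for illustration.

```python
def diffuse_step(loads, neighbors, alpha=0.25):
    """Apply one first-order diffusion step to per-process loads.

    Each process i shifts alpha * (load_j - load_i) for every neighbor j,
    using only information from its own neighborhood.
    """
    new_loads = list(loads)
    for i, nbrs in neighbors.items():
        for j in nbrs:
            new_loads[i] += alpha * (loads[j] - loads[i])
    return new_loads


def imbalance(loads):
    """Load imbalance factor: maximum load divided by mean load (1.0 is ideal)."""
    return max(loads) / (sum(loads) / len(loads))


# Hypothetical setup: four processes in a line (0-1-2-3), all particles on process 0.
neighbors = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
initial_loads = [1000.0, 0.0, 0.0, 0.0]

final_loads = initial_loads
for _ in range(50):
    final_loads = diffuse_step(final_loads, neighbors)

print(f"imbalance before: {imbalance(initial_loads):.3f}")
print(f"imbalance after:  {imbalance(final_loads):.3f}")
```

Because every exchange along an edge is symmetric, the total number of particles is conserved, and no central coordinator is involved. A real implementation would move whole particles between processes rather than fractional loads.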

Astray: A Performance-Portable Geodesic Ray Tracer

Geodesic ray tracing is the numerical method to compute the motion of matter and radiation in spacetime. It enables visualization of the geometry of spacetime and is an important tool to study the gravitational fields in the presence of astrophysical phenomena such as black holes. Although the method is largely established, solving the geodesic equation remains a computationally demanding task. In this work, we present Astray, a high-performance geodesic ray tracing library capable of running on a single or a cluster of computers equipped with compute or graphics processing units. The library is able to visualize any spacetime given its metric tensor and contains optimized implementations of a wide range of spacetimes, including commonly studied ones such as Schwarzschild and Kerr. The performance of the library is evaluated on standard consumer hardware as well as a compute cluster through strong and weak scaling benchmarks. The results indicate that the system is capable of reaching interactive frame rates with increasing use of high-performance computing resources. We further introduce a user interface capable of remote rendering on a cluster for interactive visualization of spacetimes.

@inproceedings {10.2312:vmv.20221208,

booktitle = {Vision, Modeling, and Visualization},

editor = {Bender, Jan and Botsch, Mario and Keim, Daniel A.},

title = {{Astray: A Performance-Portable Geodesic Ray Tracer}},

author = {Demiralp, Ali Can and Krüger, Marcel and Chao, Chu and Kuhlen, Torsten W. and Gerrits, Tim},

year = {2022},

publisher = {The Eurographics Association},

ISBN = {978-3-03868-189-2},

DOI = {10.2312/vmv.20221208}

}

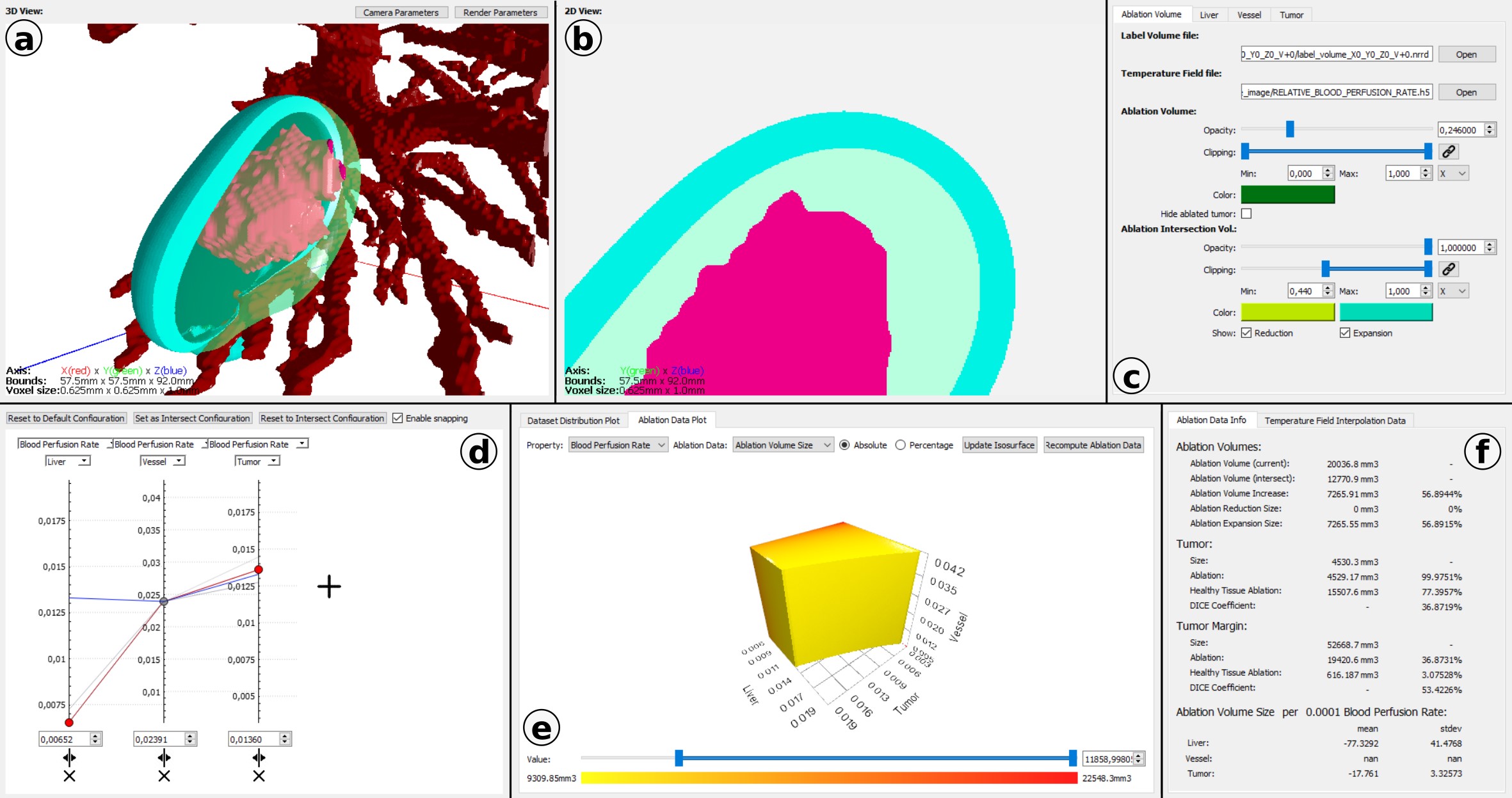

Studying the Effect of Tissue Properties on Radiofrequency Ablation by Visual Simulation Ensemble Analysis

Radiofrequency ablation is a minimally invasive, needle-based medical treatment to ablate tumors by heating due to absorption of radiofrequency electromagnetic waves. To ensure the complete target volume is destroyed, radiofrequency ablation simulations are required for treatment planning. However, the choice of tissue properties used as parameters during simulation induces a high uncertainty, as the tissue properties are strongly patient-dependent. To capture this uncertainty, a simulation ensemble can be created. Understanding the dependency of the simulation outcome on the input parameters helps to create improved simulation ensembles by focusing on the main sources of uncertainty and, thus, reducing computation costs. We present an interactive visual analysis tool for radiofrequency ablation simulation ensembles to target this objective. Spatial 2D and 3D visualizations allow for the comparison of ablation results of individual simulation runs and for the quantification of differences. Simulation runs can be interactively selected based on a parallel coordinates visualization of the parameter space. A 3D parameter space visualization allows for the analysis of the ablation outcome when altering a selected tissue property for the three tissue types involved in the ablation process. We discuss our approach with domain experts working on the development of new simulation models and demonstrate the usefulness of our approach for analyzing the influence of different tissue properties on radiofrequency ablations.

Honorable Mention Award!

@inproceedings {10.2312:vcbm.20221187,

booktitle = {Eurographics Workshop on Visual Computing for Biology and Medicine},

editor = {Renata G. Raidou and Björn Sommer and Torsten W. Kuhlen and Michael Krone and Thomas Schultz and Hsiang-Yun Wu},

title = {{Studying the Effect of Tissue Properties on Radiofrequency Ablation by Visual Simulation Ensemble Analysis}},

author = {Heimes, Karl and Evers, Marina and Gerrits, Tim and Gyawali, Sandeep and Sinden, David and Preusser, Tobias and Linsen, Lars},

year = {2022},

publisher = {The Eurographics Association},

ISSN = {2070-5786},

ISBN = {978-3-03868-177-9},

DOI = {10.2312/vcbm.20221187}

}

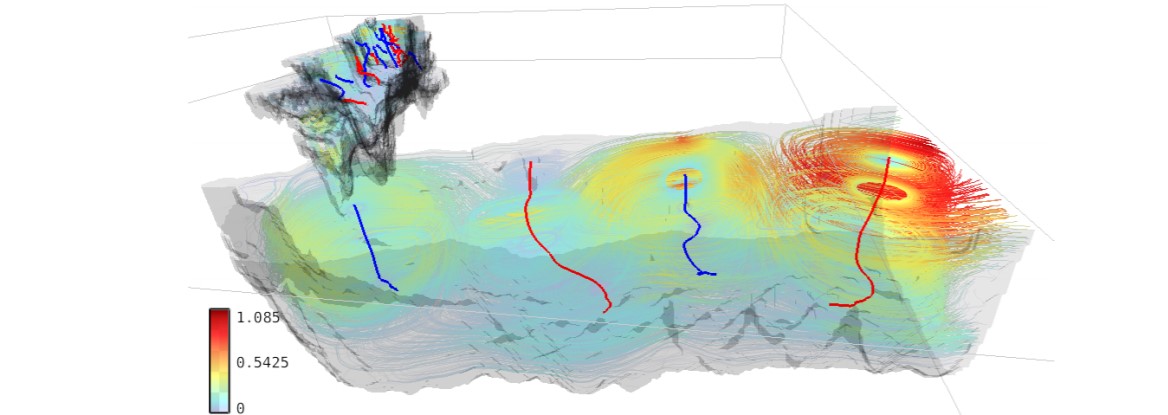

Multifaceted Visual Analysis of Oceanographic Simulation Ensemble Data

The analysis of multirun oceanographic simulation data imposes various challenges ranging from visualizing multifield spatio-temporal data over properly identifying and depicting vortices to visually representing uncertainties. We present an integrated interactive visual analysis tool that enables us to overcome these challenges by employing multiple coordinated views of different facets of the data at different levels of aggregation.

@ARTICLE {9495240,

author = {H. Rave and J. Fincke and S. Averkamp and B. Tangerding and L. P. Wehrenberg and T. Gerrits and K. Huesmann and S. Leistikow and L. Linsen},

journal = {IEEE Computer Graphics and Applications},

title = {Multifaceted Visual Analysis of Oceanographic Simulation Ensemble Data},

year = {2022},

volume = {42},

number = {04},

issn = {1558-1756},

pages = {80-88},

abstract = {The analysis of multirun oceanographic simulation data imposes various challenges ranging from visualizing multifield spatio-temporal data over properly identifying and depicting vortices to visually representing uncertainties. We present an integrated interactive visual analysis tool that enables us to overcome these challenges by employing multiple coordinated views of different facets of the data at different levels of aggregation.},

keywords = {visualization;data visualization;data models;uncertainty;salinity (geophysical);correlation;rendering (computer graphics)},

doi = {10.1109/MCG.2021.3098096},

publisher = {IEEE Computer Society},

address = {Los Alamitos, CA, USA},

month = {jul}

}

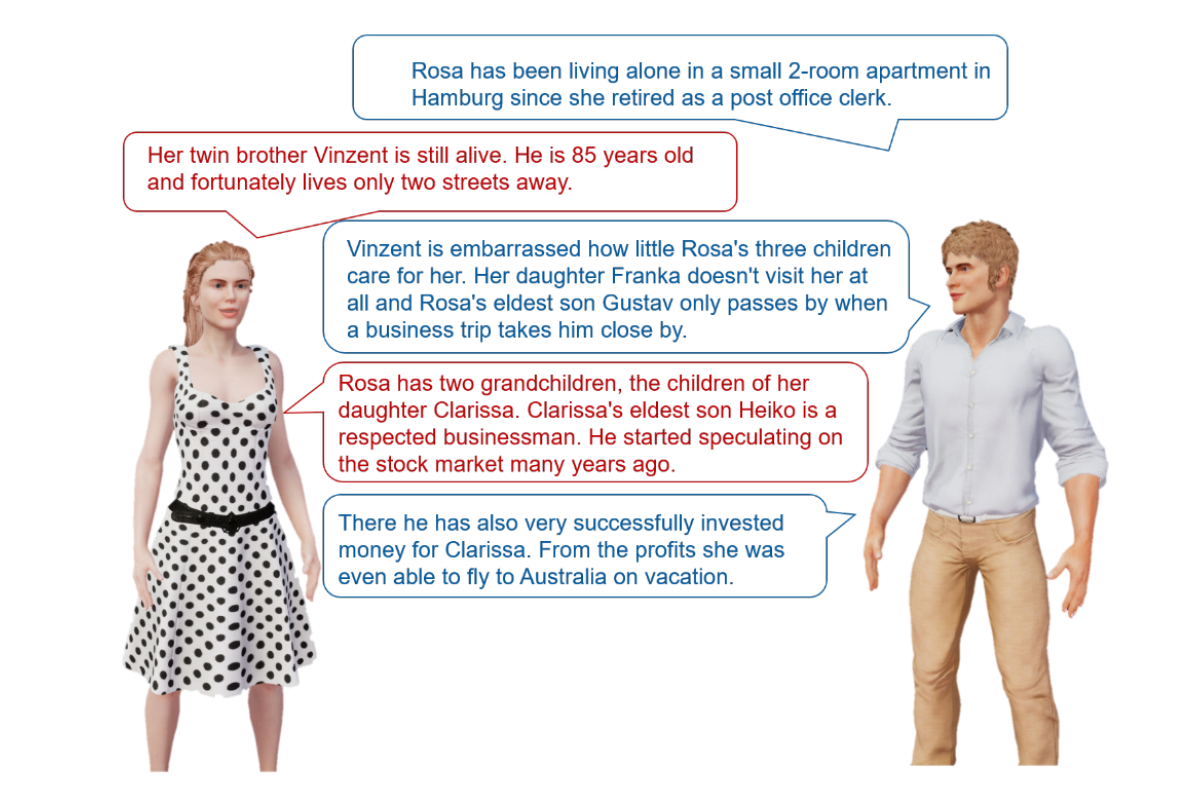

Verbal Interactions with Embodied Conversational Agents

Embedding virtual humans into virtual reality (VR) applications can fulfill diverse needs. These so-called embodied conversational agents (ECAs) can simply enliven the virtual environments, act for example as training partners, tutors, or therapists, or serve as advanced (emotional) user interfaces to control immersive systems. The latter case is of special interest since we as human users are particularly good at interpreting other humans. ECAs can enhance their verbal communication with non-verbal behavior and thereby make communication more efficient. For example, backchannels, like nodding or signaling not understanding, can be used to give feedback while a user is speaking. Furthermore, gestures, gaze, posture, proxemics, and many more non-verbal behaviors can be applied. Additionally, turn-taking can be streamlined when the ECA understands when to take over the turn and signals willingness to yield it once done. While many of these aspects are already under investigation from very different disciplines, operationalizing those into versatile, virtually embodied human-computer interfaces remains an open challenge.

To this end, I conducted several studies investigating acoustical effects of ECAs' speech, both with regard to the auralization in the virtual environment and the speech signals used. Furthermore, I want to find guidelines for expressing both turn-taking and various backchannels that make interactions with such advanced embodied interfaces more efficient and pleasant, both when the ECA is speaking and during listening. Additionally, measuring social presence (i.e., the feeling of being there and interacting with a "real" person) is an important instrument for this kind of research, since I want to facilitate exactly those subconscious processes of understanding other humans, which we as humans are particularly good at. Therefore, I want to investigate objective measures for social presence.

@inproceedings{Ehret2022a,

author = {Ehret, Jonathan},

booktitle = {Doctoral Consortium at the 22nd ACM International Conference on Intelligent Virtual Agents},

title = {{Doctoral Consortium: Verbal Interactions with Embodied Conversational Agents}},

year = {2022}

}

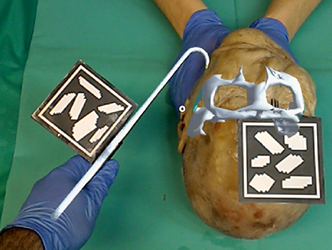

Augmented Reality-Based Surgery on the Human Cadaver Using a New Generation of Optical Head-Mounted Displays: Development and Feasibility Study

**Background:** Although nearly one-third of the world’s disease burden requires surgical care, only a small proportion of digital health applications are directly used in the surgical field. In the coming decades, the application of augmented reality (AR) with a new generation of optical-see-through head-mounted displays (OST-HMDs) like the HoloLens (Microsoft Corp) has the potential to bring digital health into the surgical field. However, for the application to be performed on a living person, proof of performance must first be provided due to regulatory requirements. In this regard, cadaver studies could provide initial evidence.

**Objective:** The goal of the research was to develop an open-source system for AR-based surgery on human cadavers using freely available technologies.

**Methods:** We tested our system using an easy-to-understand scenario in which fractured zygomatic arches of the face had to be repositioned with visual and auditory feedback to the investigators using a HoloLens. Results were verified with postoperative imaging and assessed in a blinded fashion by 2 investigators. The developed system and scenario were qualitatively evaluated by consensus interview and individual questionnaires.

**Results:** The development and implementation of our system was feasible and could be realized in the course of a cadaver study. The AR system was found helpful by the investigators for spatial perception in addition to the combination of visual as well as auditory feedback. The surgical end point could be determined metrically as well as by assessment.

**Conclusions:** The development and application of an AR-based surgical system using freely available technologies to perform OST-HMD–guided surgical procedures in cadavers is feasible. Cadaver studies are suitable for OST-HMD–guided interventions to measure a surgical end point and provide an initial data foundation for future clinical trials. The availability of free systems for researchers could be helpful for a possible translation process from digital health to AR-based surgery using OST-HMDs in the operating theater via cadaver studies.

@article{puladi2022augmented,

title={Augmented Reality-Based Surgery on the Human Cadaver Using a New Generation of Optical Head-Mounted Displays: Development and Feasibility Study},

author={Puladi, Behrus and Ooms, Mark and Bellgardt, Martin and Cesov, Mark and Lipprandt, Myriam and Raith, Stefan and Peters, Florian and M{\"o}hlhenrich, Stephan Christian and Prescher, Andreas and H{\"o}lzle, Frank and others},

journal={JMIR Serious Games},

volume={10},

number={2},

pages={e34781},

year={2022},

publisher={JMIR Publications Inc., Toronto, Canada}

}

Quantitative Mapping of Keratin Networks in 3D

Mechanobiology requires precise quantitative information on processes taking place in specific 3D microenvironments. Connecting the abundance of microscopical, molecular, biochemical, and cell mechanical data with defined topologies has turned out to be extremely difficult. Establishing such structural and functional 3D maps needed for biophysical modeling is a particular challenge for the cytoskeleton, which consists of long and interwoven filamentous polymers coordinating subcellular processes and interactions of cells with their environment. To date, useful tools are available for the segmentation and modeling of actin filaments and microtubules but comprehensive tools for the mapping of intermediate filament organization are still lacking. In this work, we describe a workflow to model and examine the complete 3D arrangement of the keratin intermediate filament cytoskeleton in canine, murine, and human epithelial cells, both in vitro and in vivo. Numerical models are derived from confocal Airyscan high-resolution 3D imaging of fluorescence-tagged keratin filaments. They are interrogated and annotated at different length scales using different modes of visualization including immersive virtual reality. In this way, information is provided on network organization at the subcellular level including mesh arrangement, density, and isotropic configuration as well as details on filament morphology such as bundling, curvature, and orientation. We show that the comparison of these parameters helps to identify, in quantitative terms, similarities and differences of keratin network organization in epithelial cell types defining subcellular domains, notably basal, apical, lateral, and perinuclear systems. The described approach and the presented data are pivotal for generating mechanobiological models that can be experimentally tested.

@article {Windoffer2022,

article_type = {journal},

title = {{Quantitative Mapping of Keratin Networks in 3D}},

author = {Windoffer, Reinhard and Schwarz, Nicole and Yoon, Sungjun and Piskova, Teodora and Scholkemper, Michael and Stegmaier, Johannes and Bönsch, Andrea and Di Russo, Jacopo and Leube, Rudolf},

editor = {Coulombe, Pierre},

volume = 11,

year = 2022,

month = {feb},

pub_date = {2022-02-18},

pages = {e75894},

citation = {eLife 2022;11:e75894},

doi = {10.7554/eLife.75894},

url = {https://doi.org/10.7554/eLife.75894},

journal = {eLife},

issn = {2050-084X},

publisher = {eLife Sciences Publications, Ltd},

}

MODE: A modern ordinary differential equation solver for C++ and CUDA

Ordinary differential equations (ODEs) are used to describe the evolution of one or more dependent variables using their derivatives with respect to an independent variable. They arise in various branches of natural sciences and engineering. We present a modern, efficient, performance-oriented ODE solving library built in C++20. The library implements a broad range of multi-stage and multi-step methods, which are generated at compile-time from their tableau representations, avoiding runtime overhead. The solvers can be instantiated and iterated on the CPU and the GPU using identical code. This work introduces the prominent features of the library with examples.
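To illustrate what deriving a multi-stage method from its tableau representation means, the following minimal sketch builds an explicit Runge-Kutta step from a Butcher tableau. It does not use or mirror MODE's API: MODE does this in C++20 at compile time, whereas this sketch interprets the tableau at runtime, and the dictionary layout and function names are assumptions made for illustration.

```python
import math

# Butcher tableaus as plain data: "a" holds the strictly lower-triangular
# stage coefficients, "b" the output weights, "c" the stage time offsets.
FORWARD_EULER = {"a": [[]], "b": [1.0], "c": [0.0]}
RK4 = {
    "a": [[], [0.5], [0.0, 0.5], [0.0, 0.0, 1.0]],
    "b": [1 / 6, 1 / 3, 1 / 3, 1 / 6],
    "c": [0.0, 0.5, 0.5, 1.0],
}


def rk_step(f, t, y, h, tableau):
    """Advance y' = f(t, y) by one step of size h using an explicit tableau."""
    k = []  # stage derivatives computed so far
    for a_row, c_i in zip(tableau["a"], tableau["c"]):
        y_stage = y + h * sum(a_ij * k_j for a_ij, k_j in zip(a_row, k))
        k.append(f(t + c_i * h, y_stage))
    return y + h * sum(b_i * k_i for b_i, k_i in zip(tableau["b"], k))


def integrate(f, t0, y0, t1, steps, tableau):
    """Integrate from t0 to t1 with a fixed number of equal steps."""
    h = (t1 - t0) / steps
    t, y = t0, y0
    for _ in range(steps):
        y = rk_step(f, t, y, h, tableau)
        t += h
    return y


def decay(t, y):
    """Test problem y' = -y with exact solution y(t) = exp(-t)."""
    return -y


exact = math.exp(-1.0)
euler_error = abs(integrate(decay, 0.0, 1.0, 1.0, 10, FORWARD_EULER) - exact)
rk4_error = abs(integrate(decay, 0.0, 1.0, 1.0, 10, RK4) - exact)
print(f"forward Euler error: {euler_error:.2e}")
print(f"RK4 error:           {rk4_error:.2e}")
```

The same `rk_step` serves both methods, so switching solvers only means swapping the tableau data. The sketch handles scalar states only.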

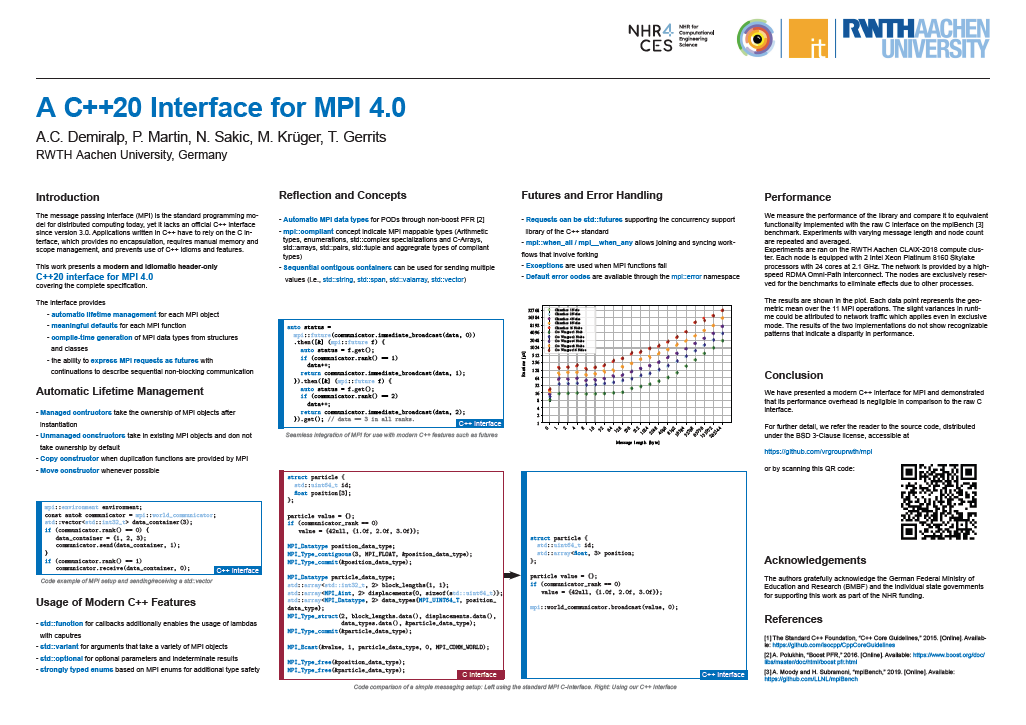

Poster: A C++20 Interface for MPI 4.0

We present a modern C++20 interface for MPI 4.0. The interface utilizes recent language features to ease development of MPI applications. An aggregate reflection system enables generation of MPI data types from user-defined classes automatically. Immediate and persistent operations are mapped to futures, which can be chained to describe sequential asynchronous operations and task graphs in a concise way. This work introduces the prominent features of the interface with examples. We further measure its performance overhead with respect to the raw C interface.

@misc{demiralp2023c20,

title={A C++20 Interface for MPI 4.0},

author={Ali Can Demiralp and Philipp Martin and Niko Sakic and Marcel Krüger and Tim Gerrits},

year={2023},

eprint={2306.11840},

archivePrefix={arXiv},

primaryClass={cs.DC}

}

Poster: Measuring Listening Effort in Adverse Listening Conditions: Testing Two Dual Task Paradigms for Upcoming Audiovisual Virtual Reality Experiments

Listening to and remembering the content of conversations is a highly demanding task from a cognitive-psychological perspective. Particularly, in adverse listening conditions cognitive resources available for higher-level processing of speech are reduced since increased listening effort consumes more of the overall available cognitive resources. Applying audiovisual Virtual Reality (VR) environments to listening research could be highly beneficial for exploring cognitive performance for overheard content. In this study, we therefore evaluated two (secondary) tasks concerning their suitability for measuring cognitive spare capacity as an indicator of listening effort in audiovisual VR environments. In two experiments, participants were administered a dual-task paradigm including a listening (primary) task in which a conversation between two talkers is presented, and an unrelated secondary task each. Both experiments were carried out without additional background noise and under continuous noise. We discuss our results in terms of guidance for future experimental studies, especially in audiovisual VR environments.

@InProceedings{ Mohanathasan2022ESCoP,

author = {Chinthusa Mohanathasan and Jonathan Ehret and Cosima A. Ermert and Janina Fels and Torsten Wolfgang Kuhlen and Sabine J. Schlittmeier},

booktitle = {22. Conference of the European Society for Cognitive Psychology, Lille, France, ESCoP},

title = {Measuring Listening Effort in Adverse Listening Conditions: Testing Two Dual Task Paradigms for Upcoming Audiovisual Virtual Reality Experiments},

year = {2022},

}

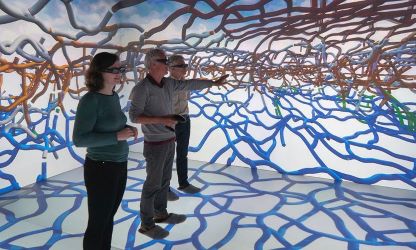

The aixCAVE at RWTH Aachen University

At a large technical university like RWTH Aachen, there is enormous potential to use VR as a tool in research. In contrast to applications from the entertainment sector, many scientific application scenarios - for example, a 3D analysis of result data from simulated flows - not only depend on a high degree of immersion, but also on a high resolution and excellent image quality of the display. In addition, the visual analysis of scientific data is often carried out and discussed in smaller teams. For these reasons, but also for simple ergonomic aspects (comfort, cybersickness), many technical and scientific VR applications cannot just be implemented on the basis of head-mounted displays. To this day, in VR Labs of universities and research institutions, it is therefore desirable to install immersive large-screen rear projection systems (CAVEs) in order to adequately support the scientists. Due to the high investment costs, such systems are used at larger universities such as Aachen, Cologne, Munich, or Stuttgart, often operated by the computing centers as a central infrastructure accessible to all scientists at the university.

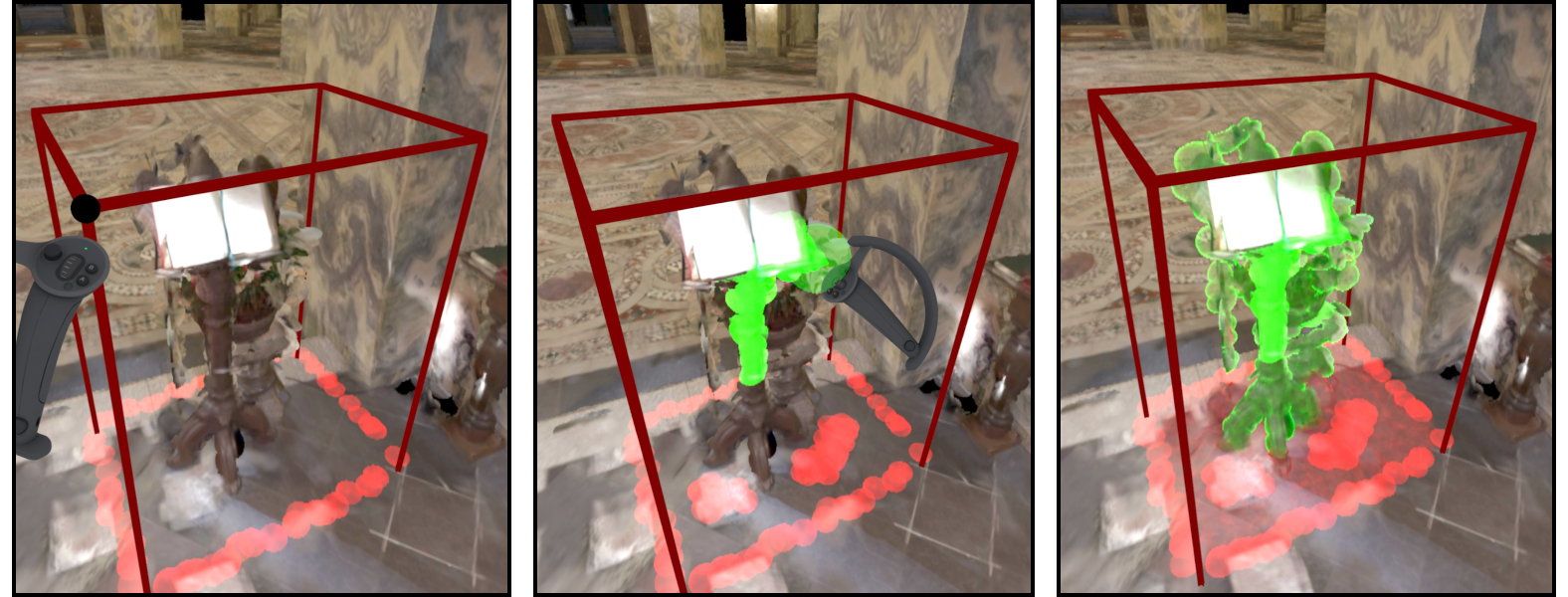

Interactive Segmentation of Textured Point Clouds

We present a method for the interactive segmentation of textured 3D point clouds. The problem is formulated as a minimum graph cut on a k-nearest neighbor graph and leverages the rich information contained in high-resolution photographs as the discriminative feature. We demonstrate that the achievable segmentation accuracy is significantly improved compared to using an average color per point as in prior work. The method is designed to work efficiently on large datasets and yields results at interactive rates. This way, an interactive workflow can be realized in an immersive virtual environment, which supports the segmentation task by improved depth perception and the use of tracked 3D input devices. Our method enables the fast and convenient creation of high-quality segmentations of textured point clouds.

@inproceedings {10.2312:vmv.20221200,

booktitle = {Vision, Modeling, and Visualization},

editor = {Bender, Jan and Botsch, Mario and Keim, Daniel A.},

title = {{Interactive Segmentation of Textured Point Clouds}},

author = {Schmitz, Patric and Suder, Sebastian and Schuster, Kersten and Kobbelt, Leif},

year = {2022},

publisher = {The Eurographics Association},

ISBN = {978-3-03868-189-2},

DOI = {10.2312/vmv.20221200}

}

Late-Breaking Report: Natural Turn-Taking with Embodied Conversational Agents

Adding embodied conversational agents (ECAs) to immersive virtual environments (IVEs) becomes relevant in various application scenarios, for example, conversational systems. For successful interactions with these ECAs, they have to behave naturally, i.e. in the way a user would expect a real human to behave. Teaming up with acousticians and psychologists, we strive to explore turn-taking in VR-based interactions between either two ECAs or an ECA and a human user.

Late-Breaking Report: An Embodied Conversational Agent Supporting Scene Exploration by Switching between Guiding and Accompanying

In this late-breaking report, we first motivate the requirement of an embodied conversational agent (ECA) who combines characteristics of a virtual tour guide and a knowledgeable companion in order to allow users an interactive and adaptable, yet structured, exploration of an unknown immersive, architectural environment. Second, we roughly outline our proposed ECA’s behavioral design followed by a teaser on the planned user study.
