Research Areas

While selected projects of our past research can be found here, this page presents some examples of our current research projects. If you are interested in one of the topics, please contact the indicated person for further details and check our publications page, where you can find papers and additional download material.

Our work is characterized by basic as well as application-oriented research in collaboration with other RWTH institutes from multiple faculties, Forschungszentrum Jülich, industrial companies, and other research groups from around the world in largely third-party funded interdisciplinary joint projects.

Virtual Reality (VR) has proven its potential to provide an innovative human-computer interface, from which multiple application areas can profit. The VR application fields we are working on comprise architecture, mechanical engineering, medicine, life science, psychology, and more.

Specifically, we perform research in multimodal 3D interaction technology, immersive 3D visualization of complex simulation data, parallel visualization algorithms, and virtual product development. In combination with our service portfolio, we are thus able to inject VR technology and methodology as a powerful tool into scientific and industrial workflows and to create synergy effects by promoting collaborations between institutes with similar areas of VR applications.

If you are interested in a cooperation or in using our VR infrastructure, please contact us via e-mail: info@vr.rwth-aachen.de

Examples of Past Research Projects

Selected projects of our past research can be found here.

Examples of Current Research Projects

Arts

Collaborative VR

Foundational Research

Higher Education

HMD Technologies

- Advanced Rendering Techniques for Virtual Reality

- Low Latency Streaming and Advanced Reprojection Techniques for Wireless VR Experiences

Neuroscience

- The Human Brain Project - Interactive Visualization, Analysis, and Control

- Parallel Particle Advection and Finite-Time Lyapunov Field Extraction on Brain Data

- Dynamic Load Balancing for Parallel Particle Advection

Production Technology & Engineering

- BugWright2 - Autonomous Robotic Inspection and Maintenance on Ship Hulls and Storage Tanks

- The Cluster of Excellence: Internet of Production - Immersive Visualization of Artificial Neural Networks

- VITAMINE_5G: VIrtual realiTy environment for Additive ManufacturINg Enabled by 5G

Social VR & Psychology

- AUDICTIVE - Listening to, and Remembering Conversations Between two Talkers

- VoLAix - A VR-based Investigation of the Speaker’s Voice and Dynamically Embedded Background Sounds on Listening Effort in University Students

- Social Locomotion with Virtual Agents

- Speech and Gestures of Embodied Conversational Agents

- Personal Space in Social Virtual Reality

| funding | German Research Foundation (DFG) |

| contact person | Martin Bellgardt |

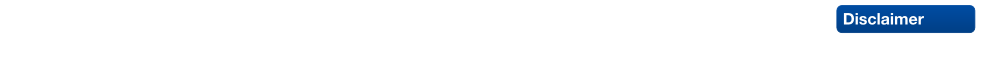

Within the Cluster of Excellence: Internet of Production, a major focus of ours is artificial neural networks (ANNs). They have sparked great interest in nearly all scientific disciplines in recent years and achieve unprecedented accuracy in many of the modeling tasks they are applied to. Unfortunately, their main downside is the so-called "black box problem": it is not yet possible to extract the knowledge learned by ANNs in order to gain insights into the underlying processes.

We approach this problem through immersive visualization, drawing the network as a node-link diagram in three dimensions. By investigating techniques to lay out, filter, and interact with ANNs and their data in immersive environments, we hope to achieve interactive visual representations that allow for meaningful insights into their inner workings. Potential benefits include a better understanding of ANNs and their hyperparameters, better explainability of the reasons for their decisions, or even insights into the physical processes underlying the tasks they solve.


| ANN as a node-link diagram in three dimensions |
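
As a rough illustration of the layout and filtering steps mentioned above, the following sketch places the neurons of each layer on a square grid, stacks the layers along one axis, and keeps only links whose weight magnitude exceeds a threshold. The function names, the grid arrangement, and the threshold rule are assumptions made for this example and are not taken from the project itself.

```python
import math
import numpy as np

def layer_layout(layer_sizes, layer_gap=2.0, spacing=0.5):
    """Return one (n, 3) array of node positions per layer.

    Hypothetical layout: layers are stacked along x, and the neurons of a
    layer are arranged on a centered square grid in the y-z plane.
    """
    positions = []
    for i, n in enumerate(layer_sizes):
        side = math.ceil(math.sqrt(n))           # grid width for this layer
        idx = np.arange(n)
        y = (idx % side - (side - 1) / 2) * spacing
        z = (idx // side - (side - 1) / 2) * spacing
        x = np.full(n, i * layer_gap)
        positions.append(np.stack([x, y, z], axis=1))
    return positions

def strong_links(weights, threshold):
    """Return (source, target) index pairs whose |weight| exceeds threshold."""
    src, dst = np.nonzero(np.abs(weights) > threshold)
    return list(zip(src.tolist(), dst.tolist()))
```

Filtering by weight magnitude is only one possible criterion; for dense networks, some form of filtering is likely needed to keep a node-link diagram readable.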


| funding | Ministry of Culture and Science of the State of North Rhine-Westphalia |

| contact person | Tim Weißker |

Additive manufacturing is a relatively new and unconventional method in the field of production technology that has attracted more and more interest in recent years. It is increasingly used in various stages of the product life cycle such as initial manufacturing, post-processing, and repair. At present, however, additive manufacturing relies heavily on experts because processes are (1) not yet based on broad historical experience as with other technologies and (2) difficult to access for interim inspections and adjustments.

To address these challenges, the project "VITAMINE_5G" develops novel methods for monitoring, understanding, and adapting additive manufacturing processes. Process engineers are provided with a digital twin of the machine in Virtual Reality, which is controlled by sensor data collected in the real counterpart. Additional situated as well as embedded data visualizations complement the digital twin, providing further insights into the process that could not be gained in the real world. To transmit the large amounts of data required for interactive visualization in real time, 5G wireless network technology will serve as the backbone to ensure high data rates, low latency, and low jitter no matter where the process engineer is located.

This interdisciplinary project combines experts from research and industry in the fields of production engineering, sensor technology, networking, optimization, and human-computer interaction. The results provide guidelines for process monitoring in virtual reality and benchmark data on the performance of current communication technologies, which will serve as a basis for future developments by other researchers and engineers.


| Virtual reality enables the immersive exploration of a digital twin representing an ongoing additive manufacturing process |


| funding | German Research Foundation (Deutsche Forschungsgemeinschaft, DFG) |

| contact person | Tim Weißker |

Teleportation has emerged as one of the most widely adopted forms of travel through immersive virtual environments as it minimizes the occurrence of sickness symptoms for many users. Despite its significance, however, several major research questions on the foundational building blocks that make up these techniques remain unanswered, with many people resorting to ground-restricted teleportation with default parameters as offered by their development platform. In this research project, we deepen our understanding of teleportation interfaces by investigating different target specification metaphors and pre-travel information and their effects on travel precision, efficiency, usability, and predictability. Based on these results, we seamlessly extend conventional teleportation techniques to enable users to gain novel perspectives on the scene, travel to targets more expeditiously, and maneuver around objects of interest more efficiently. Given the already widespread use of basic teleportation in head-mounted displays, these foundational research insights will have a profound impact on a large variety of academic and industrial virtual reality applications.


| funding | internal |

| contact person | Sevinc Eroglu |

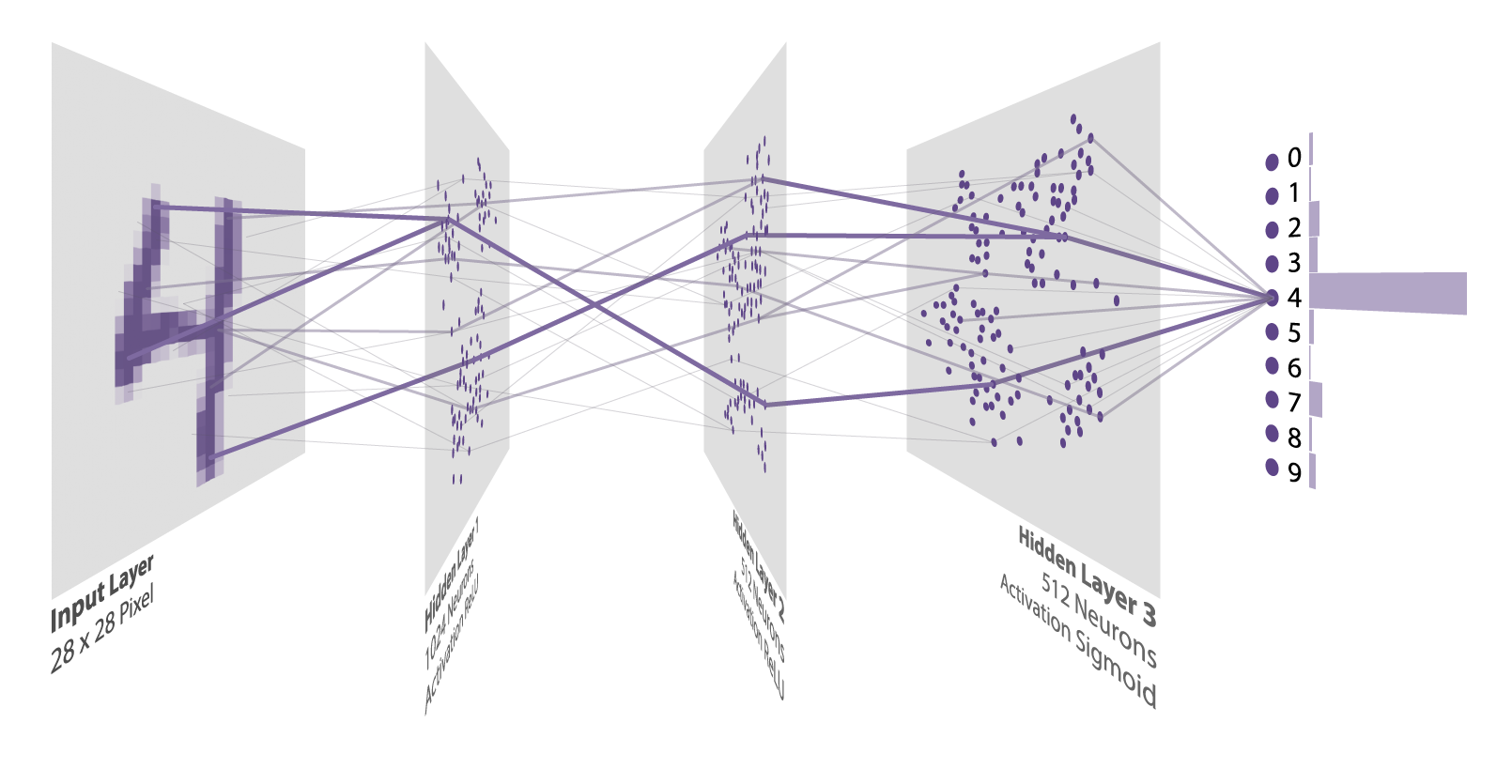

Artists' demand for VR as an art medium is growing. Artistic creation while being fully immersed in the virtual art piece is an exciting new possibility that opens new research opportunities with challenging user interface design questions. Painting and sculpting applications in VR are emerging, which enable artists to create their artwork directly in VR and resemble common 3D modeling tools. However, to create new 3D compositions in VR out of existing 2D artworks, artists are bound to employ existing desktop-based content creation tools. This requires manual work and sufficient knowledge of expert modeling environments.

To close this gap, we created "Rilievo", a virtual authoring environment that enables the effortless conversion of 2D images into volumetric 3D objects. Artistic elements in the input material are extracted with a convenient VR-based segmentation tool. Relief sculpting is then performed by interactively mixing different height maps. These are automatically generated from the input image structure and appearance. A prototype of the tool is showcased in an analog-virtual artistic workflow in collaboration with a traditional painter. It combines the expressiveness of analog painting and sculpting with the creative freedom of spatial arrangement in VR.
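
The idea of mixing several automatically generated height maps can be sketched as a weighted blend. In this minimal example, the two candidate maps (luminance and normalized gradient magnitude) and all function names are assumptions chosen for illustration; the text above does not specify which image features Rilievo actually uses.

```python
import numpy as np

def luminance_map(rgb):
    """Height from brightness: Rec. 709 luma of an (H, W, 3) image in [0, 1]."""
    return rgb @ np.array([0.2126, 0.7152, 0.0722])

def edge_map(gray):
    """Height from image structure: gradient magnitude scaled to [0, 1]."""
    gy, gx = np.gradient(gray)
    mag = np.hypot(gx, gy)
    peak = mag.max()
    return mag / peak if peak > 0 else mag

def mix_height_maps(maps, weights):
    """Blend equally sized height maps with normalized, user-chosen weights."""
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()
    return np.tensordot(w, np.stack(maps), axes=1)
```

In an interactive setting, the weights would typically be bound to a controller or slider so that the relief updates while the artist adjusts the mix.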


| funding | internal |

| contact person | Sevinc Eroglu |

Our research investigates the potential of VR as a medium of artistic expression that enables artists to create their work from a new perspective and with new expressive possibilities. To this end, we created "Fluid Sketching", an immersive 3D drawing environment inspired by traditional marbling art. It allows artists to draw 3D fluid-like sketches and manipulate them via six-degrees-of-freedom input devices. Different brush stroke settings are available, varying the characteristics of the fluid. Owing to the nature of fluids, the diffusion of the drawn sketch is animated; artists can alter the fluid properties and stop the diffusion process whenever they are satisfied with the current result. Furthermore, they can shape the drawn sketch by directly interacting with it, either with their hand or by blowing into the fluid. We rely on particle advection via curl-noise as a fast procedural method for animating the fluid flow.
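
To make the curl-noise idea concrete, here is a minimal sketch: a velocity field is obtained as the curl of a smooth vector potential, which makes it divergence-free, and particles are moved through it with a midpoint integrator. The sinusoidal `potential` is a hypothetical stand-in for a real noise function such as Perlin or simplex noise, and the step sizes are arbitrary; none of this is taken from the Fluid Sketching implementation.

```python
import numpy as np

def potential(p):
    """Hypothetical smooth vector potential; a stand-in for a noise field."""
    x, y, z = p[..., 0], p[..., 1], p[..., 2]
    return np.stack([
        np.sin(1.3 * y) * np.cos(0.7 * z),
        np.sin(0.9 * z) * np.cos(1.1 * x),
        np.sin(1.7 * x) * np.cos(0.5 * y),
    ], axis=-1)

def curl(p, eps=1e-4):
    """Central-difference curl of the potential at points p of shape (N, 3)."""
    def partial(axis):
        h = np.zeros(3)
        h[axis] = eps
        return (potential(p + h) - potential(p - h)) / (2 * eps)
    dx, dy, dz = partial(0), partial(1), partial(2)
    return np.stack([
        dy[..., 2] - dz[..., 1],   # dAz/dy - dAy/dz
        dz[..., 0] - dx[..., 2],   # dAx/dz - dAz/dx
        dx[..., 1] - dy[..., 0],   # dAy/dx - dAx/dy
    ], axis=-1)

def advect(p, dt=0.01, steps=100):
    """Move particles through the curl field with the midpoint method."""
    for _ in range(steps):
        k1 = curl(p)
        k2 = curl(p + 0.5 * dt * k1)
        p = p + dt * k2
    return p
```

Because the velocity is a curl, particles neither pile up nor thin out, which gives a fluid-like look without solving the fluid equations.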


| funding | internal |

| contact person | Tim Weißker |

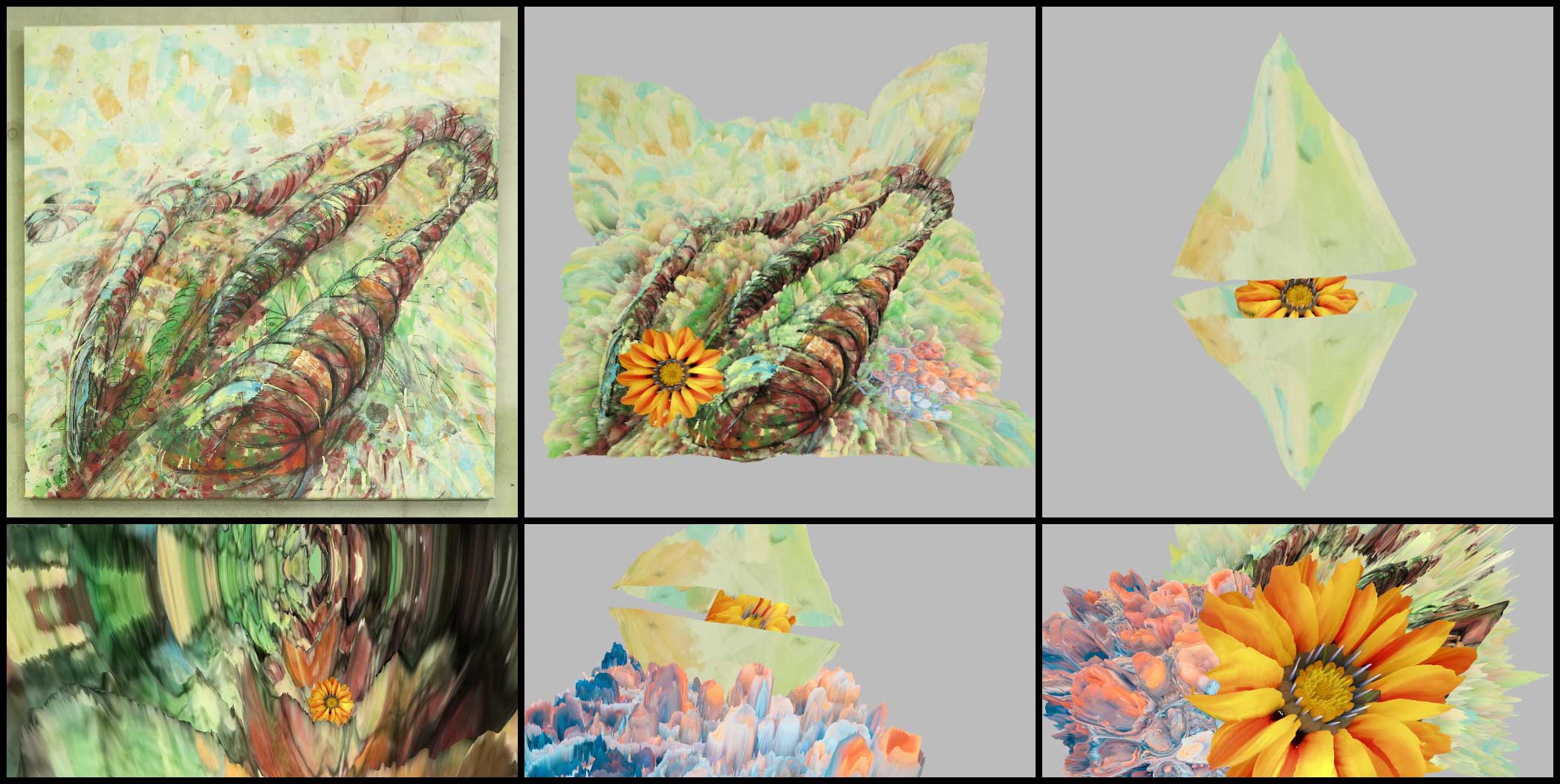

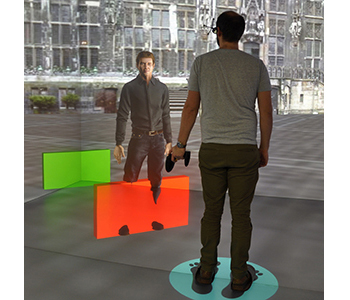

Multi-user virtual reality enables users around the world to interact with one another as avatars in a shared virtual environment. The resulting immersive experience allows participants to explore unknown places, interact with objects in the environment, and discuss the experience using natural body language and pointing gestures. Corresponding systems have already been successfully applied in various fields, including skill training, education, conferencing, therapy, and entertainment.

Despite the emerging benefits of multi-user virtual reality, social interactions in virtual environments are still less expressive than their real-world counterparts. Therefore, our research aims to increase the expressiveness of avatar-based communication by studying relevant social cues that contribute to mutual understanding and the feeling of togetherness. Furthermore, our research studies a novel class of interaction techniques that go beyond an accurate depiction of the real world and make use of the unique potential of virtual environments where physical constraints do not apply. These include advanced strategies for joint navigation, collaborative problem solving, and managing large virtual crowds.

The aixCAVE at RWTH Aachen University offers unique opportunities to experience such virtual environments, enabling users to directly perceive their own body as well as the presence of other collocated collaborators without requiring avatar representations. To address the recent increase in display diversity, our work explores combinations of collocated collaboration in the aixCAVE and remote collaboration with head-mounted displays to bring together users regardless of their location and available VR hardware.

To realize this research and simplify the development and prototyping of multi-user scenarios, we also investigate technical solutions and experiment with various state-of-the-art frameworks. Developing for multi-user virtual reality environments is challenging, and our research aims to facilitate this by exploring methods that help with creating, deploying, and testing collaborative virtual reality applications.


| funding | internal |

| contact person | Simon Oehrl |

Virtual Reality (VR) systems place high demands on the resolution and refresh rate of their displays to ensure a pleasant user experience. Rendering at such resolutions and frame rates pushes even powerful hardware to its limits. Various techniques exploit the spatial correlation between the pixels rendered for each eye as well as the temporal correlation between pixels in different frames to improve rendering performance. These techniques often introduce artifacts due to missing or outdated information, so they trade rendering performance against visual quality.

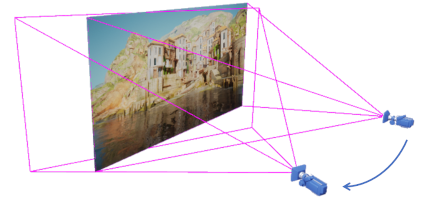

We investigate such reprojection methods in order to further improve rendering in VR applications. In particular, such techniques can be used in combination with low latency compression to enable wireless streaming of VR applications from desktop computers to head-mounted displays as illustrated here.


| The figure shows the problem reprojection techniques try to solve: rendering the scene using the image from a slightly altered camera position as an input. |
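
The core step of such a reprojection can be sketched for a single pixel: given its depth in the old view, it is lifted to a 3D point, moved into the new camera's coordinate frame, and projected again. The pinhole intrinsics `K`, the 4x4 transform `T_old_to_new`, and the function name are assumptions for this example; production techniques additionally have to fill the holes that appear where the new view reveals geometry the old image never contained.

```python
import numpy as np

def reproject(u, v, depth, K, T_old_to_new):
    """Map pixel (u, v) with known depth in the old view to the new view.

    K is a 3x3 pinhole intrinsics matrix shared by both views (assumed);
    T_old_to_new is a 4x4 rigid transform from old to new camera space.
    """
    # Lift the pixel to a 3D point in the old camera's coordinate frame.
    p_old = depth * (np.linalg.inv(K) @ np.array([u, v, 1.0]))
    # Express the point in the new camera's coordinate frame.
    p_new = T_old_to_new @ np.append(p_old, 1.0)
    # Project it with the same intrinsics and dehomogenize.
    uvw = K @ p_new[:3]
    return uvw[:2] / uvw[2]
```

For example, with a focal length of 500 pixels, a point at 2 m depth shifts by 25 pixels when the camera moves 0.1 m sideways, which shows why nearby geometry is most affected by outdated images.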


| funding | internal |

| contact person | Simon Oehrl |

Head-mounted displays (HMDs) are nowadays the preferred way to enjoy Virtual Reality (VR) applications due to their low cost and minimal setup requirements. However, as with all VR display technologies, driving these high-resolution, high-refresh-rate displays places high demands on the hardware. To ensure high visual quality of the scene, the HMD needs to be connected to a desktop computer to utilize its compute power.

The high data rates involved usually require a cable connecting the HMD and the computer. This cable is usually considered cumbersome as it restricts the movement of the user. Future development of wireless technologies will eventually provide enough bandwidth to switch from cable-based to wireless communication between the HMD and the computer. In the meantime, we investigate methods for low-latency image compression as well as adapted reprojection techniques to implement such wireless communication on current consumer hardware.


| Concept of the wireless VR setup: the HMD sends the virtual camera position to a desktop computer which will render the scene and send the resulting image back to the HMD. |
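
A back-of-the-envelope calculation shows why compression is needed for the return path. The display resolution, refresh rate, and wireless link rate below are hypothetical example values, not measurements from this project.

```python
def uncompressed_bitrate(width, height, refresh_hz, bits_per_pixel=24):
    """Raw video bitrate in bits per second for an uncompressed image stream."""
    return width * height * bits_per_pixel * refresh_hz

def required_compression_ratio(raw_bps, link_bps):
    """Factor by which the stream must shrink to fit the available link."""
    return raw_bps / link_bps

# Hypothetical HMD: 2160 x 1200 pixels (both eyes) at 90 Hz, 24 bits per pixel.
raw = uncompressed_bitrate(2160, 1200, 90)
# Hypothetical sustained wireless throughput of 400 Mbit/s.
ratio = required_compression_ratio(raw, 400e6)
print(f"{raw / 1e9:.2f} Gbit/s raw, compression ratio {ratio:.1f}")
```

Under these assumptions the raw stream is about 5.6 Gbit/s and would need to be compressed roughly 14-fold, with the encoder and decoder adding as little delay as possible.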


| funding | DFG Priority Program AUDICTIVE SPP2236: Auditory Cognition in Interactive Virtual Environments |

| contact person | Jonathan Ehret |

Listening to and remembering the content of conversations is a fundamental aspect of communication in many contexts (e.g., private, professional, educational). Nevertheless, the impact of listening to realistic running speech, and not just to single syllables, words, or isolated sentences, has hardly been researched from cognitive-psychological and/or acoustic perspectives. In particular, the influence of audiovisual characteristics, such as plausible spatial acoustics and visual co-verbal cues, on memory performance and comprehension of spoken text has been largely ignored in previous research.

This project, therefore, aims to investigate the combined effects of these potentially performance-relevant but scarcely addressed audiovisual cues on memory and comprehension for running speech. Our overarching methodological approach is to develop an audiovisual Virtual Reality (VR) testing environment that includes embodied Virtual Agents (VAs). This testing environment will be used in a series of experiments to research the basic aspects of visual-auditory cognitive performance in a close(r)-to-real-life setting.

This, in turn, will provide insights into the contribution of acoustic and visual cues to cognitive performance, user experience, and presence in VR applications, as well as to their quality and vibrancy, especially for applications with a social interaction focus.

We conduct this project in cooperation with the Institute of Psychology and the Institute for Hearing Technology and Acoustics of RWTH Aachen University. Our main objective in this project is to investigate how fidelity characteristics in audiovisual virtual environments contribute to the realism and liveliness of social VR scenarios with embodied VAs. In particular, we will study how different co-verbal/visual cues affect a user’s experience and cognitive performance and, in addition, will examine the technical quality criteria to be met in terms of visual immersion. Contributing to quality evaluation methods, this project will study the suitability of text memory, comprehension measures, and subjective judgments to assess the quality of experience (QoE) of a VR environment. Knowing which instances of enhanced realism in the VR environment lead to variations in cognitive performance and/or subjective judgments is valuable in two ways: it helps determine the necessary degree of 'realism' for auditory cognition research into memory and comprehension of heard text, and it identifies the audiovisual characteristics of a perception-, cognition-, and experience-optimized VR system.


| funding | RWTH SeedFund 2022 |

| contact person | Andrea Bönsch |

Adverse acoustic conditions make listening effortful. Research indicates that a speaker’s impaired voice quality, e.g., hoarseness, might have the same effect. The listening effort may also increase with background sounds in the listener’s vicinity. These factors often co-occur in seminar rooms or lecture halls at universities. This is problematic since effective listening during lectures may be critical for students' learning outcomes. Therefore, this project’s aim is twofold: (i) determining the effect of a speaker’s voice quality on students’ listening effort in a seminar room, using audio-visual immersive virtual reality (VR), and (ii) taking initial steps towards a VR-based framework allowing a dynamic and plausible acoustic and visual integration of background sound sources. In the long run, this framework may serve as a tool for well-controlled and easily customizable studies in psychological, acoustic, and VR research.

We conduct this project in cooperation with the Institute of Psychology and the Institute for Hearing Technology and Acoustics of RWTH Aachen University.


| funding | internal |

| contact person | Andrea Bönsch |

Computer-controlled, embodied, intelligent and conversational virtual agents (VAs) are increasingly common in various immersive virtual environments (IVEs). They can enliven architectural scenes turning them into plausible, realistic and thus convincing sceneries. Considering, for instance, the visualization of a virtual production facility, VAs might serve as virtual workers, who autonomously operate in the IVE. In addition, VAs can function as assistants by, e.g., guiding users through a scene, training them how to perform certain tasks, or being interlocutors answering questions. Thus, VAs can interact with the human user, forming a social group, while standing still in or moving through the virtual scene.

For the latter, social locomotion is of prime importance. This involves aspects such as finding collision-free trajectories, finding suitable walking constellations based on the situation-dependent interaction (e.g., leading vs. walking side-by-side), respecting the other’s personal space, showing adequate gazing behavior and body posture, and many more. Our research focuses on modeling such social locomotion behaviors for virtual agents in architectural scenarios. The resulting locomotion patterns should be applicable in high-end CAVE-like environments as well as with low-cost head-mounted displays. Furthermore, the behavior’s impact on the user’s perceived presence, social presence, and comfort is an object of investigation.


| funding | internal |

| contact person | Jonathan Ehret |

When interacting with embodied conversational agents in VR, the conversation has to feel as natural as possible. These agents can function as high-level human-machine interfaces, guide or teach the user, or simply enliven the virtual environment. For these interactions to feel believable, speech and co-verbal gestures (incl. backchannels) play an important role. Furthermore, the acoustic reproduction, i.e., the way the speech is presented to a user, is of prime importance.

To that end, we investigate how different acoustic phenomena, like directivity, influence the perception of the virtual agent with respect to its realism and believability, e.g., social presence. Furthermore, we look into different ways to generate co-verbal gestures, how they influence the conversation, and whether the user’s behavior changes due to these features.


| funding | internal |

| contact person | Andrea Bönsch |

One important aspect of social locomotion is respecting each other’s personal space (PS). This space is defined as a flexible protective zone maintained around one’s own body in real-life situations as well as in virtual environments. The PS is regulated dynamically: its size and shape depend on personal factors such as age and gender, environmental factors such as obstacles, gazing behavior, and many more. Furthermore, the personal space is divided into four segments differing in the distance from the user, reflecting the type of relationship between persons. Violating the PS evokes different levels of discomfort and physiological arousal. Thus, gaining more insight into this phenomenon is important, while letting VAs automatically and authentically respect the PS of a user or other virtual teammates is challenging.
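
One common reading of the four distance-based segments is Hall's proxemic zones. The sketch below classifies the distance between a user and an agent using commonly cited boundary values; both the zone names and the thresholds are assumptions for illustration, since the text above does not name them, and a realistic model would also adapt them to the personal and environmental factors just listed.

```python
import math

# Assumed zone boundaries in meters, following commonly cited values
# from Hall's proxemics; they are not taken from this project.
ZONES = [(0.45, "intimate"), (1.2, "personal"), (3.6, "social")]

def classify_distance(distance_m):
    """Return the name of the zone that a given distance falls into."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    for upper_limit, name in ZONES:
        if distance_m < upper_limit:
            return name
    return "public"

def agent_zone(user_pos, agent_pos):
    """Classify an agent by its Euclidean distance to the user."""
    return classify_distance(math.dist(user_pos, agent_pos))
```

An agent controller could use such a classification to slow down or choose a different path before it enters a zone that is too close for the current interaction.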

After conducting an initial study regarding PS and collision avoidance during user navigation through a small-scale IVE (see left figure), we now focus on further PS investigations with respect to emotions shown by virtual agents (see right figure) as well as the number of virtual agents a user is facing. Our goal is to develop an appropriate behavioral setting for autonomous VAs in order to embed them into various architectural scenarios while keeping a high user comfort level, immersion, and perceived social presence.


| A virtual agent blocking a user's path in a two-man office (left). A user indicating his personal space while being approached by a virtual agent (right). | |


| funding | European Union’s Horizon 2020 |

| contact person | Sebastian Pape |

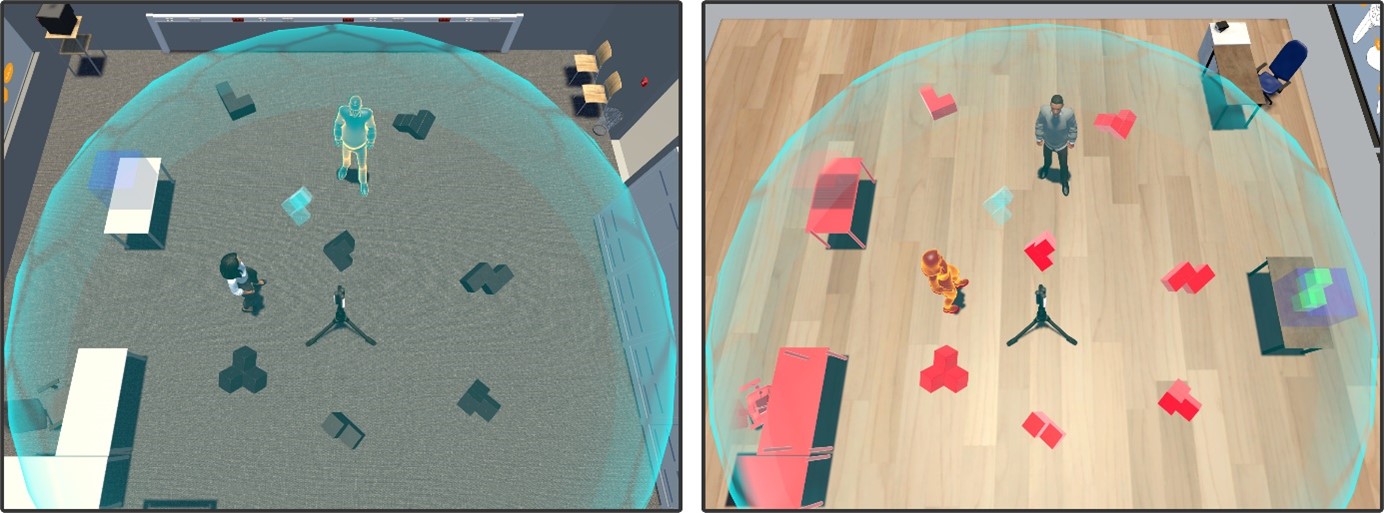

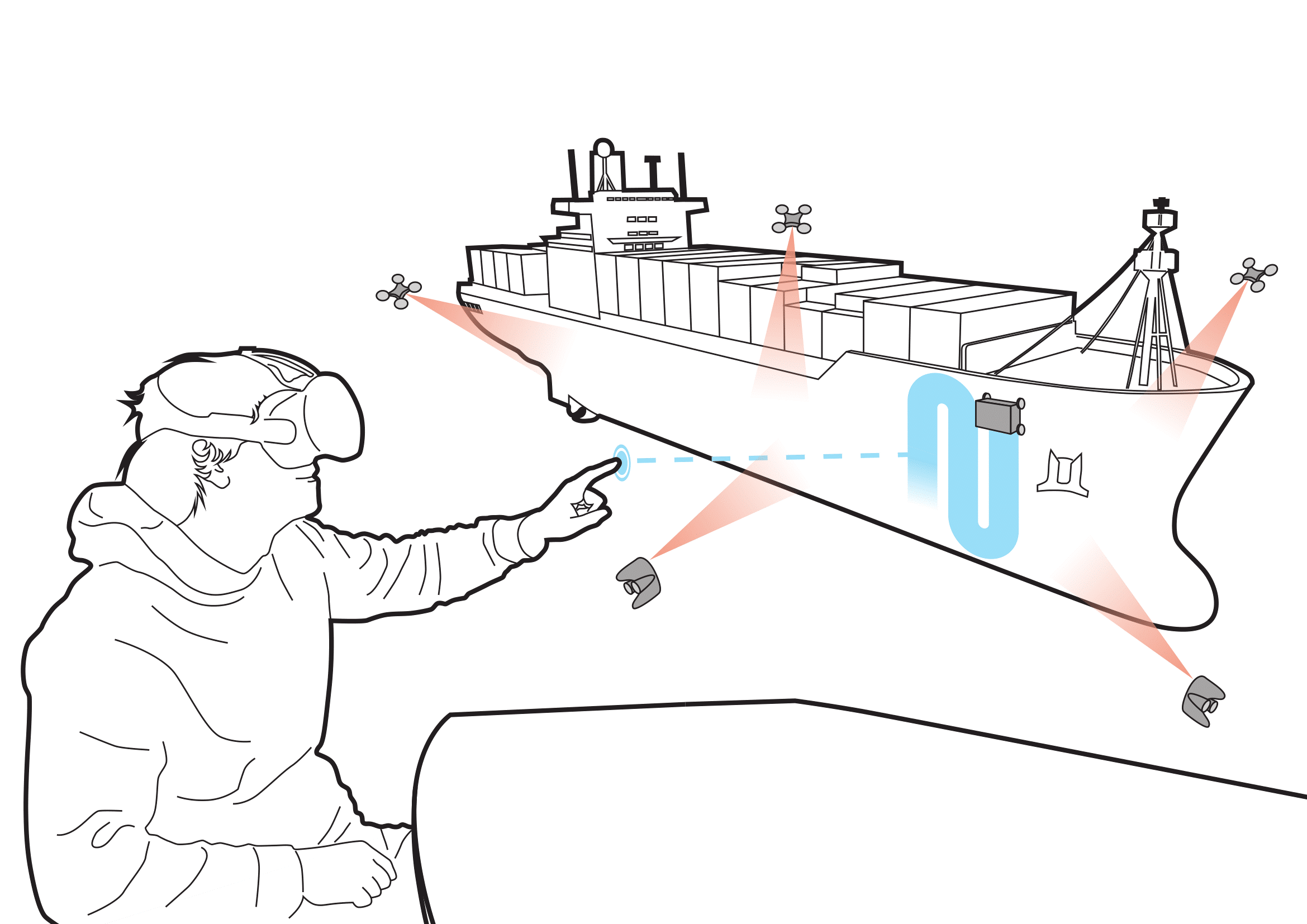

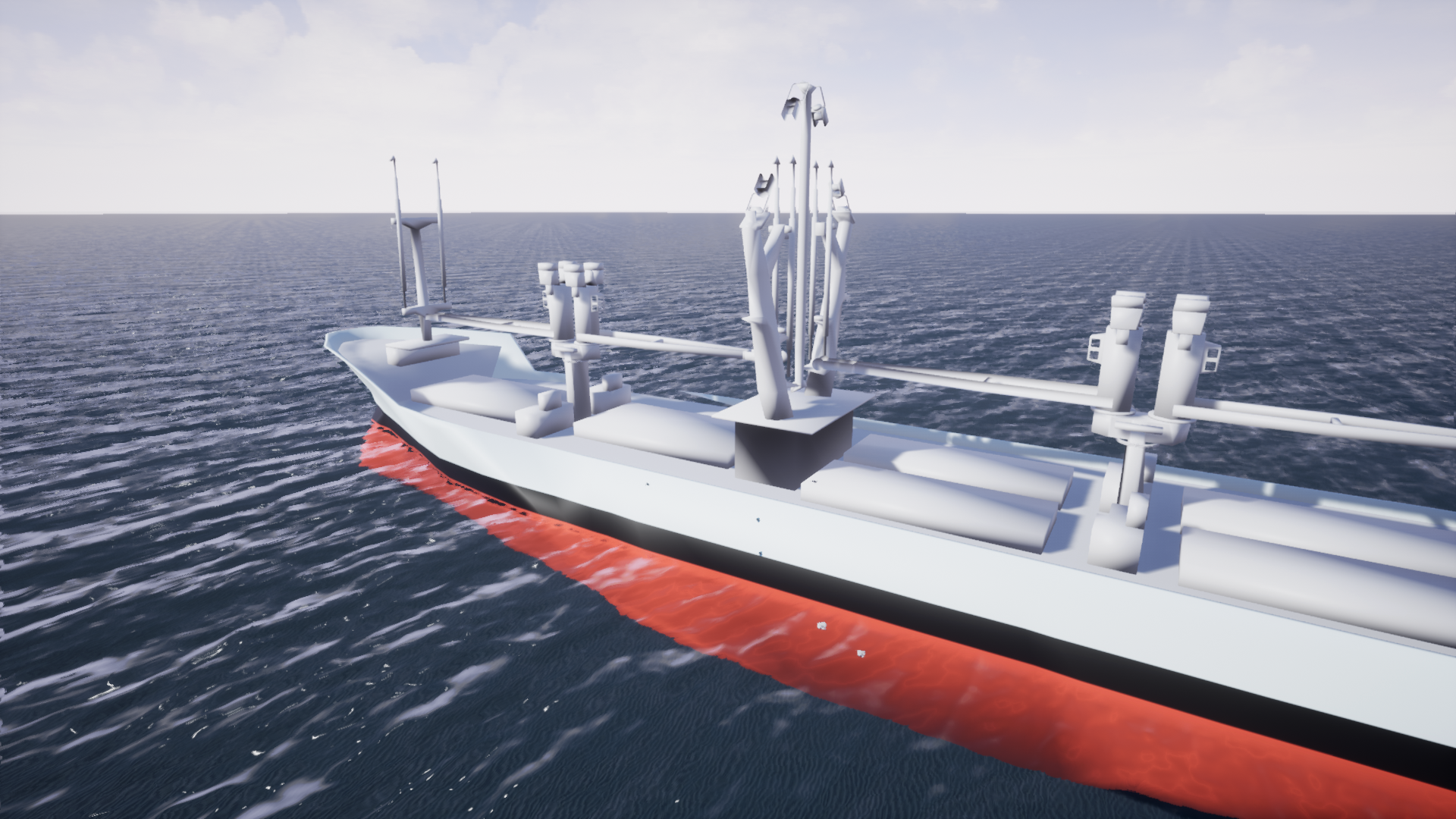

Due to increasing globalization and the outsourcing of production to lower-wage countries, there is a growing demand for large, long-distance cargo and component transport. A large part of this demand is met by container ships cruising around the world.

So far, the inspection and maintenance of these container ships have been carried out at fixed intervals in the drydocks of shipyards. However, the downtimes and corresponding costs of inspections without findings could be avoided if the condition of the ships' hulls were monitored continuously. The intervals could even be extended if minor damage and biofouling could be treated locally, in a harbor or at sea.

This idea is the aim of the EU project BugWright2. In this project, semi-autonomous robots are to be developed that can inspect the ship hull for corrosion without the need for a drydock. Additionally, the robots should be able to clean the hull of microorganisms and algae to avoid biofouling.

The technical implementation of this project will be carried out jointly by a group of 21 European partners under the lead of Prof. Cédric Pradalier from GeorgiaTech Lorraine in France. Besides academic partners like the Norwegian University of Science and Technology, the University of Porto, or the World Maritime University in Malmö, research institutions like the Austrian Lakeside Labs and shipyard owners like Star Bulk Carriers Corp. are part of the group.

We will lead the development of an augmented and virtual reality (AR/VR) steering and monitoring system for the planned robots. To achieve this goal in a sustainable fashion, we work in close collaboration with Prof. Thomas Ellwart, the head of the Department for Business Psychology at the University of Trier. In this direct collaboration, research on innovative interaction techniques with state-of-the-art AR/VR technology will be conducted to enable better interfaces in the later project stages. Here, special focus is placed on the intuitive usability of the resulting AR and VR system, so that it offers significant added value over traditional desktop-based approaches.

Over the course of the project, software solutions for the Unreal Engine are being developed. For the communication between the game engine and the Robot Operating System (ROS), a plugin was developed, which is available on GitHub here.


| The project's goal is the development of semi-autonomous robots that clean the outer walls of container ships and scan them for damage. Robot navigation and monitoring of the work progress will be carried out using an AR/VR-based software solution, while the localization of the robots is ensured by drones above and under water. | |