Profile

Dr. Bernd Hentschel

Publications

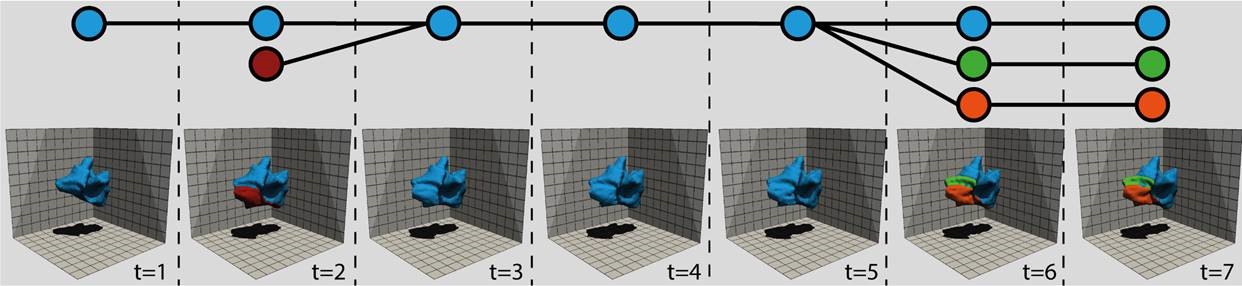

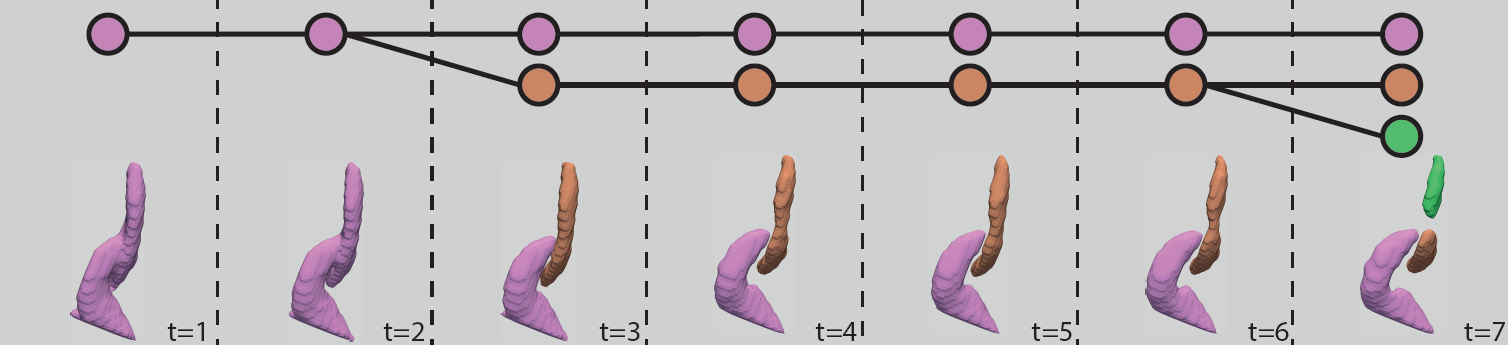

Feature Tracking by Two-Step Optimization

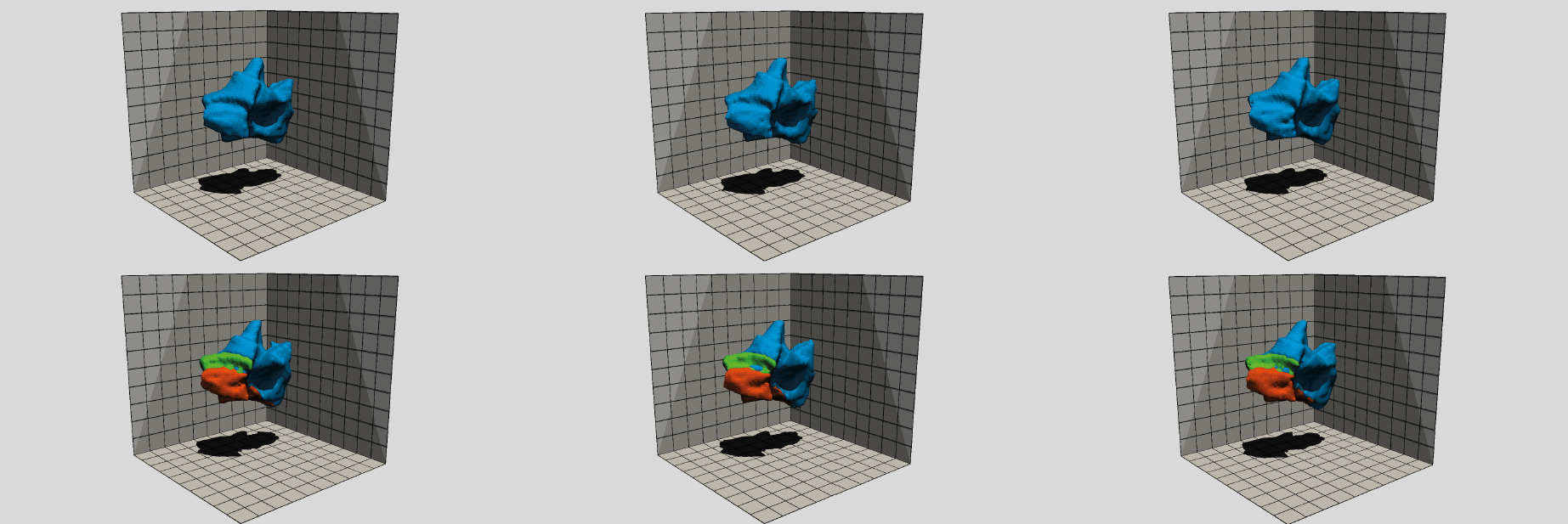

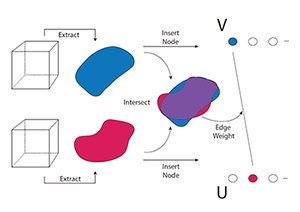

Tracking the temporal evolution of features in time-varying data is a key method in visualization. For typical feature definitions, such as vortices, objects are sparsely distributed over the data domain. In this paper, we present a novel approach for tracking both sparse and space-filling features. While the former comprise only a small fraction of the domain, the latter form a set of objects whose union covers the domain entirely while the individual objects are mutually disjoint. Our approach determines the assignment of features between two successive time steps by solving two graph optimization problems. It first resolves one-to-one assignments of features by computing a maximum-weight, maximum-cardinality matching on a weighted bipartite graph. Second, our algorithm detects events by creating a graph of potentially conflicting event explanations and finding a weighted independent set in it. We demonstrate our method's effectiveness on synthetic and simulation data sets, the former of which enables quantitative evaluation because of the availability of ground-truth information. Here, our method performs on par with or better than a well-established reference algorithm. In addition, manual visual inspection by our collaborators confirms the results' plausibility for simulation data.
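The first step described in the abstract above, a one-to-one assignment via a maximum-weight, maximum-cardinality matching on a weighted bipartite graph, can be illustrated with a small sketch. This is not the paper's implementation. It is an exhaustive search that is only practical for tiny graphs, and it assumes that cardinality is maximized first and weight second. The function name `best_matching`, the feature labels, and the overlap weights are hypothetical.

```python
from typing import Dict, FrozenSet, List, Tuple

Edge = Tuple[str, str]  # (feature at time t, feature at time t+1)


def best_matching(weights: Dict[Edge, float]) -> List[Edge]:
    """Exhaustively find a maximum-cardinality matching of maximum weight.

    Assumption: cardinality is compared first, total weight second.
    Exponential running time; for illustration on tiny inputs only.
    """
    left = sorted({u for u, _ in weights})
    best_key: Tuple[int, float] = (0, 0.0)
    best_edges: List[Edge] = []

    def extend(idx: int, used_right: FrozenSet[str],
               chosen: List[Edge], total: float) -> None:
        nonlocal best_key, best_edges
        if idx == len(left):
            key = (len(chosen), total)
            if key > best_key:  # lexicographic: cardinality, then weight
                best_key, best_edges = key, list(chosen)
            return
        u = left[idx]
        # Option 1: leave feature u unmatched.
        extend(idx + 1, used_right, chosen, total)
        # Option 2: match u to any right-hand feature that is still free.
        for (a, b), w in weights.items():
            if a == u and b not in used_right:
                chosen.append((a, b))
                extend(idx + 1, used_right | {b}, chosen, total + w)
                chosen.pop()

    extend(0, frozenset(), [], 0.0)
    return best_edges


if __name__ == "__main__":
    # Hypothetical overlap weights between features of two time steps.
    overlaps = {("a1", "b1"): 0.9, ("a1", "b2"): 0.4, ("a2", "b1"): 0.8}
    print(best_matching(overlaps))
```

In this toy input, picking the single heaviest edge (`a1`, `b1`) would leave `a2` unmatched, whereas the cardinality-first criterion pairs both features. A real implementation would use a polynomial-time matching algorithm instead of enumeration.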

@ARTICLE{Schnorr2018,

author = {Andrea Schnorr and Dirk N. Helmrich and Dominik Denker and Torsten W. Kuhlen and Bernd Hentschel},

title = {{F}eature {T}racking by {T}wo-{S}tep {O}ptimization},

journal = TVCG,

volume = {preprint available online},

doi = {https://doi.org/10.1109/TVCG.2018.2883630},

year = 2018,

}

Feature Tracking Utilizing a Maximum-Weight Independent Set Problem

Tracking the temporal evolution of features in time-varying data remains a combinatorially challenging problem. A recent method models event detection as a maximum-weight independent set problem on a graph representation of all possible explanations [35]. However, optimally solving this problem is NP-hard in the general case. Following the approach by Schnorr et al., we propose a new algorithm for event detection. Our algorithm exploits the model-specific structure of the independent set problem. Specifically, we show how to traverse potential explanations in such a way that a greedy assignment provides reliably good results. We demonstrate the effectiveness of our approach on synthetic and simulation data sets, the former of which include ground-truth tracking information, which enables a quantitative evaluation. Our results are within 1% of the theoretical optimum and comparable to an approximate solution provided by a state-of-the-art optimization package. At the same time, our algorithm is significantly faster.
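To make the idea of a greedy assignment on an independent set problem concrete, the following sketch shows a generic greedy heuristic: explanations are visited in order of decreasing weight, and each accepted explanation blocks all explanations that conflict with it. The traversal order used in the paper is model-specific and is not reproduced here. The weight-descending order, the function name `greedy_independent_set`, and the example explanations are assumptions made purely for illustration.

```python
from typing import Dict, List, Set


def greedy_independent_set(weights: Dict[str, float],
                           conflicts: Dict[str, Set[str]]) -> List[str]:
    """Pick non-conflicting nodes greedily by descending weight.

    `conflicts` is assumed to be a symmetric adjacency mapping: if b is in
    conflicts[a], then a is in conflicts[b]. The result is an independent
    set, but in general not a maximum-weight one.
    """
    chosen: List[str] = []
    blocked: Set[str] = set()
    # Ties are broken by node name so that the result is deterministic.
    for node in sorted(weights, key=lambda n: (-weights[n], n)):
        if node in blocked:
            continue
        chosen.append(node)
        blocked.add(node)
        blocked.update(conflicts.get(node, set()))
    return chosen


if __name__ == "__main__":
    # Hypothetical event explanations with plausibility weights.
    w = {"merge": 0.9, "split": 0.6, "continue": 0.5}
    c = {"merge": {"split"}, "split": {"merge"}, "continue": set()}
    print(greedy_independent_set(w, c))
```

The visiting order determines the quality of a greedy result, which is why the abstract emphasizes how the potential explanations are traversed.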

@InProceedings{Schnorr2019,

author = {Andrea Schnorr and Dirk Norbert Helmrich and Hank Childs and Torsten Wolfgang Kuhlen and Bernd Hentschel},

title = {{Feature Tracking Utilizing a Maximum-Weight Independent Set Problem}},

booktitle = {9th IEEE Symposium on Large Data Analysis and Visualization},

year = {2019}

}

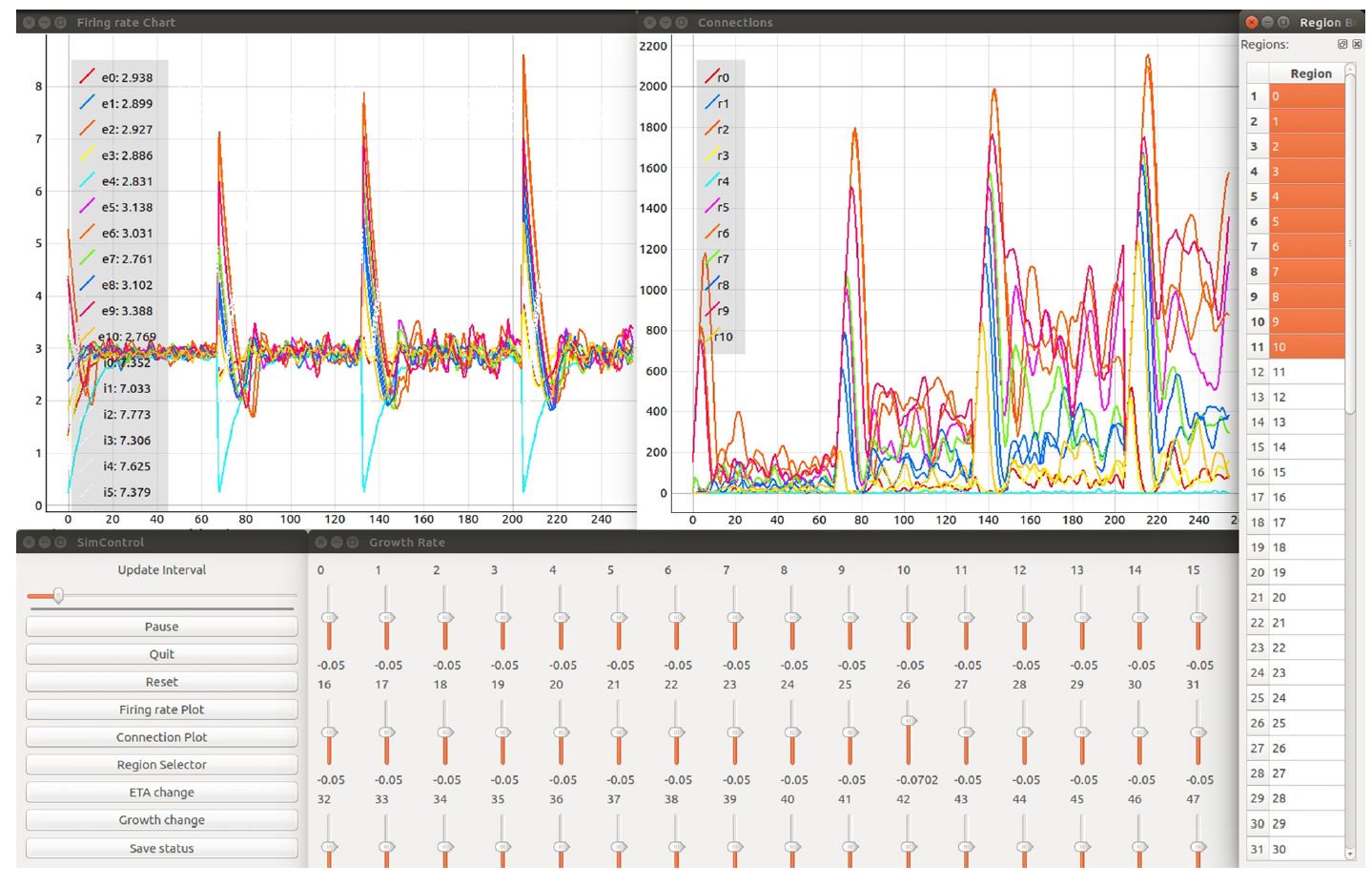

Toward Rigorous Parameterization of Underconstrained Neural Network Models Through Interactive Visualization and Steering of Connectivity Generation

Simulation models in many scientific fields can have non-unique solutions or unique solutions which can be difficult to find. Moreover, in evolving systems, unique final state solutions can be reached by multiple different trajectories. Neuroscience is no exception. Often, neural network models are subject to parameter fitting to obtain desirable output comparable to experimental data. Parameter fitting without sufficient constraints and a systematic exploration of the possible solution space can lead to conclusions valid only around local minima or around non-minima. To address this issue, we have developed an interactive tool for visualizing and steering parameters in neural network simulation models. In this work, we focus particularly on connectivity generation, since finding suitable connectivity configurations for neural network models constitutes a complex parameter search scenario. The development of the tool has been guided by several use cases -- the tool allows researchers to steer the parameters of the connectivity generation during the simulation, thus quickly growing networks composed of multiple populations with a targeted mean activity. The flexibility of the software allows scientists to explore other connectivity and neuron variables apart from the ones presented as use cases. With this tool, we enable an interactive exploration of parameter spaces and a better understanding of neural network models and grapple with the crucial problem of non-unique network solutions and trajectories. In addition, we observe a reduction in turn around times for the assessment of these models, due to interactive visualization while the simulation is computed.

@ARTICLE{10.3389/fninf.2018.00032,

AUTHOR={Nowke, Christian and Diaz-Pier, Sandra and Weyers, Benjamin and Hentschel, Bernd and Morrison, Abigail and Kuhlen, Torsten W. and Peyser, Alexander},

TITLE={Toward Rigorous Parameterization of Underconstrained Neural Network Models Through Interactive Visualization and Steering of Connectivity Generation},

JOURNAL={Frontiers in Neuroinformatics},

VOLUME={12},

PAGES={32},

YEAR={2018},

URL={https://www.frontiersin.org/article/10.3389/fninf.2018.00032},

DOI={10.3389/fninf.2018.00032},

ISSN={1662-5196},

ABSTRACT={Simulation models in many scientific fields can have non-unique solutions or unique solutions which can be difficult to find.

Moreover, in evolving systems, unique final state solutions can be reached by multiple different trajectories.

Neuroscience is no exception. Often, neural network models are subject to parameter fitting to obtain desirable output comparable to experimental data. Parameter fitting without sufficient constraints and a systematic exploration of the possible solution space can lead to conclusions valid only around local minima or around non-minima. To address this issue, we have developed an interactive tool for visualizing and steering parameters in neural network simulation models.

In this work, we focus particularly on connectivity generation, since finding suitable connectivity configurations for neural network models constitutes a complex parameter search scenario. The development of the tool has been guided by several use cases -- the tool allows researchers to steer the parameters of the connectivity generation during the simulation, thus quickly growing networks composed of multiple populations with a targeted mean activity. The flexibility of the software allows scientists to explore other connectivity and neuron variables apart from the ones presented as use cases. With this tool, we enable an interactive exploration of parameter spaces and a better understanding of neural network models and grapple with the crucial problem of non-unique network solutions and trajectories. In addition, we observe a reduction in turn around times for the assessment of these models, due to interactive visualization while the simulation is computed.}

}

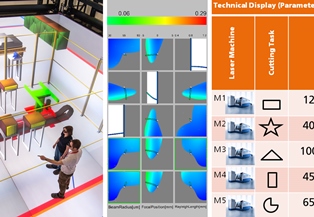

Interactive Visual Analysis of Multi-dimensional Metamodels

In the simulation of manufacturing processes, complex models are used to examine process properties. To save computation time, so-called metamodels serve as surrogates for the original models. Metamodels are inherently difficult to interpret, because they resemble multi-dimensional functions $f : \mathbb{R}^n \to \mathbb{R}^m$ that map configuration parameters to production criteria. We propose a multi-view visualization application called memoSlice that composes several visualization techniques, specially adapted to the analysis of metamodels. With our application, we enable users not only to improve their understanding of a metamodel, but also to easily optimize processes. We pay special attention to providing a high level of interactivity by realizing specialized parallelization techniques that provide timely feedback on user interactions. In this paper we outline these parallelization techniques and demonstrate their effectiveness by means of micro- and high-level measurements.

@inproceedings {pgv.20181098,

booktitle = {Eurographics Symposium on Parallel Graphics and Visualization},

editor = {Hank Childs and Fernando Cucchietti},

title = {{Interactive Visual Analysis of Multi-dimensional Metamodels}},

author = {Gebhardt, Sascha and Pick, Sebastian and Hentschel, Bernd and Kuhlen, Torsten Wolfgang},

year = {2018},

publisher = {The Eurographics Association},

ISSN = {1727-348X},

ISBN = {978-3-03868-054-3},

DOI = {10.2312/pgv.20181098}

}

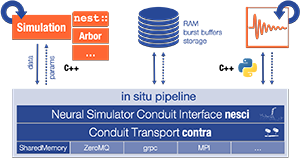

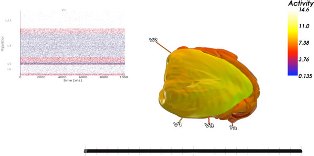

Streaming Live Neuronal Simulation Data into Visualization and Analysis

Neuroscientists want to inspect the data their simulations are producing while these are still running. This will on the one hand save them time waiting for results and therefore insight. On the other, it will allow for more efficient use of CPU time if the simulations are being run on supercomputers. If they had access to the data being generated, neuroscientists could monitor it and take counter-actions, e.g., parameter adjustments, should the simulation deviate too much from in-vivo observations or get stuck.

As a first step toward this goal, we devise an in situ pipeline tailored to the neuroscientific use case. It is capable of recording and transferring simulation data to an analysis/visualization process, while the simulation is still running. The developed libraries are made publicly available as open source projects. We provide a proof-of-concept integration, coupling the neuronal simulator NEST to basic 2D and 3D visualization.

@InProceedings{10.1007/978-3-030-02465-9_18,

author="Oehrl, Simon

and M{\"u}ller, Jan

and Schnathmeier, Jan

and Eppler, Jochen Martin

and Peyser, Alexander

and Plesser, Hans Ekkehard

and Weyers, Benjamin

and Hentschel, Bernd

and Kuhlen, Torsten W.

and Vierjahn, Tom",

editor="Yokota, Rio

and Weiland, Mich{\`e}le

and Shalf, John

and Alam, Sadaf",

title="Streaming Live Neuronal Simulation Data into Visualization and Analysis",

booktitle="High Performance Computing",

year="2018",

publisher="Springer International Publishing",

address="Cham",

pages="258--272",

abstract="Neuroscientists want to inspect the data their simulations are producing while these are still running. This will on the one hand save them time waiting for results and therefore insight. On the other, it will allow for more efficient use of CPU time if the simulations are being run on supercomputers. If they had access to the data being generated, neuroscientists could monitor it and take counter-actions, e.g., parameter adjustments, should the simulation deviate too much from in-vivo observations or get stuck.",

isbn="978-3-030-02465-9"

}

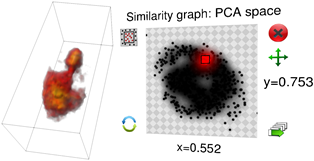

Poster: Complexity Estimation for Feature Tracking Data.

Feature tracking is a method of time-varying data analysis. Due to the complexity of the underlying problem, different feature tracking algorithms have different levels of correctness in certain use cases. However, there is no efficient way to evaluate their performance on simulation data since no ground truth is easily obtainable. Synthetic data is a way to ensure a minimum level of correctness, though there are limits to its expressiveness when comparing the results to simulation data. To close this gap, we calculate a synthetic data set and use its results to extract a hypothesis about the algorithm performance that we can apply to simulation data.

@inproceedings{Helmrich2018,

title={Complexity Estimation for Feature Tracking Data.},

author={Helmrich, Dirk N and Schnorr, Andrea and Kuhlen, Torsten W and Hentschel, Bernd},

booktitle={LDAV},

pages={100--101},

year={2018}

}

Talk: Streaming Live Neuronal Simulation Data into Visualization and Analysis

Being able to inspect neuronal network simulations while they are running provides new research strategies to neuroscientists, as it enables them to perform actions like parameter adjustments in case the simulation behaves unexpectedly. This can also save compute resources when such simulations are run on large supercomputers, as errors can be detected and corrected earlier, saving valuable compute time. This talk presents a prototypical pipeline that enables in-situ analysis and visualization of running simulations.

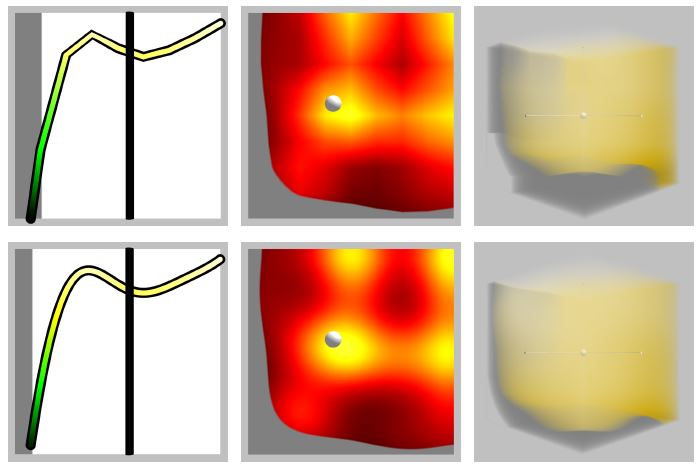

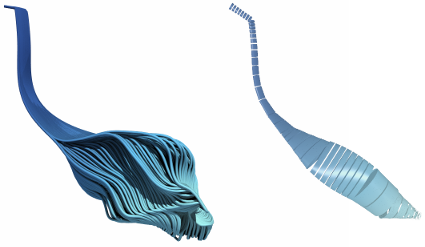

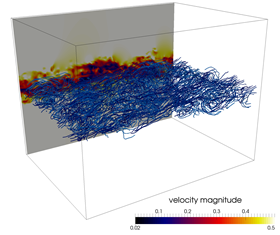

Interactive Exploration of Dissipation Element Geometry

Dissipation elements (DE) define a geometrical structure for the analysis of small-scale turbulence. Existing analyses based on DEs focus on a statistical treatment of large populations of DEs. In this paper, we propose a method for the interactive visualization of the geometrical shape of DE populations. We follow a two-step approach: in a pre-processing step, we approximate individual DEs by tube-like, implicit shapes with elliptical cross sections of varying radii; we then render these approximations by direct ray-casting thereby avoiding the need for costly generation of detailed, explicit geometry for rasterization. Our results demonstrate that the approximation gives a reasonable representation of DE geometries and the rendering performance is suitable for interactive use.

@InProceedings{Vierjahn2017,

booktitle = {Eurographics Symposium on Parallel Graphics and Visualization},

author = {Tom Vierjahn and Andrea Schnorr and Benjamin Weyers and Dominik Denker and Ingo Wald and Christoph Garth and Torsten W. Kuhlen and Bernd Hentschel},

title = {Interactive Exploration of Dissipation Element Geometry},

year = {2017},

pages = {53--62},

ISSN = {1727-348X},

ISBN = {978-3-03868-034-5},

doi = {10.2312/pgv.20171093},

}

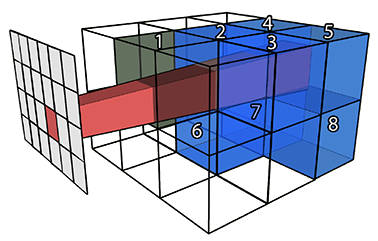

A Task-Based Parallel Rendering Component For Large-Scale Visualization Applications

An increasingly heterogeneous system landscape in modern high performance computing requires the efficient and portable adaption of performant algorithms to diverse architectures. However, classic hybrid shared-memory/distributed systems are designed and tuned towards specific platforms, thus impeding development, usage and optimization of these approaches with respect to portability. We demonstrate a flexible parallel rendering framework built upon a task-based dynamic runtime environment enabling adaptable performance-oriented deployment on various platform configurations. Our task definition represents an effective and easy-to-control trade-off between sort-first and sort-last image compositing, enabling good scalability in combination with inherent dynamic load balancing. We conduct comprehensive benchmarks to verify the characteristics and potential of our novel task-based system design for high-performance visualization.

@inproceedings {Biedert2017,

booktitle = {Eurographics Symposium on Parallel Graphics and Visualization},

title = {{A Task-Based Parallel Rendering Component For Large-Scale Visualization Applications}},

author = {Biedert, Tim and Werner, Kilian and Hentschel, Bernd and Garth, Christoph},

year = {2017},

pages = {63--71},

ISSN = {1727-348X},

ISBN = {978-3-03868-034-5},

DOI = {10.2312/pgv.20171094}

}

Interactive Level-of-Detail Visualization of 3D-Polarized Light Imaging Data Using Spherical Harmonics

3D-Polarized Light Imaging (3D-PLI) provides data that enables an exploration of brain fibers at very high resolution. However, the visualization poses several challenges. Besides the huge data set sizes, users have to visually perceive the sheer amount of information, which might be, among other aspects, inhibited for inner structures because of occlusion by outer layers of the brain. We propose a clustering of fiber directions by means of spherical harmonics using a level-of-detail structure by which the user can interactively choose a clustering degree according to the zoom level or details required. Furthermore, the clustering method can be used for the automatic grouping of similar spherical harmonics into one representative. An optional overlay with a direct vector visualization of the 3D-PLI data provides a better anatomical context.

Honorable Mention for Best Short Paper!

@inproceedings {Haenel2017Interactive,

booktitle = {EuroVis 2017 - Short Papers},

editor = {Barbora Kozlikova and Tobias Schreck and Thomas Wischgoll},

title = {{Interactive Level-of-Detail Visualization of 3D-Polarized Light Imaging Data Using Spherical Harmonics}},

author = {H{\"a}nel, Claudia and Demiralp, Ali C. and Axer, Markus and Gr{\"a}ssel, David and Hentschel, Bernd and Kuhlen, Torsten W.},

year = {2017},

publisher = {The Eurographics Association},

ISBN = {978-3-03868-043-7},

DOI = {10.2312/eurovisshort.20171145}

}

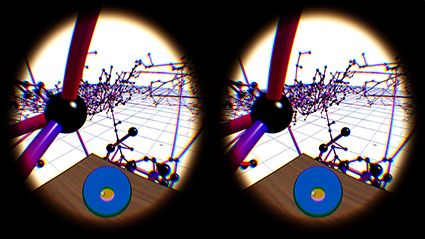

buenoSDIAs: Supporting Desktop Immersive Analytics While Actively Preventing Cybersickness

Immersive data analytics as an emerging research topic in scientific and information visualization has recently been brought back into focus due to the emergence of low-cost consumer virtual reality hardware. Previous research has shown the positive impact of immersive visualization on data analytics workflows, but in most cases, insights were based on large-screen setups. In contrast, less research focuses on a close integration of immersive technology into existing, i.e., desktop-based data analytics workflows. This implies specific requirements regarding the usability of such systems, which include, e.g., the prevention of cybersickness. In this work, we present a prototypical application, which offers a first set of tools and addresses major challenges for a fully immersive data analytics setting in which the user is sitting at a desktop. In particular, we address the problem of cybersickness by integrating prevention strategies combined with individualized user profiles to maximize time of use.

Gistualizer: An Immersive Glyph for Multidimensional Datapoints

Data from diverse workflows is often too complex for an adequate analysis without visualization. One such kind of data is multi-dimensional datasets, which can be visualized via a wide array of techniques. For instance, glyphs can be used to visualize individual datapoints. However, glyphs need to be actively looked at to be comprehended. This work explores a novel approach towards visualizing a single datapoint, with the intention of increasing the user’s awareness of it while they are looking at something else. The basic concept is to represent this point by a scene that surrounds the user in an immersive virtual environment. This idea is based on the observation that humans can extract low-detail information, the so-called gist, from a scene nearly instantly (in 100 ms or less). We aim at providing a first step towards answering the question whether enough information can be encoded in the gist of a scene to represent a point in multi-dimensional space and whether this information is helpful to the user’s understanding of this space.

@inproceedings{Bellgardt2017,

author = {Bellgardt, Martin and Gebhardt, Sascha and Hentschel, Bernd and Kuhlen, Torsten W.},

booktitle = {Workshop on Immersive Analytics},

title = {{Gistualizer: An Immersive Glyph for Multidimensional Datapoints}},

year = {2017}

}

Poster: Towards a Design Space Characterizing Workflows that Take Advantage of Immersive Visualization

Immersive visualization (IV) fosters the creation of mental images of a data set, a scene, a procedure, etc. We devise an initial version of a design space for categorizing workflows that take advantage of IV. From this categorization, specific requirements for seamlessly integrating IV can be derived. We validate the design space with three workflows emerging from our research projects.

@InProceedings{Vierjahn2017,

Title = {Towards a Design Space Characterizing Workflows that Take Advantage of Immersive Visualization},

Author = {Tom Vierjahn and Daniel Zielasko and Kees van Kooten and Peter Messmer and Bernd Hentschel and Torsten W. Kuhlen and Benjamin Weyers},

Booktitle = {IEEE Virtual Reality Conference Poster Proceedings},

Year = {2017},

Pages = {329--330},

DOI={10.1109/VR.2017.7892310}

}

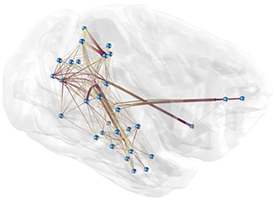

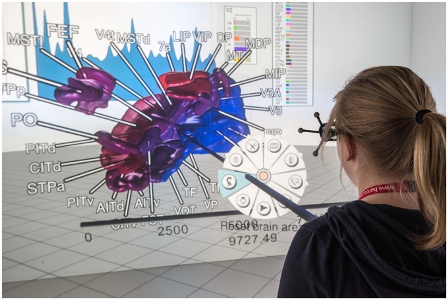

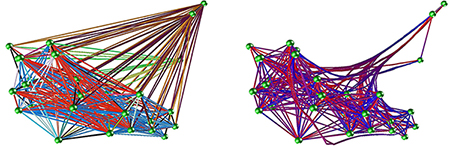

Interactive 3D Force-Directed Edge Bundling

Interactive analysis of 3D relational data is challenging. A common way of representing such data is the node-link diagram, as it supports analysts in forming a mental model of the data. However, naïve 3D depictions of complex graphs tend to be visually cluttered, even more than in a 2D layout. This makes graph exploration and data analysis less efficient. This problem can be addressed by edge bundling. We introduce a 3D cluster-based edge bundling algorithm that is inspired by the force-directed edge bundling (FDEB) algorithm [Holten2009] and fulfills the requirements to be embedded in an interactive framework for spatial data analysis. It is parallelized, and its runtime scales with the size of the graph. Furthermore, it maintains the edge’s model and thus supports rendering the graph in different structural styles. We demonstrate this with a graph originating from a simulation of the function of a macaque brain.
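For readers unfamiliar with force-directed edge bundling, the following sketch shows one simplified FDEB-style update step in 3D: each edge is subdivided into points, and every interior point is moved by a spring force towards its neighbours on the same edge plus an attraction towards the corresponding point on every other edge. It is not the cluster-based algorithm of the paper and omits edge compatibility measures, clustering, and parallelization. The names `subdivide` and `bundle_step` and the parameters `step` and `stiffness` are assumptions for illustration.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
Polyline = List[Point]


def subdivide(p: Sequence[float], q: Sequence[float], n: int) -> Polyline:
    """Return n interior points plus both endpoints, evenly spaced from p to q."""
    return [tuple(a + (b - a) * i / (n + 1) for a, b in zip(p, q))
            for i in range(n + 2)]


def bundle_step(edges: List[Polyline], step: float = 0.1,
                stiffness: float = 0.1) -> List[Polyline]:
    """Apply one simplified FDEB-style displacement to all interior points.

    Assumes every edge has the same number of subdivision points and treats
    all edge pairs as fully compatible.
    """
    result: List[Polyline] = []
    for e_idx, pts in enumerate(edges):
        moved: Polyline = [pts[0]]  # endpoints stay fixed
        for i in range(1, len(pts) - 1):
            # Spring force pulling the point towards its two neighbours.
            force = [stiffness * (pts[i - 1][k] + pts[i + 1][k] - 2 * pts[i][k])
                     for k in range(3)]
            # Attraction towards the matching point on every other edge,
            # with magnitude 1 / distance.
            for o_idx, other in enumerate(edges):
                if o_idx == e_idx:
                    continue
                d = [other[i][k] - pts[i][k] for k in range(3)]
                dist = math.sqrt(sum(c * c for c in d))
                if dist > 1e-9:
                    for k in range(3):
                        force[k] += d[k] / (dist * dist)
            moved.append(tuple(pts[i][k] + step * force[k] for k in range(3)))
        moved.append(pts[-1])
        result.append(moved)
    return result


if __name__ == "__main__":
    # Two hypothetical parallel edges, one unit apart along the y axis.
    edges = [subdivide((0, 0, 0), (4, 0, 0), 3),
             subdivide((0, 1, 0), (4, 1, 0), 3)]
    bundled = bundle_step(edges)
    print(bundled[0][2], bundled[1][2])
```

Repeating such steps with a shrinking step size pulls similar edges together into bundles while the fixed endpoints keep the graph topology readable.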

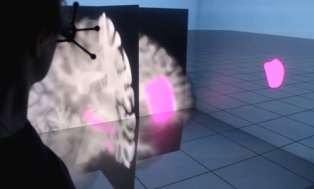

Visual Quality Adjustment for Volume Rendering in a Head-Tracked Virtual Environment

To avoid simulator sickness and improve presence in immersive virtual environments (IVEs), high frame rates and low latency are required. In contrast, volume rendering applications typically strive for high visual quality that induces high computational load and, thus, leads to low frame rates. To evaluate this trade-off in IVEs, we conducted a controlled user study with 53 participants. Search and count tasks were performed in a CAVE with varying volume rendering conditions which are applied according to viewer position updates corresponding to head tracking. The results of our study indicate that participants preferred the rendering condition with continuous adjustment of the visual quality over an instantaneous adjustment, which guaranteed low latency, and over no adjustment, which provided constant high visual quality but rather low frame rates. Within the continuous condition, the participants showed best task performance and felt less disturbed by effects of the visualization during movements. Our findings provide a good basis for further evaluations of how to accelerate volume rendering in IVEs according to users’ preferences.

@article{Hanel2016,

author = { H{\"{a}}nel, Claudia and Weyers, Benjamin and Hentschel, Bernd and Kuhlen, Torsten W.},

doi = {10.1109/TVCG.2016.2518338},

issn = {10772626},

journal = {IEEE Transactions on Visualization and Computer Graphics},

number = {4},

pages = {1472--1481},

pmid = {26780811},

title = {{Visual Quality Adjustment for Volume Rendering in a Head-Tracked Virtual Environment}},

volume = {22},

year = {2016}

}

Design and Evaluation of Data Annotation Workflows for CAVE-like Virtual Environments

Data annotation finds increasing use in Virtual Reality applications with the goal to support the data analysis process, such as architectural reviews. In this context, a variety of different annotation systems for application to immersive virtual environments have been presented. While many interesting interaction designs for the data annotation workflow have emerged from them, important details and evaluations are often omitted. In particular, we observe that the process of handling metadata to interactively create and manage complex annotations is often not covered in detail. In this paper, we strive to improve this situation by focusing on the design of data annotation workflows and their evaluation. We propose a workflow design that facilitates the most important annotation operations, i.e., annotation creation, review, and modification. Our workflow design is easily extensible in terms of supported annotation and metadata types as well as interaction techniques, which makes it suitable for a variety of application scenarios. To evaluate it, we have conducted a user study in a CAVE-like virtual environment in which we compared our design to two alternatives in terms of a realistic annotation creation task. Our design obtained good results in terms of task performance and user experience.

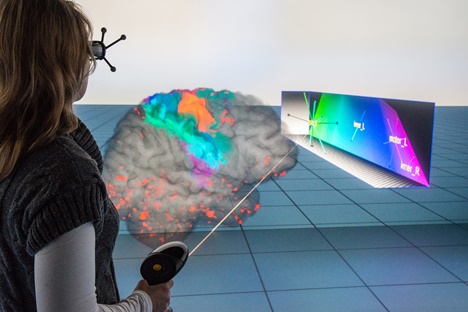

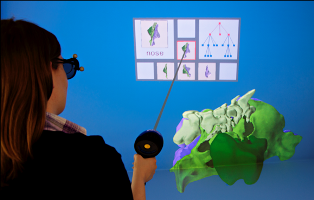

Towards the Ultimate Display for Neuroscientific Data Analysis

This article aims to stimulate a discussion about what an “ultimate” display should look like to support the Neuroscience community in an optimal way. In particular, we will have a look at immersive display technology. Since its hype in the early 90’s, immersive Virtual Reality has undoubtedly been adopted as a useful tool in a variety of application domains and has indeed proven its potential to support the process of scientific data analysis. Yet, it is still an open question whether or not such non-standard displays make sense in the context of neuroscientific data analysis. We argue that the potential of immersive displays is neither about the raw pixel count only, nor about other hardware-centric characteristics. Instead, we advocate the design of intuitive and powerful user interfaces for a direct interaction with the data, which support the multi-view paradigm in an efficient and flexible way, and, finally, provide interactive response times even for huge amounts of data and when dealing with multiple datasets simultaneously.

@InBook{Kuhlen2016,

Title = {Towards the Ultimate Display for Neuroscientific Data Analysis},

Author = {Kuhlen, Torsten Wolfgang and Hentschel, Bernd},

Editor = {Amunts, Katrin and Grandinetti, Lucio and Lippert, Thomas and Petkov, Nicolai},

Pages = {157--168},

Publisher = {Springer International Publishing},

Year = {2016},

Address = {Cham},

Booktitle = {Brain-Inspired Computing: Second International Workshop, BrainComp 2015, Cetraro, Italy, July 6-10, 2015, Revised Selected Papers},

Doi = {10.1007/978-3-319-50862-7_12},

ISBN = {978-3-319-50862-7},

Url = {http://dx.doi.org/10.1007/978-3-319-50862-7_12}

}

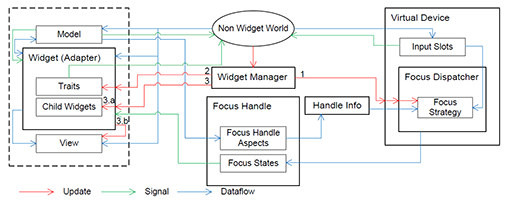

Vista Widgets: A Framework for Designing 3D User Interfaces from Reusable Interaction Building Blocks

Virtual Reality (VR) has been an active field of research for several decades, with 3D interaction and 3D User Interfaces (UIs) as important sub-disciplines. However, the development of 3D interaction techniques and in particular combining several of them to construct complex and usable 3D UIs remains challenging, especially in a VR context. In addition, there is currently only limited reusable software for implementing such techniques in comparison to traditional 2D UIs. To overcome this issue, we present ViSTA Widgets, a software framework for creating 3D UIs for immersive virtual environments. It extends the ViSTA VR framework by providing functionality to create multi-device, multi-focus-strategy interaction building blocks and means to easily combine them into complex 3D UIs. This is realized by introducing a device abstraction layer along with sophisticated focus management and functionality to create novel 3D interaction techniques and 3D widgets. We present the framework and illustrate its effectiveness with code and application examples accompanied by performance evaluations.

@InProceedings{Gebhardt2016,

Title = {{Vista Widgets: A Framework for Designing 3D User Interfaces from Reusable Interaction Building Blocks}},

Author = {Gebhardt, Sascha and Petersen-Krau, Till and Pick, Sebastian and Rausch, Dominik and Nowke, Christian and Knott, Thomas and Schmitz, Patric and Zielasko, Daniel and Hentschel, Bernd and Kuhlen, Torsten W.},

Booktitle = {Proceedings of the 22nd ACM Conference on Virtual Reality Software and Technology},

Year = {2016},

Address = {New York, NY, USA},

Pages = {251--260},

Publisher = {ACM},

Series = {VRST '16},

Acmid = {2993382},

Doi = {10.1145/2993369.2993382},

ISBN = {978-1-4503-4491-3},

Keywords = {3D interaction, 3D user interfaces, framework, multi-device, virtual reality},

Location = {Munich, Germany},

Numpages = {10},

Url = {http://doi.acm.org/10.1145/2993369.2993382}

}

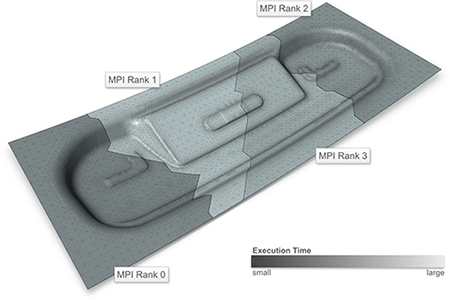

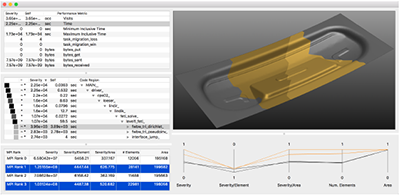

Visualizing Performance Data With Respect to the Simulated Geometry

Understanding the performance behaviour of high-performance computing (HPC) applications based on performance profiles is a challenging task. Phenomena in the performance behaviour can stem from the HPC system itself, from the application’s code, but also from the simulation domain. In order to analyse the latter phenomena, we propose a system that visualizes profile-based performance data in its spatial context in the simulation domain, i.e., on the geometry processed by the application. It thus helps HPC experts and simulation experts understand the performance data better. Furthermore, it reduces the initially large search space by automatically labeling those parts of the data that reveal variation in performance and thus require detailed analysis.

@inproceedings{VIERJAHN-2016-02,

Author = {Vierjahn, Tom and Kuhlen, Torsten W. and M\"{u}ller, Matthias S. and Hentschel, Bernd},

Booktitle = {JARA-HPC Symposium (accepted for publication)},

Title = {Visualizing Performance Data With Respect to the Simulated Geometry},

Year = {2016}}

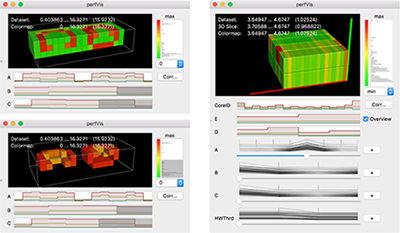

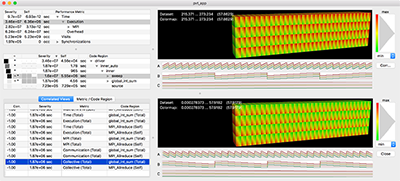

Using Directed Variance to Identify Meaningful Views in Call-Path Performance Profiles

Understanding the performance behaviour of massively parallel high-performance computing (HPC) applications based on call-path performance profiles is a time-consuming task. In this paper, we introduce the concept of directed variance in order to help analysts find performance bottlenecks in massive performance data and in the end optimize the application. According to HPC experts’ requirements, our technique automatically detects severe parts in the data that expose large variation in an application’s performance behaviour across system resources. Previously known variations are effectively filtered out. Analysts are thus guided through a reduced search space towards regions of interest for detailed examination in a 3D visualization. We demonstrate the effectiveness of our approach using performance data of common benchmark codes as well as from actively developed production codes.

@inproceedings{VIERJAHN-2016-04,

Author = {Vierjahn, Tom and Hermanns, Marc-Andr\'{e} and Mohr, Bernd and M\"{u}ller, Matthias S. and Kuhlen, Torsten W. and Hentschel, Bernd},

Booktitle = {3rd Workshop Visual Performance Analysis (to appear)},

Title = {Using Directed Variance to Identify Meaningful Views in Call-Path Performance Profiles},

Year = {2016}}

Poster: Correlating Sub-Phenomena in Performance Data in the Frequency Domain

Finding and understanding correlated performance behaviour of the individual functions of massively parallel high-performance computing (HPC) applications is a time-consuming task. In this poster, we propose filtered correlation analysis for automatically locating interdependencies in call-path performance profiles. Transforming the data into the frequency domain splits a performance phenomenon into sub-phenomena to be correlated separately. We provide the mathematical framework and an overview of the visualization, and we demonstrate the effectiveness of our technique.

Best Poster Award!

@inproceedings{Vierjahn-2016-03,

Author = {Vierjahn, Tom and Hermanns, Marc-Andr\'{e} and Mohr, Bernd and M\"{u}ller, Matthias S. and Kuhlen, Torsten W. and Hentschel, Bernd},

Booktitle = {LDAV 2016 -- Posters (accepted)},

Date-Added = {2016-08-31 22:14:47 +0000},

Date-Modified = {2016-08-31 22:15:58 +0000},

Title = {Correlating Sub-Phenomena in Performance Data in the Frequency Domain}

}

Poster: Formal Evaluation Strategies for Feature Tracking

We present an approach for tracking space-filling features based on a two-step algorithm utilizing two graph optimization techniques. First, one-to-one assignments between successive time steps are found by a matching on a weighted, bi-partite graph. Second, events are detected by computing an independent set on potential event explanations. The main objective of this work is investigating options for formal evaluation of complex feature tracking algorithms in the absence of ground truth data.
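The second step described above, selecting a conflict-free subset of event explanations, can be illustrated with a small sketch. It is a simple greedy heuristic for a weighted independent set, not the authors' implementation; the explanation names, weights, and conflict pairs are hypothetical and exist only for illustration.

```python
def greedy_independent_set(weights, conflicts):
    """Pick mutually non-conflicting nodes, heaviest first (heuristic).

    weights:   dict mapping an explanation name to its (hypothetical) score
    conflicts: iterable of (a, b) pairs that must not both be selected
    """
    neighbours = {node: set() for node in weights}
    for a, b in conflicts:
        neighbours[a].add(b)
        neighbours[b].add(a)

    chosen = []
    blocked = set()
    # Visit nodes by descending weight; ties are broken by name so the
    # result is deterministic.
    for node in sorted(weights, key=lambda n: (-weights[n], n)):
        if node in blocked:
            continue
        chosen.append(node)
        blocked.add(node)
        blocked |= neighbours[node]  # neighbours can no longer be chosen
    return chosen


# Hypothetical candidate explanations for one pair of time steps.
explanations = {
    "merge(A,B->C)": 0.9,
    "split(A->C,D)": 0.6,
    "continue(B->D)": 0.5,
    "birth(E)": 0.2,
}
conflicts = [
    ("merge(A,B->C)", "split(A->C,D)"),
    ("merge(A,B->C)", "continue(B->D)"),
    ("split(A->C,D)", "continue(B->D)"),
]
selected = greedy_independent_set(explanations, conflicts)
print(selected)
```

A greedy pass like this gives no optimality guarantee in general; it only shows how conflicts between explanations restrict which events can be reported together.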

@INPROCEEDINGS{Schnorr2016, author = {Andrea Schnorr and Sebastian Freitag and Dirk Helmrich and Torsten W. Kuhlen and Bernd Hentschel}, title = {{F}ormal {E}valuation {S}trategies for {F}eature {T}racking}, booktitle = Proc # { the } # LDAV, year = {2016}, pages = {103--104}, abstract = { We present an approach for tracking space-filling features based on a two-step algorithm utilizing two graph optimization techniques. First, one-to-one assignments between successive time steps are found by a matching on a weighted, bi-partite graph. Second, events are detected by computing an independent set on potential event explanations. The main objective of this work is investigating options for formal evaluation of complex feature tracking algorithms in the absence of ground truth data.

}, doi = { 10.1109/LDAV.2016.7874339}}

Poster: Geometry-Aware Visualization of Performance Data

Phenomena in the performance behaviour of high-performance computing (HPC) applications can stem from the HPC system itself, from the application's code, but also from the simulation domain. In order to analyse the latter phenomena, we propose a system that visualizes profile-based performance data in its spatial context, i.e., on the geometry, in the simulation domain. It thus helps HPC experts but also simulation experts understand the performance data better. In addition, our tool reduces the initially large search space by automatically labelling large-variation views on the data which require detailed analysis.

@inproceedings {eurp.20161136,

booktitle = {EuroVis 2016 - Posters},

editor = {Tobias Isenberg and Filip Sadlo},

title = {{Geometry-Aware Visualization of Performance Data}},

author = {Vierjahn, Tom and Hentschel, Bernd and Kuhlen, Torsten W.},

year = {2016},

publisher = {The Eurographics Association},

ISBN = {978-3-03868-015-4},

DOI = {10.2312/eurp.20161136},

pages = {37--39}

}

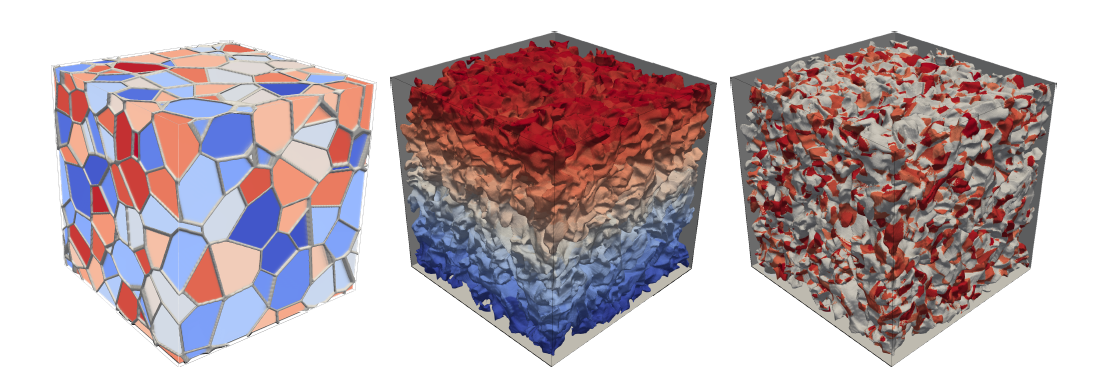

Poster: Tracking Space-Filling Features by Two-Step Optimization

We present a novel approach for tracking space-filling features, i.e., a set of features covering the entire domain. The assignment between successive time steps is determined by a two-step, global optimization scheme. First, a maximum-weight, maximal matching on a bi-partite graph is computed to provide one-to-one assignments between features of successive time steps. Second, events are detected in a subsequent step; here the matching step serves to restrict the exponentially large set of potential solutions. To this end, we compute an independent set on a graph representing conflicting event explanations. The method is evaluated by tracking dissipation elements, a structure definition from turbulent flow analysis.

Honorable Mention Award!

@inproceedings {eurp.20161146,

booktitle = {EuroVis 2016 - Posters},

editor = {Tobias Isenberg and Filip Sadlo},

title = {{Tracking Space-Filling Features by Two-Step Optimization}},

author = {Schnorr, Andrea and Freitag, Sebastian and Kuhlen, Torsten W. and Hentschel, Bernd},

year = {2016},

publisher = {The Eurographics Association},

pages = {77--79},

ISBN = {978-3-03868-015-4},

DOI = {10.2312/eurp.20161146}

}

Integrating Visualizations into Modeling NEST Simulations

Modeling large-scale spiking neural networks showing realistic biological behavior in their dynamics is a complex and tedious task. Since these networks consist of millions of interconnected neurons, their simulation produces an immense amount of data. In recent years it has become possible to simulate even larger networks. However, solutions to assist researchers in understanding the simulation's complex emergent behavior by means of visualization are still lacking. While developing tools to partially fill this gap, we encountered the challenge of integrating these tools easily into the neuroscientists' daily workflow. To understand what makes this so challenging, we looked into the workflows of our collaborators and analyzed how they use the visualizations to solve their daily problems. We identified two major issues: first, the analysis process can rapidly change focus, which requires switching to the visualization tool that assists in the current problem domain. Second, because of the heterogeneous data that results from simulations, researchers want to relate the data in order to investigate them effectively. Since a monolithic application model, processing and visualizing all data modalities and reflecting all combinations of possible workflows in a holistic way, is most likely impossible to develop and to maintain, a software architecture that offers specialized visualization tools that run simultaneously and can be linked together to reflect the current workflow is a more feasible approach. To this end, we have developed a software architecture that allows neuroscientists to integrate visualization tools more closely into the modeling tasks. In addition, it forms the basis for semantic linking of different visualizations to reflect the current workflow. In this paper, we present this architecture and substantiate the usefulness of our approach by common use cases we encountered in our collaborative work.

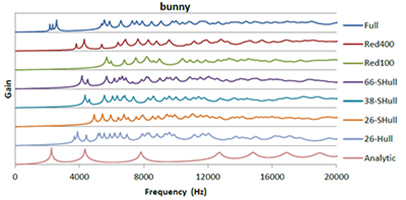

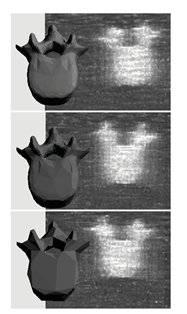

Level-of-Detail Modal Analysis for Real-time Sound Synthesis

Modal sound synthesis is a promising approach for real-time physically-based sound synthesis. A modal analysis is used to compute characteristic vibration modes from the geometry and material properties of scene objects. These modes allow an efficient sound synthesis at run-time, but the analysis is computationally expensive and thus typically computed in a pre-processing step. In interactive applications, however, objects may be created or modified at run-time. Unless the new shapes are known upfront, the modal data cannot be pre-computed and thus a modal analysis has to be performed at run-time. In this paper, we present a system to compute modal sound data at run-time for interactive applications. We evaluate the computational requirements of the modal analysis to determine the computation time for objects of different complexity. Based on these limits, we propose using different levels-of-detail for the modal analysis, using different geometric approximations that trade speed for accuracy, and evaluate the errors introduced by lower-resolution results. Additionally, we present an asynchronous architecture to distribute and prioritize modal analysis computations.

@inproceedings {vriphys.20151335,

booktitle = {Workshop on Virtual Reality Interaction and Physical Simulation},

editor = {Fabrice Jaillet and Florence Zara and Gabriel Zachmann},

title = {{Level-of-Detail Modal Analysis for Real-time Sound Synthesis}},

author = {Rausch, Dominik and Hentschel, Bernd and Kuhlen, Torsten W.},

year = {2015},

publisher = {The Eurographics Association},

ISBN = {978-3-905674-98-9},

DOI = {10.2312/vriphys.20151335},

pages = {61--70}

}

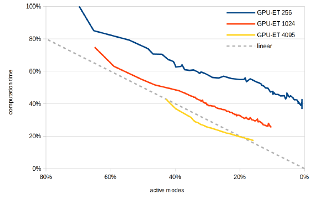

Packet-Oriented Streamline Tracing on Modern SIMD Architectures

The advection of integral lines is an important computational kernel in vector field visualization. We investigate how this kernel can profit from vector (SIMD) extensions in modern CPUs. As a baseline, we formulate a streamline tracing algorithm that facilitates auto-vectorization by an optimizing compiler. We analyze this algorithm and propose two different optimizations. Our results show that particle tracing does not per se benefit from SIMD computation. Based on a careful analysis of the auto-vectorized code, we propose an optimized data access routine and a re-packing scheme which increases average SIMD efficiency. We evaluate our approach on three different, turbulent flow fields. Our optimized approaches increase integration performance up to 5.6x over our baseline measurement. We conclude with a discussion of current limitations and aspects for future work.

@INPROCEEDINGS{Hentschel2015,

author = {Bernd Hentschel and Jens Henrik G{\"o}bbert and Michael Klemm and

Paul Springer and Andrea Schnorr and Torsten W. Kuhlen},

title = {{P}acket-{O}riented {S}treamline {T}racing on {M}odern {SIMD}

{A}rchitectures},

booktitle = {Proceedings of the Eurographics Symposium on Parallel Graphics

and Visualization},

year = {2015},

pages = {43--52},

abstract = {The advection of integral lines is an important computational

kernel in vector field visualization. We investigate

how this kernel can profit from vector (SIMD) extensions in modern CPUs. As a

baseline, we formulate a streamline

tracing algorithm that facilitates auto-vectorization by an optimizing compiler.

We analyze this algorithm and

propose two different optimizations. Our results show that particle tracing does

not per se benefit from SIMD computation.

Based on a careful analysis of the auto-vectorized code, we propose an optimized

data access routine

and a re-packing scheme which increases average SIMD efficiency. We evaluate our

approach on three different,

turbulent flow fields. Our optimized approaches increase integration performance

up to 5.6x over our baseline

measurement. We conclude with a discussion of current limitations and aspects

for future work.}

}

Poster: Scalable Metadata In- and Output for Multi-platform Data Annotation Applications

Metadata in- and output are important steps within the data annotation process. However, selecting techniques that effectively facilitate these steps is non-trivial, especially for applications that have to run on multiple virtual reality platforms. Not all techniques are applicable to or available on every system, so workflows have to be adapted on a per-system basis. Here, we describe a metadata handling system based on Android's Intent system that automatically adapts workflows and thereby makes manual adaptation unnecessary.

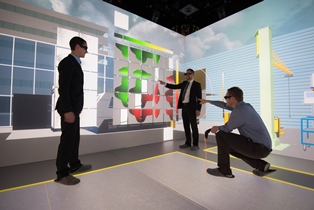

Poster: flapAssist: How the Integration of VR and Visualization Tools Fosters the Factory Planning Process

Virtual Reality (VR) systems are of growing importance to aid decision support in the context of the digital factory, especially factory layout planning. While current solutions focus either on virtual walkthroughs or on the visualization of more abstract information, a solution that provides both does not currently exist. To close this gap, we present a holistic VR application, called Factory Layout Planning Assistant (flapAssist). It is meant to serve as a platform for planning the layout of factories, while also providing a wide range of analysis features. By being scalable from desktops to CAVEs and providing a link to a central integration platform, flapAssist integrates well in established factory planning workflows.

Poster: Tracking Space-Filling Structures in Turbulent Flows

We present a novel approach for tracking space-filling features, i.e. a set of features which covers the entire domain. In contrast to previous work, we determine the assignment between features from successive time steps by computing a globally optimal, maximum-weight, maximal matching on a weighted, bi-partite graph. We demonstrate the method's functionality by tracking dissipation elements (DEs), a space-filling structure definition from turbulent flow analysis. The ability to track DEs over time enables researchers from fluid mechanics to extend their analysis beyond the assessment of static flow fields to time-dependent settings.
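As a rough illustration of the matching step described above, the following sketch finds a maximum-weight one-to-one assignment between the features of two time steps. It uses exhaustive search and is therefore only usable for a handful of features; it is not the authors' implementation, and the overlap scores are hypothetical. A practical implementation would use a polynomial-time assignment algorithm instead.

```python
from itertools import permutations


def max_weight_matching(weights):
    """Return (total, pairs) of the best one-to-one assignment.

    weights[i][j] is a hypothetical overlap score between feature i at
    time step t and feature j at time step t+1; 0 means "no edge".
    """
    n_rows = len(weights)
    n_cols = len(weights[0]) if weights else 0
    n = max(n_rows, n_cols)
    # Pad to a square matrix with zero-weight dummy entries so that every
    # permutation of columns describes a complete assignment.
    padded = [
        [weights[i][j] if i < n_rows and j < n_cols else 0.0 for j in range(n)]
        for i in range(n)
    ]

    best_total, best_pairs = -1.0, []
    for perm in permutations(range(n)):
        total = sum(padded[i][perm[i]] for i in range(n))
        if total > best_total:
            best_total = total
            # Report only real edges, i.e. pairs with positive weight.
            best_pairs = [(i, perm[i]) for i in range(n) if padded[i][perm[i]] > 0]
    return best_total, best_pairs


# Two features at time t, three at time t+1 (hypothetical overlap scores).
overlap = [
    [5.0, 1.0, 0.0],
    [4.0, 3.0, 0.0],
]
total, pairs = max_weight_matching(overlap)
print(total, pairs)
```

Here the third feature of the later time step stays unmatched; under the two-step scheme described in the related entries, such leftovers would be candidates for event detection.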

@INPROCEEDINGS{Schnorr2015,

author = {Andrea Schnorr and Jens-Henrik Goebbert and Torsten W. Kuhlen and Bernd Hentschel},

title = {{T}racking {S}pace-{F}illing {S}tructures in {T}urbulent {F}lows},

booktitle = Proc # { the } # LDAV,

year = {2015},

pages = {143--144},

abstract = {We present a novel approach for tracking space-filling features, i.e. a set of features which covers the entire domain. In contrast to previous work, we determine the assignment between features from successive time steps by computing a globally optimal, maximum-weight, maximal matching on a weighted, bi-partite graph. We demonstrate the method's functionality by tracking dissipation elements (DEs), a space-filling structure definition from turbulent flow analysis. The ability to track DEs over time enables researchers from fluid mechanics to extend their analysis beyond the assessment of static flow fields to time-dependent settings.},

doi = {10.1109/LDAV.2015.7348089},

keywords = {Feature Tracking, Weighted, Bi-Partite Matching, Flow

Visualization, Dissipation Elements}

}

Ein Konzept zur Integration von Virtual Reality Anwendungen zur Verbesserung des Informationsaustauschs im Fabrikplanungsprozess - A Concept for the Integration of Virtual Reality Applications to Improve the Information Exchange within the Factory Planning Process

Factory planning is a highly heterogeneous process that involves various expert groups at the same time. In this context, the communication between different expert groups poses a major challenge. One reason for this lies in the differing domain knowledge of individual groups. However, since decisions made within one domain usually have an effect on others, it is essential to make these domain interactions visible to all involved experts in order to improve the overall planning process. In this paper, we present a concept that facilitates the integration of two separate virtual-reality- and visualization analysis tools for different application domains of the planning process. The concept was developed in context of the Virtual Production Intelligence and aims at creating an approach to making domain interactions visible, such that the aforementioned challenges can be mitigated.

@Article{Pick2015,

Title = {“Ein Konzept zur Integration von Virtual Reality Anwendungen zur Verbesserung des Informationsaustauschs im Fabrikplanungsprozess”},

Author = {S. Pick and S. Gebhardt and B. Hentschel and T. W. Kuhlen and R. Reinhard and C. Büscher and T. Al Khawli and U. Eppelt and H. Voet and J. Utsch},

Journal = {Tagungsband 12. Paderborner Workshop Augmented \& Virtual Reality in der Produktentstehung},

Year = {2015},

Pages = {139--152}

}

Ein Ansatz zur Softwaretechnischen Integration von Virtual Reality Anwendungen am Beispiel des Fabrikplanungsprozesses - An Approach for the Softwaretechnical Integration of Virtual Reality Applications by the Example of the Factory Planning Process

The integration of independent applications is a complex task from a software engineering perspective. Nonetheless, it entails significant benefits, especially in the context of Virtual Reality (VR) supported factory planning, e.g., to communicate interdependencies between different domains. To emphasize this aspect, we integrated two independent VR and visualization applications into a holistic planning solution. Special focus was put on parallelization and interaction aspects, while also considering more general requirements of such an integration process. In summary, we present technical solutions for the effective integration of several VR applications into a holistic solution, exemplified by two applications from the context of factory planning. The effectiveness of the approach is demonstrated by performance measurements.

@Article{Gebhardt2015,

Title = {“Ein Ansatz zur Softwaretechnischen Integration von Virtual Reality Anwendungen am Beispiel des Fabrikplanungsprozesses”},

Author = {S. Gebhardt and S. Pick and B. Hentschel and T. W. Kuhlen and R. Reinhard and C. Büscher},

Journal = {Tagungsband 12. Paderborner Workshop Augmented \& Virtual Reality in der Produktentstehung},

Year = {2015},

Pages = {153--166}

}

Efficient Modal Sound Synthesis on GPUs

Modal sound synthesis is a useful method to interactively generate sounds for Virtual Environments. Forces acting on objects excite modes, which then have to be accumulated to generate the output sound. Due to the high audio sampling rate, algorithms using the CPU typically can handle only a few actively sounding objects. Additionally, force excitation should be applied at a high sampling rate. We present different algorithms to compute the synthesized sound using a GPU, and compare them to CPU implementations. The GPU algorithms show significantly higher performance and allow many objects to sound simultaneously.
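The accumulation of modes mentioned above is commonly modelled as a sum of exponentially damped sinusoids. The sketch below shows that textbook formulation on the CPU for a single impulse; it is not the paper's GPU algorithm, and the mode parameters (frequency, damping, amplitude) are hypothetical values chosen for illustration.

```python
import math


def synthesize_modes(modes, sample_rate, num_samples):
    """Sum damped sinusoids, one per mode, into a mono sample buffer.

    modes: list of (frequency_hz, damping_per_second, amplitude) tuples
    """
    out = [0.0] * num_samples
    for freq, damping, amp in modes:
        for n in range(num_samples):
            t = n / sample_rate
            # Each mode decays exponentially and oscillates at its own
            # frequency; modes are independent of one another.
            out[n] += amp * math.exp(-damping * t) * math.sin(2.0 * math.pi * freq * t)
    return out


# Two hypothetical modes of a struck object, rendered for 0.1 s at 44.1 kHz.
modes = [(440.0, 6.0, 1.0), (1210.0, 9.0, 0.4)]
samples = synthesize_modes(modes, sample_rate=44100, num_samples=4410)
print(len(samples), max(abs(s) for s in samples))
```

Because every mode contributes independently to every sample, the work grows with the number of modes times the sampling rate, which is the kind of workload that lends itself to parallel evaluation.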

Quo Vadis CAVE – Does Immersive Visualization Still Matter?

More than two decades have passed since the introduction of the CAVE (Cave Automatic Virtual Environment), a landmark in the development of VR [1]. The CAVE addressed two major issues with head-mounted displays of the era. First, it provided an unprecedented field of view, greatly improving the feeling of presence in a virtual environment (VE). Second, this feeling was amplified because users didn’t have to rely on a virtual representation of their own bodies or parts thereof. Instead, they could physically enter the virtual space. Scientific visualization had been promulgated as a killer app for VR technology almost from day one. With the CAVE’s inception, it became possible to “put users within their data.” Proponents predicted two key advantages. First, immersive VR promised faster, more comprehensive understanding of complex, spatial relationships owing to head-tracked, stereoscopic rendering. Second, it would provide a more natural user interface, specifically for spatial interaction. In a seminal article, Andy van Dam and his colleagues proposed VR-enabled visualization as a midterm solution to the “accelerating data crisis” [2]. That is, the ability to generate data had for some time outpaced the ability to analyze it. Over the years, a number of studies have investigated the effects of VR-based visualizations in specific application scenarios. Recently, Bireswar Laha and his colleagues provided more general, empirical evidence for its benefits. Although VR and scientific visualization have matured and many of the original technical limitations have been resolved, immersive visualization has yet to find the widespread, everyday use that was claimed in the early days. At the same time, the demand for scalable visualization solutions is greater than ever. If anything, the gap between data generation and analysis capabilities has widened even more. So, two questions arise.
What should such scalable solutions look like, and what requirements arise regarding the underlying hardware and software and the overall methodology?

A 3D Collaborative Virtual Environment to Integrate Immersive Virtual Reality into Factory Planning Processes

In the recent past, efforts have been made to adopt immersive virtual reality (IVR) systems as a means for design reviews in factory layout planning. While several solutions for this scenario have been developed, their integration into existing planning workflows has not been discussed yet. From our own experience of developing such a solution, we conclude that the use of IVR systems, such as CAVEs, is rather disruptive to existing workflows. One major reason for this is that IVR systems are not available everywhere due to their high costs and large physical footprint. As a consequence, planners have to travel to sites offering such systems, which is especially prohibitive as planners are usually geographically dispersed. In this paper, we present a concept for integrating IVR systems into the factory planning process by means of a 3D collaborative virtual environment (3DCVE) without disrupting the underlying planning workflow. The goal is to combine non-immersive and IVR systems to facilitate collaborative walkthrough sessions. However, this scenario poses unique challenges to interactive collaborative work that to the best of our knowledge have not been addressed so far. In this regard, we discuss approaches to viewpoint sharing, telepointing and annotation support that are geared towards distributed heterogeneous 3DCVEs.

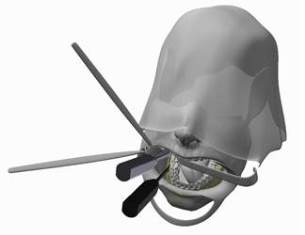

Data-flow Oriented Software Framework for the Development of Haptic-enabled Physics Simulations

This paper presents a software framework that supports the development of haptic-enabled physics simulations. The framework provides tools aiming to facilitate a fast prototyping process by utilizing component and flow-oriented architectures, while maintaining the capability to create efficient code which fulfills the performance requirements induced by the target applications. We argue that such a framework should not only ease the creation of prototypes but also help to effectively and efficiently evaluate them. To this end, we provide analysis tools and the possibility to build problem oriented evaluation environments based on the described software concepts. As motivating use case, we present a project with the goal to develop a haptic-enabled medical training simulator for a maxillofacial procedure. With this example, we demonstrate how the described framework can be used to create a simulation architecture for a complex haptic simulation and how the tools assist in the prototyping process.

An Evaluation of a Smart-Phone-Based Menu System for Immersive Virtual Environments

System control is a crucial task for many virtual reality applications and can be realized in a broad variety of ways, the most common of which is the use of graphical menus. These are often implemented as part of the virtual environment, but can also be displayed on mobile devices. Until now, many systems and studies have been published on using mobile devices such as personal digital assistants (PDAs) to realize such menu systems. However, most of these systems were proposed long before smartphones existed and evolved into everyday companions for many people. Thus, it is worthwhile to evaluate the applicability of modern smartphones as carriers of menu systems for immersive virtual environments. To do so, we implemented a platform-independent menu system for smartphones and evaluated it in two different ways. First, we performed an expert review in order to identify potential design flaws and to test the applicability of the approach for demonstrations of VR applications from a demonstrator's point of view. Second, we conducted a user study with 21 participants to test user acceptance of the menu system. The results of the two studies were contradictory: while experts appreciated the system very much, user acceptance was lower than expected. From these results we could draw conclusions on how smartphones should be used to realize system control in virtual environments and we could identify connecting factors for future research on the topic.

Integration of VR and Visualization Tools to Foster the Factory Planning Process

Recently, virtual reality (VR) and visualization have been increasingly employed to facilitate various tasks in factory planning processes. One major challenge in this context lies in the exchange of information between expert groups concerned with distinct planning tasks in order to make planners aware of inter-dependencies. For example, changes to the configuration of individual machines can have an effect on the overall production performance and vice versa. To this end, we developed VR- and visualization-based planning tools for two distinct planning tasks for which we present an integration concept that facilitates information exchange between these tools. The first application's goal is to facilitate layout planning by means of a CAVE system. The high degree of immersion offered by this system allows users to judge spatial relations in entire factories through cost-effective virtual walkthroughs. Additionally, information like material flow data can be visualized within the virtual environment to further assist planners to comprehensively evaluate the factory layout. Another application focuses on individual machines with the goal to help planners find ideal configurations by providing a visualization solution to explore the multi-dimensional parameter space of a single machine. This is made possible through the use of meta-models of the parameter space that are then visualized by means of the concept of Hyperslice. In this paper we present a concept that shows how these applications can be integrated into one comprehensive planning tool that allows for planning factories while considering factors of different planning levels at the same time. The concept is backed by Virtual Production Intelligence (VPI), which integrates data from different levels of factory processes, while including additional data sources and algorithms to provide further information to be used by the applications. 
In conclusion, we present an integration concept for VR- and visualization-based software tools that facilitates the communication of interdependencies between different factory planning tasks. As the first steps towards creating a comprehensive factory planning solution, we demonstrate the integration of the aforementioned two use-cases by applying VPI. Finally, we review the proposed concept by discussing its benefits and pointing out potential implementation pitfalls.

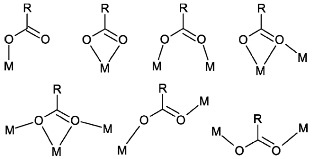

An Unusual Linker and an Unexpected Node: CaCl2 Dumbbells Linked by Proline to Form Square Lattice Networks

Four new structures based on CaCl2 and proline are reported, all with an unusual Cl–Ca–Cl moiety. Depending on the stoichiometry and the chirality of the amino acid, this metal dihalide fragment represents the core of a mononuclear Ca complex or may be linked by the carboxylate to form extended structures. A cisoid coordination of the halide atoms at the calcium cation is encountered in a chain polymer. In the 2D structures, CaCl2 dumbbells act as nodes and are crosslinked by either enantiomerically pure or racemic proline to form square lattice nets. Extensive database searches and topology tests prove that this structure type is rare for MCl2 dumbbells in general and unprecedented for Ca compounds.

@Article{Lamberts2014,

Title = {{An Unusual Linker and an Unexpected Node: CaCl2 Dumbbells Linked by Proline to Form Square Lattice Networks}},

Author = {Lamberts, Kevin and Porsche, Sven and Hentschel, Bernd and Kuhlen, Torsten and Englert, Ulli},

Journal = {CrystEngComm},

Year = {2014},

Pages = {3305-3311},

Volume = {16},

Doi = {10.1039/C3CE42357C},

Issue = {16},

Publisher = {The Royal Society of Chemistry},

Url = {http://dx.doi.org/10.1039/C3CE42357C}

}

The Human Brain Project - Chances and Challenges for Cognitive Systems

The Human Brain Project (HBP) is one of the largest scientific initiatives dedicated to the research of the human brain worldwide. Over 80 research groups from a broad variety of scientific areas, such as neuroscience, simulation science, high performance computing, robotics, and visualization work together in this European research initiative. The work at hand identifies certain chances and challenges for cognitive systems engineering resulting from the HBP research activities. Besides the main goal of the HBP, gathering deeper insights into the structure and function of the human brain, cognitive system research can directly benefit from the creation of cognitive architectures, the simulation of neural networks, and the application of these in the context of (neuro-)robotics. Nevertheless, challenges arise regarding the utilization and transformation of these research results for cognitive systems, which will be discussed in this paper. Tools necessary to cope with these challenges are visualization techniques helping to understand and gain insights into complex data. Therefore, this paper presents a set of visualization techniques developed at the Virtual Reality Group at the RWTH Aachen University.

@inproceedings{Weyers2014,

author = {Weyers, Benjamin and Nowke, Christian and H{\"{a}}nel, Claudia and Zielasko, Daniel and Hentschel, Bernd and Kuhlen, Torsten},

booktitle = {Workshop Kognitive Systeme: Mensch, Teams, Systeme und Automaten},

title = {{The Human Brain Project – Chances and Challenges for Cognitive Systems}},

year = {2014}

}

Interactive Volume Rendering for Immersive Virtual Environments

Immersive virtual environments (IVEs) are an appropriate platform for 3D data visualization and exploration as, for example, the spatial understanding of these data is facilitated by stereo technology. However, in comparison to desktop setups a lower latency and thus a higher frame rate is mandatory. In this paper we argue that current realizations of direct volume rendering do not allow for a desirable visualization w.r.t. latency and visual quality that do not impair the immersion in virtual environments. To this end, we analyze published acceleration techniques and discuss their potential in IVEs; furthermore, head tracking is considered as a main challenge but also a starting point for specific optimization techniques.

@inproceedings{Hanel2014,

author = {H{\"{a}}nel, Claudia and Weyers, Benjamin and Hentschel, Bernd and Kuhlen, Torsten W.},

booktitle = {IEEE VIS International Workshop on 3DVis: Does 3D really make sense for Data Visualization?},

title = {{Interactive Volume Rendering for Immersive Virtual Environments}},

year = {2014}

}

Visualization of Memory Access Behavior on Hierarchical NUMA Architectures

The available memory bandwidth of existing high performance computing platforms is increasingly becoming the limiting factor for various applications. Therefore, modern microarchitectures integrate the memory controller on the processor chip, which leads to a non-uniform memory access behavior of such systems. This access behavior in turn entails major challenges in the development of shared memory parallel applications. An improperly implemented memory access functionality results in a poor ratio between local and remote memory accesses and causes low performance on such architectures. To address this problem, the developers of such applications rely on tools to make these kinds of performance problems visible. This work presents a new tool for the visualization of performance data of the non-uniform memory access behavior. Because of the visual design of the tool, the developer is able to judge the severity of remote memory access in a time-dependent simulation, which is currently not possible using existing tools.

Poster: Visualizing Geothermal Simulation Data with Uncertainty

Simulations of geothermal reservoirs inherently contain uncertainty due to the fact that the underlying physical models are created from sparse data. Moreover, this uncertainty often cannot be completely expressed by simple key measures (e.g., mean and standard deviation), as the distribution of possible values is often not unimodal. Nevertheless, existing visualizations of these simulation data often completely neglect displaying the uncertainty, or are limited to a mean/variance representation. We present an approach to visualize geothermal simulation data that deals with both cases: scalar uncertainties as well as general ensembles of data sets. Users can interactively define two-dimensional transfer functions to visualize data and uncertainty values directly, or browse a 2D scatter plot representation to explore different possibilities in an ensemble.

Poster: Guided Tour Creation in Immersive Virtual Environments

Guided tours have been found to be a good approach to introducing users to previously unknown virtual environments and to giving them access to relevant points of interest. Two important tasks during the creation of guided tours are the definition of views onto relevant information and their arrangement into the order in which they are to be visited. To allow a maximum of flexibility, an interactive approach to these tasks is desirable. To this end, we present and evaluate two approaches to these interaction tasks in this paper. The first approach is a hybrid 2D/3D interaction metaphor in which a tracked tablet PC is used as a virtual digital camera that allows users to specify and order views onto the scene. The second one is a purely 3D version of the first one, which does not require a tablet PC. Both approaches were compared in an initial user study, whose results indicate a superiority of the 3D over the hybrid approach.

@InProceedings{Pick2014,

Title = {{P}oster: {G}uided {T}our {C}reation in {I}mmersive {V}irtual {E}nvironments},

Author = {Sebastian Pick and Andreas B\"{o}nsch and Irene Tedjo-Palczynski and Bernd Hentschel and Torsten Kuhlen},

Booktitle = {IEEE Symposium on 3D User Interfaces (3DUI), 2014},

Year = {2014},

Month = {March},

Pages = {151-152},

Doi = {10.1109/3DUI.2014.6798865},

Url = {http://ieeexplore.ieee.org/xpl/abstractReferences.jsp?arnumber=6798865}

}

Poster: Interactive 3D Force-Directed Edge Bundling on Clustered Edges

Graphs play an important role in data analysis. In particular, graphs with a natural spatial embedding can benefit from a 3D visualization. But even more than in 2D, graphs visualized as intuitively readable 3D node-link diagrams can become very cluttered. This makes graph exploration and data analysis difficult. For this reason, we focus on the challenge of reducing edge clutter by utilizing edge bundling. In this paper, we introduce a parallel, edge-cluster-based accelerator for the force-directed edge bundling algorithm presented in [Holten2009]. This opens up the possibility of user interaction during and after both the clustering and the bundling.
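The following is a simplified sketch of one force-directed bundling iteration, loosely following the general idea of the technique: subdivision points are pulled toward their neighbors on the same edge by springs and toward the corresponding points on other edges by an attracting force. It omits edge compatibility measures and any parallelism, and restricting interactions to the edges of one cluster is an assumption about how clustering might reduce work, not the implementation from the paper. The names `subdivide` and `bundle_step` and all parameter values are hypothetical.

```python
def subdivide(p0, p1, n):
    """Return n + 2 evenly spaced 3D points from p0 to p1 (endpoints included)."""
    return [tuple(a + (b - a) * t / (n + 1) for a, b in zip(p0, p1))
            for t in range(n + 2)]


def bundle_step(edges, k_spring=0.1, step=0.05, eps=1e-9):
    """One iteration over the polylines of a single cluster.

    edges -- list of polylines; all must have the same number of points.
    Only edges passed in together attract each other (assumed cluster scope).
    """
    new_edges = []
    for e_idx, pts in enumerate(edges):
        moved = [pts[0]]  # end points stay fixed
        for i in range(1, len(pts) - 1):
            p = pts[i]
            # Spring force toward the two neighbors on the same edge.
            force = [k_spring * ((pts[i - 1][d] - p[d]) + (pts[i + 1][d] - p[d]))
                     for d in range(3)]
            # Attraction toward the corresponding point on every other edge,
            # with magnitude 1 / distance.
            for o_idx, other in enumerate(edges):
                if o_idx == e_idx:
                    continue
                diff = [other[i][d] - p[d] for d in range(3)]
                dist2 = sum(c * c for c in diff)
                if dist2 > eps:
                    for d in range(3):
                        force[d] += diff[d] / dist2
            moved.append(tuple(p[d] + step * force[d] for d in range(3)))
        moved.append(pts[-1])
        new_edges.append(moved)
    return new_edges


# Two parallel edges one unit apart; their interior points move closer together.
edge_a = subdivide((0, 0, 0), (4, 0, 0), 3)
edge_b = subdivide((0, 1, 0), (4, 1, 0), 3)
bundled = bundle_step([edge_a, edge_b])
```

Because each cluster is processed independently in this sketch, separate clusters could be handled in parallel, which is the kind of structure an accelerator can exploit.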

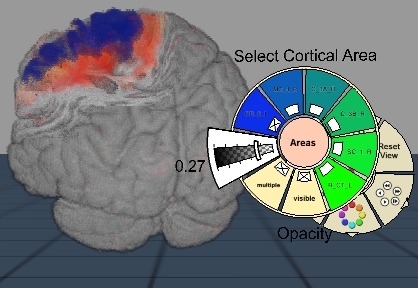

Interactive Definition of Discrete Color Maps for Volume Rendered Data in Immersive Virtual Environments

The visual discrimination of different structures in one or multiple combined volume data sets is generally done with individual transfer functions that can usually be adapted interactively. Immersive virtual environments support depth perception and thus spatial orientation in these volume visualizations. However, complex 2D menus for elaborate transfer function design cannot be easily integrated. We therefore present an approach for changing the color mapping during volume exploration with direct volume interaction and an additional 3D widget. In this way, we incorporate the modification of a color mapping for a large number of discretely labeled brain areas into the virtual environment in an intuitive way. We apply our approach to data from a patient with a degenerative brain tissue disease, allowing an interactive analysis of the affected regions.

@inproceedings{Hanel2014a,

address = {Minneapolis},

author = {H{\"{a}}nel, Claudia and Freitag, Sebastian and Hentschel, Bernd and Kuhlen, Torsten},

booktitle = {2nd International Workshop on Immersive Volumetric Interaction (WIVI 2014) at IEEE Virtual Reality 2014},

editor = {Banic, Amy and O'Leary, Patrick and Laha, Bireswar},

title = {{Interactive Definition of Discrete Color Maps for Volume Rendered Data in Immersive Virtual Environments}},

year = {2014}

}

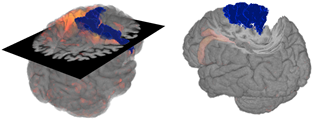

Interactive 3D Visualization of Structural Changes in the Brain of a Person With Corticobasal Syndrome

The visualization of the progression of brain tissue loss in neurodegenerative diseases like corticobasal syndrome (CBS) not only provides information about the localization and distribution of the volume loss, but also helps to understand the course and the causes of this neurodegenerative disorder. The visualization of such medical imaging data is often based on 2D sections, because they show both internal and external structures in one image. Spatial information, however, is lost. 3D visualization of imaging data can solve this problem, but it faces the difficulty that more internally located structures may be occluded by structures near the surface. Here, we present an application with two designs for the 3D visualization of the human brain to address these challenges. In the first design, brain anatomy is displayed semi-transparently; it is supplemented by an anatomical section and cortical areas for spatial orientation, and by the volumetric data of volume loss. The second design is guided by the principle of importance-driven volume rendering: a direct line of sight to the relevant structures in the deeper parts of the brain is provided by cutting out a frustum-like piece of brain tissue. The application was developed to run both in standard desktop environments and in immersive virtual reality environments with stereoscopic viewing for improved depth perception. We conclude that the presented application facilitates the perception of the extent of brain degeneration with respect to its localization and affected regions.

@article{Hanel2014b,

author = {H{\"{a}}nel, Claudia and Pieperhoff, Peter and Hentschel, Bernd and Amunts, Katrin and Kuhlen, Torsten},

issn = {1662-5196},

journal = {Frontiers in Neuroinformatics},

number = {42},

pmid = {24847243},

title = {{Interactive 3D visualization of structural changes in the brain of a person with corticobasal syndrome.}},

url = {http://journal.frontiersin.org/article/10.3389/fninf.2014.00042/abstract},

volume = {8},

year = {2014}

}

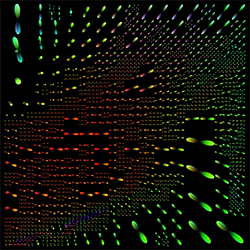

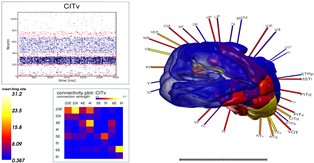

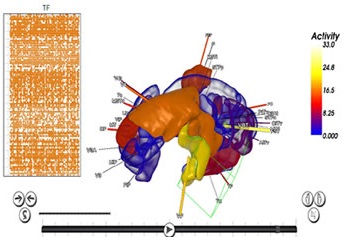

VisNEST – Interactive Analysis of Neural Activity Data

The aim of computational neuroscience is to gain insight into the dynamics and functionality of the nervous system by means of modeling and simulation. Current research leverages the power of High Performance Computing facilities to enable multi-scale simulations capturing both low-level neural activity and large-scale interactions between brain regions. In this paper, we describe an interactive analysis tool that enables neuroscientists to explore data from such simulations. One of the driving challenges behind this work is the integration of macroscopic data at the level of brain regions with microscopic simulation results, such as the activity of individual neurons. While researchers validate their findings mainly by visualizing these data in a non-interactive fashion, state-of-the-art visualizations, tailored to the scientific question yet sufficiently general to accommodate different types of models, enable such analyses to be performed more efficiently. This work describes several visualization designs, conceived in close collaboration with domain experts, for the analysis of network models. We primarily focus on the exploration of neural activity data, inspecting connectivity of brain regions and populations, and visualizing activity flux across regions. We demonstrate the effectiveness of our approach in a case study conducted with domain experts.

Distributed Parallel Particle Advection using Work Requesting

Particle advection is an important vector field visualization technique that is difficult to apply to very large data sets in a distributed setting due to scalability limitations in existing algorithms. In this paper, we report on several experiments using work-requesting dynamic scheduling, which achieves balanced work distribution on arbitrary problems with minimal communication overhead. We present a corresponding prototype implementation, provide and analyze benchmark results, and compare our results to an existing algorithm.
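The idea behind work requesting can be sketched with a toy, round-based model: every worker processes one work item (for example, one particle) per round, and a worker that runs idle asks another worker to hand over part of its queue. The sequential simulation below is only an illustration; choosing the most loaded worker as the one to ask and handing over half of its queue are assumptions, and a real distributed implementation would exchange messages without global knowledge of the load.

```python
from collections import deque


def simulate(initial_loads, request=True):
    """Return the number of rounds until all work items are processed.

    initial_loads -- number of work items each worker starts with
    request       -- if True, idle workers request work before each round
    """
    queues = [deque(range(n)) for n in initial_loads]
    rounds = 0
    while any(queues):
        if request:
            for idle in range(len(queues)):
                if not queues[idle]:
                    # Assumed policy: ask the currently most loaded worker.
                    victim = max(range(len(queues)), key=lambda w: len(queues[w]))
                    share = len(queues[victim]) // 2
                    for _ in range(share):
                        queues[idle].append(queues[victim].pop())
        # Every worker that has work processes exactly one item.
        for q in queues:
            if q:
                q.popleft()
        rounds += 1
    return rounds


# All 16 items start on one of four workers.
static_rounds = simulate([16, 0, 0, 0], request=False)
dynamic_rounds = simulate([16, 0, 0, 0], request=True)
```

In this toy setting the run with work requesting finishes in fewer rounds than the static assignment, because the initially idle workers obtain work instead of waiting.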

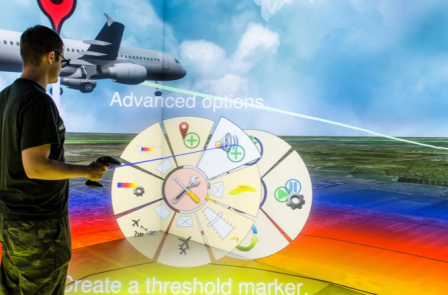

Virtual Air Traffic System Simulation - Aiding the Communication of Air Traffic Effects

A key aspect of air traffic infrastructure projects is the communication between stakeholders during the approval process regarding their environmental impact. Yet, established means of communication have been found to be rather incomprehensible. In this paper, we present an application that addresses these communication issues by enabling the exploration of airplane noise emissions in the vicinity of airports in a virtual environment (VE). The VE is composed of a model of the airport area and flight movement data. We combine a real-time 3D auralization approach with visualization techniques to allow intuitive access to noise emissions. Specifically designed interaction techniques help users to easily explore and compare air traffic scenarios.

Extended Pie Menus for Immersive Virtual Environments

Pie menus are a well-known technique for interacting with 2D environments, and a large body of research documents their usage and optimizations. Yet, comparatively little research has been done on the usability of pie menus in immersive virtual environments (IVEs). In this paper, we reduce this gap by presenting an implementation and evaluation of an extended hierarchical pie menu system for IVEs that can be operated with a six-degrees-of-freedom input device. Following an iterative development process, we first developed and evaluated a basic hierarchical pie menu system. To better understand how pie menus should be operated in IVEs, we tested this system in a pilot user study with 24 participants, focusing on item selection. Based on the results of the study, the system was refined, and elements like check boxes, sliders, and color map editors were added to provide extended functionality. An expert review with five experts was performed with the extended pie menus integrated into an existing VR application to identify potential design issues. Overall, the results indicated high performance and an efficient design.
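Item selection in a pie menu typically reduces to mapping a pointing direction to a sector. The sketch below shows one way to do this; it assumes that the six-degrees-of-freedom input has already been projected to a 2D offset in the menu plane, and the function name `pie_item`, the dead zone, and the layout convention (item 0 centered at the top, indices increasing clockwise) are illustrative choices rather than details of the system described above.

```python
import math


def pie_item(dx, dy, n_items, dead_zone=0.1):
    """Return the index of the item under the offset (dx, dy) from the
    menu center, or None while the pointer is inside the dead zone."""
    if math.hypot(dx, dy) < dead_zone:
        return None
    # Angle measured clockwise from the upward direction, in [0, 360).
    angle = math.degrees(math.atan2(dx, dy)) % 360.0
    sector = 360.0 / n_items
    # Shift by half a sector so that item 0 is centered at the top.
    return int(((angle + sector / 2.0) % 360.0) // sector)


top = pie_item(0.0, 1.0, 8)
right = pie_item(1.0, 0.0, 8)
bottom = pie_item(0.0, -1.0, 8)
left = pie_item(-1.0, 0.0, 8)
center = pie_item(0.0, 0.0, 8)
```

The dead zone avoids accidental selections caused by small hand movements near the menu center.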

Poster: Interactive Visualization of Brain-Scale Spiking Activity

In recent years, the simulation of spiking neural networks has advanced in terms of both simulation technology and knowledge about neuroanatomy. Due to these advances, it is now possible to run simulations at the brain scale, which produce an unprecedented amount of data to be analyzed and understood by researchers. As an aid, VisNEST, a tool for the combined visualization of simulated spike data and anatomy, was developed.

Poster: Interactive Visualization of Brain Volume Changes

The visual analysis of brain volume data by neuroscientists is commonly done in 2D coronal, sagittal and transversal views, limiting the visualization domain from potentially three to two dimensions. This is done to avoid occlusion and thus gain necessary context information. In contrast, this work intends to benefit from all spatial information that can help to understand the original data. Example data of a patient with brain degeneration are used to demonstrate how to enrich 2D with 3D data. To this end, two approaches are presented. First, a conventional 2D section in combination with transparent brain anatomy is used. Second, the principle of importance-driven volume rendering is adapted to allow a direct line-of-sight to relevant structures by means of a frustum-like cutout.

Poster: Hyperslice Visualization of Metamodels for Manufacturing Processes

In modeling and simulation of manufacturing processes, complex models are used to examine and understand the behavior and properties of the product or process. To save computation time, global approximation models, often referred to as metamodels, serve as surrogates for the original complex models. Such metamodels are difficult to interpret, because they usually have multi-dimensional input and output domains. We propose a visualization approach that combines hyperslices with direct volume rendering, training point visualization, and gradient trajectory navigation to help in understanding such metamodels. Great care was taken to provide a high level of interactivity for the exploration of the data space.
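A hyperslice view shows a multi-dimensional function as a set of axis-aligned 2D slices through a common focal point, one slice per pair of input dimensions. The sketch below samples such slices on a regular grid. The function `metamodel` is a made-up stand-in for a real surrogate model, and `hyperslices`, its parameters, and the shared value range for all dimensions are simplifying assumptions.

```python
from itertools import combinations


def hyperslices(f, focus, lo, hi, res):
    """Sample f on every axis-aligned 2D slice through the focal point.

    Returns a dict mapping a dimension pair (i, j) to a res x res grid in
    which dimensions i and j vary over [lo, hi] and all other inputs stay
    at their focal value. Requires res >= 2.
    """
    slices = {}
    for i, j in combinations(range(len(focus)), 2):
        grid = []
        for a in range(res):
            row = []
            for b in range(res):
                x = list(focus)
                x[i] = lo + (hi - lo) * a / (res - 1)
                x[j] = lo + (hi - lo) * b / (res - 1)
                row.append(f(x))
            grid.append(row)
        slices[(i, j)] = grid
    return slices


def metamodel(x):
    """Hypothetical four-input surrogate model used only for illustration."""
    return x[0] ** 2 + 2.0 * x[1] - x[2] * x[3]


slices = hyperslices(metamodel, [0.5, 0.5, 0.5, 0.5], 0.0, 1.0, 3)
```

Moving the focal point and resampling the slices is what makes such a view explorable; for four inputs there are six slices to update.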

Geometrical-Acoustics-based Ultrasound Image Simulation

Brightness modulation (B-Mode) ultrasound (US) images are used to visualize internal body structures during diagnostic and invasive procedures, such as needle insertion for regional anesthesia. Because of limited patient availability and the health risks of invasive procedures, training is often limited; medical training simulators therefore become a viable solution to the problem. Simulation of ultrasound images for medical training requires not only an acceptable level of realism but also interactive rendering times in order to be effective. To address these challenges, we present a generative method for simulating B-Mode ultrasound images using surface representations of the body structures and geometrical acoustics to model sound propagation and its interaction within soft tissue. Furthermore, physical models for backscattered, reflected, and transmitted energies as well as for the beam profile are used in order to improve realism. With the proposed methodology, we are able to simulate, in real time, plausible view- and depth-dependent visual artifacts that are characteristic of B-Mode US images, achieving both realism and interactivity.
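As a small illustration of how energy can be split into a reflected and a transmitted part at a tissue interface, the sketch below uses the standard intensity reflection coefficient for normal incidence, R = ((Z2 - Z1) / (Z2 + Z1))^2, where Z1 and Z2 are the acoustic impedances of the two media. Whether the paper uses exactly this model is not stated in the abstract, so this is an assumption; the impedance values are round numbers chosen for easy arithmetic, not measured tissue properties.

```python
def reflection_coefficient(z1, z2):
    """Intensity reflection coefficient at normal incidence for a wave
    passing from a medium with impedance z1 into one with impedance z2."""
    return ((z2 - z1) / (z2 + z1)) ** 2


def split_energy(energy, z1, z2):
    """Return (reflected, transmitted) energy at the interface,
    ignoring absorption and scattering."""
    r = reflection_coefficient(z1, z2)
    return energy * r, energy * (1.0 - r)


# Illustrative impedances in arbitrary but consistent units.
reflected, transmitted = split_energy(1.0, 1.5, 4.5)
```

A larger impedance mismatch reflects more energy, which is one reason why strong interfaces appear bright in B-Mode images and cast shadows behind them.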

Poster: VisNEST - Interactive Analysis of Neural Activity Data

Modeling and simulating a brain’s connectivity produces an immense amount of data, which has to be analyzed in a timely fashion. Neuroscientists are currently modeling parts of the brain – e.g. the visual cortex – of primates like Macaque monkeys in order to deduce functionality and transfer newly gained insights to the human brain. Current research leverages the power of today’s High Performance Computing (HPC) machines in order to simulate low level neural activity. In this paper, we describe an interactive analysis tool that enables neuroscientists to visualize the resulting simulation output. One of the driving challenges behind our development is the integration of macroscopic data, e.g. brain areas, with