Publications

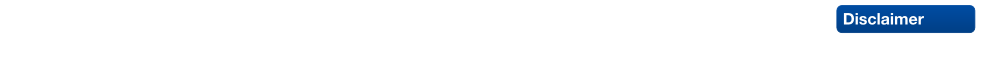

Integrating Visualizations into Modeling NEST Simulations

Modeling large-scale spiking neural networks that show realistic biological behavior in their dynamics is a complex and tedious task. Since these networks consist of millions of interconnected neurons, their simulation produces an immense amount of data. In recent years it has become possible to simulate even larger networks. However, visualization solutions that help researchers understand the complex emergent behavior of a simulation are still lacking. While developing tools to partially fill this gap, we encountered the challenge of integrating these tools easily into the neuroscientists' daily workflow. To understand what makes this so challenging, we looked into the workflows of our collaborators and analyzed how they use visualizations to solve their daily problems. We identified two major issues: first, the focus of the analysis process can change rapidly, which requires switching to the visualization tool that assists with the current problem domain. Second, because simulations produce heterogeneous data, researchers want to relate the different data to investigate them effectively. Since a monolithic application that processes and visualizes all data modalities and reflects all combinations of possible workflows in a holistic way is most likely impossible to develop and maintain, a more feasible approach is a software architecture that offers specialized visualization tools which run simultaneously and can be linked together to reflect the current workflow. To this end, we have developed a software architecture that allows neuroscientists to integrate visualization tools more closely into their modeling tasks. In addition, it forms the basis for semantically linking different visualizations to reflect the current workflow. In this paper, we present this architecture and substantiate the usefulness of our approach with common use cases we encountered in our collaborative work.

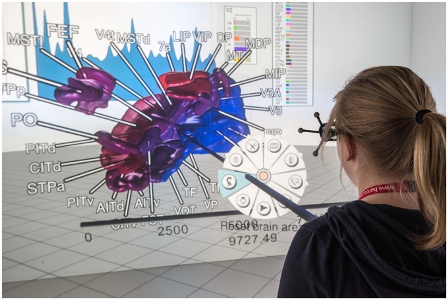

Level-of-Detail Modal Analysis for Real-time Sound Synthesis

Modal sound synthesis is a promising approach for real-time physically-based sound synthesis. A modal analysis is used to compute characteristic vibration modes from the geometry and material properties of scene objects. These modes allow efficient sound synthesis at run-time, but the analysis is computationally expensive and is therefore typically performed in a pre-processing step. In interactive applications, however, objects may be created or modified at run-time. Unless the new shapes are known upfront, the modal data cannot be pre-computed, and the modal analysis has to be performed at run-time. In this paper, we present a system to compute modal sound data at run-time for interactive applications. We evaluate the computational requirements of the modal analysis to determine the computation time for objects of different complexity. Based on these limits, we propose levels-of-detail for the modal analysis that use different geometric approximations to trade speed for accuracy, and we evaluate the errors introduced by lower-resolution results. Additionally, we present an asynchronous architecture to distribute and prioritize modal analysis computations.

@inproceedings {vriphys.20151335,

booktitle = {Workshop on Virtual Reality Interaction and Physical Simulation},

editor = {Fabrice Jaillet and Florence Zara and Gabriel Zachmann},

title = {{Level-of-Detail Modal Analysis for Real-time Sound Synthesis}},

author = {Rausch, Dominik and Hentschel, Bernd and Kuhlen, Torsten W.},

year = {2015},

publisher = {The Eurographics Association},

ISBN = {978-3-905674-98-9},

DOI = {10.2312/vriphys.20151335},

pages = {61--70}

}
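The modal sound synthesis abstract above notes that, once the modes are known, synthesis at run-time is cheap. As a minimal sketch, assuming each mode is described by a frequency, a damping coefficient, and an amplitude (the common modal model of a sum of exponentially damped sinusoids; the paper's exact parameterization is not given here), the output signal can be rendered as follows. The function name and its parameters are hypothetical.

```python
import math


def synthesize_modes(freqs_hz, dampings, amplitudes, duration_s, sample_rate=44100):
    """Render a sum of exponentially damped sinusoids, one per vibration mode.

    y(t) = sum_k a_k * exp(-d_k * t) * sin(2 * pi * f_k * t)
    """
    n_samples = int(duration_s * sample_rate)
    out = [0.0] * n_samples
    for f, d, a in zip(freqs_hz, dampings, amplitudes):
        omega = 2.0 * math.pi * f
        for i in range(n_samples):
            t = i / sample_rate
            out[i] += a * math.exp(-d * t) * math.sin(omega * t)
    return out


# Usage: two hypothetical modes, 10 ms of audio at 44.1 kHz.
signal = synthesize_modes([440.0, 1210.0], [8.0, 20.0], [1.0, 0.4], duration_s=0.01)
```

The expensive part, which the paper moves to run-time and approximates with levels-of-detail, is computing the frequencies, dampings, and amplitudes from the object geometry; this loop only consumes them.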

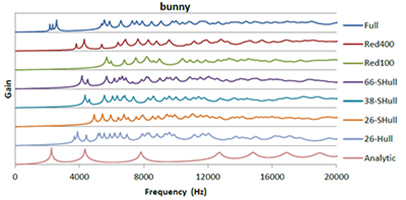

Accurate Contact Modeling for Multi-rate Single-point Haptic Rendering of Static and Deformable Environments

Common approaches for the haptic rendering of complex scenarios employ multi-rate simulation schemes. Here, the collision queries or the simulation of a complex deformable object are often performed asynchronously at a lower frequency, while some kind of intermediate contact representation is used to simulate interactions at the haptic rate. However, this can produce artifacts in the haptic rendering when the contact situation changes quickly and the intermediate representation cannot reflect the changes due to its lower update rate. We address this problem with a novel contact model. It facilitates the creation of contact representations that are accurate for a large range of motions and multiple simulation time-steps. We handle problematic convex contact regions using a local convex decomposition and special constraints for convex areas. We combine our accurate contact model with an implicit temporal integration scheme to create an intermediate mechanical contact representation, which reflects the dynamic behavior of the simulated objects. Moreover, we propose a new iterative solving scheme for the involved constrained dynamics problems. We increase the robustness of our method using techniques from trust-region-based optimization. Our approach can be combined with standard methods for modeling deformable objects or with constraint-based approaches for modeling, for instance, friction or joints. We demonstrate its benefits with respect to simulation accuracy and the quality of the rendered haptic forces in multiple scenarios.

Best Paper Award!

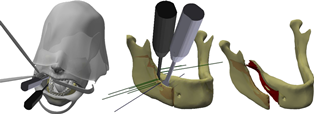

Bimanual Haptic Simulation of Bone Fracturing for the Training of the Bilateral Sagittal Split Osteotomy

In this work, we present a haptic training simulator for a maxillofacial procedure comprising the controlled breaking of the mandible. To our knowledge, the haptic simulation of fracture is seldom addressed, especially when a realistic breaking behavior is required. Our system combines bimanual haptic interaction with a simulation of the bone based on well-founded methods from fracture mechanics. The system resolves the conflict between simulation complexity and haptic real-time constraints by employing a dedicated multi-rate simulation and a special solving strategy for the occurring mechanical equations. Furthermore, we present remeshing-free methods for collision detection and visualization which are tailored for an efficient treatment of the topological changes induced by the fracture. The methods have been successfully implemented and tested in a simulator prototype using real pathological data and a semi-immersive VR system with two haptic devices. We evaluated the computational efficiency of our methods and show that a stable and responsive haptic simulation of the fracturing is achieved.

A Framework for Developing Flexible Virtual-Reality-centered Annotation Systems

The act of note-taking is an essential part of the data analysis process. It has been realized in the form of various annotation systems that have been discussed in many publications. Unfortunately, the focus usually lies on high-level functionality, like interaction metaphors and display strategies. We argue that it is worthwhile to also consider software engineering aspects. Annotation systems often share similar functionality that can potentially be factored into reusable components with the goal of speeding up the creation of new annotation systems. At the same time, however, VR-centered annotation systems are subject not only to application-specific requirements, but also to those arising from differences between the various VR platforms, like desktop VR setups or CAVEs. As a result, it is usually necessary to build application-specific VR-centered annotation systems from scratch instead of reusing existing components.

To improve this situation, we present a framework that provides reusable and adaptable building blocks to facilitate the creation of flexible annotation systems for VR applications. We discuss aspects ranging from data representation and persistence to the integration of new data types and interaction metaphors, especially in the context of multi-platform applications. To underpin the benefits of such an approach and promote the proposed concepts, we describe how the framework was applied to several of our own projects.

Proceedings of the Workshop on Formal Methods in Human Computer Interaction (FoMHCI)

Conference Proceedings

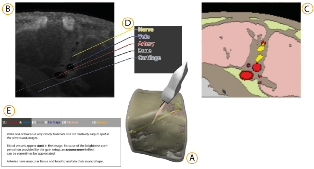

Simulation-based Ultrasound Training Supported by Annotations, Haptics and Linked Multimodal Views

When learning ultrasound (US) imaging, trainees must learn how to recognize structures, interpret textures and shapes, and simultaneously register the 2D ultrasound images to their 3D anatomical mental models. Alleviating the cognitive load imposed by these tasks should free cognitive resources and thereby improve the learning process. We argue that the cognitive load required to mentally rotate the models to match them to the images is too large and therefore negatively impacts the learning process. We present a 3D visualization tool that allows the user to naturally move a 2D slice and navigate around a 3D anatomical model. The slice is displayed in place to facilitate the registration of the 2D slice in its 3D context. Two copies of the slice are also shown outside the model: the first is a simple rendered image showing the outlines of the structures, and the second is a simulated ultrasound image. Haptic cues are also provided to help users maneuver around the 3D model in the virtual space. With the additional display of annotations and information about the most important structures, the tool is expected to complement the available didactic material used in the training of ultrasound procedures.

Comparison and Evaluation of Viewpoint Quality Estimation Algorithms for Immersive Virtual Environments

The knowledge of which places in a virtual environment are interesting or informative can be used to improve user interfaces and to create virtual tours. Viewpoint Quality Estimation algorithms approximate this information by calculating quality scores for viewpoints. However, even though several such algorithms exist and have also been used, e.g., in virtual tour generation, they have never been comparatively evaluated on virtual scenes. In this work, we introduce three new Viewpoint Quality Estimation algorithms, and compare them against each other and six existing metrics, by applying them to two different virtual scenes. Furthermore, we conducted a user study to obtain a quantitative evaluation of viewpoint quality. The results reveal strengths and limitations of the metrics on actual scenes, and provide recommendations on which algorithms to use for real applications.

@InProceedings{Freitag2015,

Title = {{Comparison and Evaluation of Viewpoint Quality Estimation Algorithms for Immersive Virtual Environments}},

Author = {Freitag, Sebastian and Weyers, Benjamin and B\"{o}nsch, Andrea and Kuhlen, Torsten W.},

Booktitle = {ICAT-EGVE 2015 - International Conference on Artificial Reality and Telexistence and Eurographics Symposium on Virtual Environments},

Year = {2015},

Pages = {53--60},

Doi = {10.2312/egve.20151310}

}
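The viewpoint quality abstract above does not name the six existing metrics it compares against. As an illustration of what such a score looks like, here is a minimal sketch of viewpoint entropy, one well-known metric from the literature that treats the relative projected areas of the visible faces (plus the background) as a probability distribution. Whether this exact variant is among those evaluated in the paper is an assumption, and the function name and inputs are hypothetical.

```python
import math


def viewpoint_entropy(projected_areas, background_area=0.0):
    """Viewpoint entropy of one camera position.

    projected_areas: projected (screen-space) area of each visible face.
    background_area: area not covered by the scene, treated as one extra
    "face" as in the commonly cited formulation.
    """
    areas = [a for a in projected_areas if a > 0.0]
    if background_area > 0.0:
        areas.append(background_area)
    total = sum(areas)
    if total == 0.0:
        return 0.0
    # Relative areas act as probabilities; higher entropy means more faces
    # are seen, and in a more balanced way.
    return -sum((a / total) * math.log2(a / total) for a in areas)


# Usage: pick the better of two hypothetical candidate viewpoints.
candidates = {
    "front": [4.0, 4.0, 0.5],
    "corner": [3.0, 3.0, 3.0, 3.0],
}
best = max(candidates, key=lambda name: viewpoint_entropy(candidates[name]))
```

A virtual tour generator could rank sampled camera positions this way; the paper's user study is what tells whether such scores agree with human judgments on real scenes.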

Haptic 3D Surface Representation of Table-Based Data for People With Visual Impairments

The UN Convention on the Rights of Persons with Disabilities Article 24 states that “States Parties shall ensure inclusive education at all levels of education and life long learning.” This article focuses on the inclusion of people with visual impairments in learning processes involving complex table-based data. Gaining insight into and understanding complex data is a highly demanding task for people with visual impairments. Especially for table-based data, the classic approaches of braille-based output devices and printing concepts are limited. Haptic perception requires sequential information processing rather than the parallel processing used by the visual system, which makes it hard to gain a quick overview of, and deeper insight into, the data by touch. Nevertheless, neuroscientific research has identified strong dependencies between haptic perception and the cognitive processing of visual sensing. Based on these findings, we developed a haptic 3D surface representation of classic diagrams and charts, such as bar graphs and pie charts. In a qualitative evaluation study, we identified certain advantages of our relief-type 3D chart approach. Finally, we present an education model for German schools that includes a 3D printing approach to help integrate students with visual impairments.

Verified Stochastic Methods in Geographic Information System Applications With Uncertainty

Modern localization techniques are based on the Global Positioning System (GPS). In general, the accuracy of the measurement depends on various uncertain parameters. In addition, despite its relevance, a number of localization approaches fail to model uncertainty in geographic information system (GIS) applications. This paper describes a new verified method for uncertain GPS localization in GPS and GIS application scenarios based on Dempster-Shafer theory (DST), with two-dimensional and interval-valued basic probability assignments. The main benefit our approach offers for GIS applications is a workflow concept using DST-based models that are embedded into an ontology-based semantic querying mechanism accompanied by 3D visualization techniques. This workflow provides interactive means of querying uncertain GIS models semantically and provides visual feedback.
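The basic DST operation behind such models is fusing evidence from independent sources. A minimal sketch of Dempster's rule of combination is shown below, assuming ordinary point-valued masses and plain floating-point arithmetic; the paper's method instead uses two-dimensional, interval-valued basic probability assignments with verified (interval) computation, which this sketch does not reproduce. The source names and cells are hypothetical.

```python
from itertools import product


def combine_dempster(m1, m2):
    """Dempster's rule of combination for two mass functions.

    m1, m2: dicts mapping focal sets (frozensets) to masses that sum to 1.
    """
    combined = {}
    conflict = 0.0
    for (a, mass_a), (b, mass_b) in product(m1.items(), m2.items()):
        intersection = a & b
        if intersection:
            combined[intersection] = combined.get(intersection, 0.0) + mass_a * mass_b
        else:
            conflict += mass_a * mass_b  # mass that would go to the empty set
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict")
    # Renormalize so that the non-conflicting masses sum to 1 again.
    return {focal: mass / (1.0 - conflict) for focal, mass in combined.items()}


# Usage: two hypothetical position sources over map cells "A" and "B".
gps = {frozenset({"A"}): 0.6, frozenset({"A", "B"}): 0.4}
map_match = {frozenset({"B"}): 0.5, frozenset({"A", "B"}): 0.5}
fused = combine_dempster(gps, map_match)
```

Mass left on `{"A", "B"}` expresses ignorance about which cell is correct, which is what distinguishes DST from assigning a single probability to each cell.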

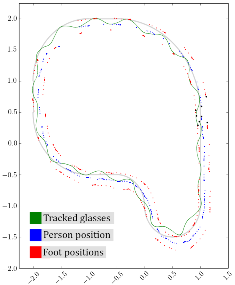

Low-Cost Vision-Based Multi-Person Foot Tracking for CAVE Systems with Under-Floor Projection

In this work, we present an approach for tracking the feet of multiple users in CAVE-like systems with under-floor projection. It is based on low-cost consumer cameras, does not require users to wear additional equipment, and can be installed without modifying existing components. If the brightness of the floor projection does not contain too much variation, the feet of several people can be successfully and precisely tracked and assigned to individuals. The tracking data can be used to enable or enhance user interfaces like Walking-in-Place or torso-directed steering, provide audio feedback for footsteps, and improve the immersive experience for multiple users.

BlowClick: A Non-Verbal Vocal Input Metaphor for Clicking

In contrast to the widespread 6-DOF pointing devices, freehand user interfaces in Immersive Virtual Environments (IVE) are non-intrusive. However, for gesture interfaces, defining trigger signals is challenging. Mechanical devices, dedicated trigger gestures, and speech recognition are common options, but each comes with its own drawbacks. In this paper, we present an alternative approach that allows users to trigger events precisely and with low latency using microphone input. In contrast to speech recognition, the user only blows into the microphone. The audio signature of such blow events can be recognized quickly and precisely. The results of a user study show that the proposed method allows users to successfully complete a standard selection task and performs better than expected against a standard interaction device, the Flystick.

Cirque des Bouteilles: The Art of Blowing on Bottles

Making music by blowing on bottles is fun but challenging. We introduce a novel 3D user interface to play songs on virtual bottles. For this purpose, the user blows into a microphone, and the stream of air is recreated in the virtual environment and redirected to the virtual bottles she is pointing at with her fingers. This is easy to learn and opens up opportunities for quickly switching between bottles and playing groups of them together to form complex melodies. Furthermore, our interface enables customization of the virtual environment by moving bottles or changing their type or filling level.

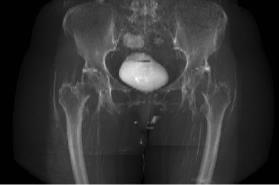

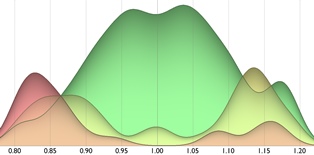

MRI Visualisation by Digitally Reconstructed Radiographs

Visualising volumetric medical images such as computed tomography and magnetic resonance imaging (MRI) on picture archiving and communication system (PACS) clients is often achieved by image browsing in sagittal, coronal or axial views, or by three-dimensional (3D) rendering. The latter technique requires fine thresholding for MRI. On the other hand, computing virtual radiographs, also referred to as digitally reconstructed radiographs (DRR), provides a complete overview of the 3D data in a single two-dimensional (2D) image. DRR therefore appear to be a powerful alternative for MRI visualisation and preview in PACS. This study describes a method to compute DRR from T1-weighted MRI. After segmentation of the background, a histogram distribution analysis is performed and each foreground MRI voxel is labeled as one of three tissues: cortical bone (the principal absorber of X-rays), muscle, or fat. An intensity level is attributed to each voxel according to the Hounsfield scale, which is linearly related to the X-ray attenuation coefficient. Each DRR pixel is computed as the accumulation of the new intensities of the MRI dataset along the corresponding X-ray. The method has been tested on 16 T1-weighted MRI sets. Anterior-posterior and lateral DRR have been computed with reasonable quality and without any manual tissue segmentation.

@Article{Serrurier2015,

Title = {{MRI} {V}isualisation by {D}igitally {R}econstructed {R}adiographs},

Author = {Antoine Serrurier and Andrea B\"{o}nsch and Robert Lau and Thomas M. Deserno (n\'{e} Lehmann)},

Journal = {Proceedings of SPIE 9418, Medical Imaging 2015: PACS and Imaging Informatics: Next Generation and Innovations},

Year = {2015},

Pages = {94180I-94180I-7},

Volume = {9418},

Doi = {10.1117/12.2081845},

Url = {http://rasimas.imib.rwth-aachen.de/output_publications.php}

}
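The DRR pipeline in the abstract above (label voxels, assign Hounsfield values, accumulate along each ray) can be sketched as follows. This is a minimal illustration under several assumptions: the tissue thresholds are given directly instead of being derived from the histogram analysis, the Hounsfield values are approximate textbook-style numbers rather than the paper's, rays are parallel to the first volume axis instead of following a real X-ray geometry, and the result is the plain line integral of attenuation (the abstract does not state whether a final exponential mapping is applied). All names are hypothetical.

```python
# Approximate Hounsfield units per tissue class (assumed values, not the paper's).
HOUNSFIELD = {"background": -1000.0, "cortical_bone": 1000.0, "muscle": 40.0, "fat": -100.0}


def classify(value, is_foreground, t_bone, t_fat):
    """Map a T1-weighted MRI intensity to a tissue label (hypothetical thresholds)."""
    if not is_foreground:
        return "background"
    if value < t_bone:
        return "cortical_bone"  # cortical bone appears dark on T1-weighted MRI
    if value < t_fat:
        return "muscle"
    return "fat"  # fat appears bright on T1-weighted MRI


def hu_to_attenuation(hu, mu_water=1.0):
    """Invert the HU definition HU = 1000 * (mu - mu_water) / mu_water."""
    return mu_water * (1.0 + hu / 1000.0)


def compute_drr(volume, foreground, t_bone, t_fat, voxel_size=1.0):
    """Parallel-projection DRR: accumulate attenuation along the first axis.

    volume and foreground are indexed as [z][y][x]; the result is [y][x].
    """
    ny, nx = len(volume[0]), len(volume[0][0])
    drr = [[0.0] * nx for _ in range(ny)]
    for z in range(len(volume)):
        for y in range(ny):
            for x in range(nx):
                label = classify(volume[z][y][x], foreground[z][y][x], t_bone, t_fat)
                drr[y][x] += hu_to_attenuation(HOUNSFIELD[label]) * voxel_size
    return drr


# Usage: a tiny 2x1x2 volume with hypothetical thresholds.
vol = [[[10.0, 200.0]], [[10.0, 80.0]]]
mask = [[[True, True]], [[True, True]]]
image = compute_drr(vol, mask, t_bone=50.0, t_fat=150.0)
```

Mapping labels to Hounsfield values first, rather than projecting raw MRI intensities, is what makes bone dominate the projection, since bone is dark on T1-weighted MRI but is the strongest X-ray absorber.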

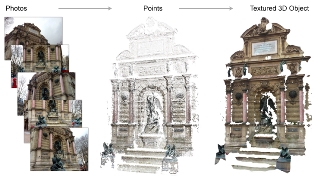

Surface-Reconstructing Growing Neural Gas: A Method for Online Construction of Textured Triangle Meshes

In this paper we propose surface-reconstructing growing neural gas (SGNG), a learning-based artificial neural network that iteratively constructs a triangle mesh from a set of sample points lying on an object's surface. From these input points, SGNG automatically approximates the shape and the topology of the original surface. Furthermore, if images of the surface that are registered to the points are available, it assigns suitable textures to the triangles.

By expressing topological neighborhood via triangles, and by learning visibility from the input data, SGNG constructs a triangle mesh entirely during online learning and does not need any post-processing to close untriangulated holes or to assign suitable textures without occlusion artifacts. Thus, SGNG is well suited for long-running applications that require an iterative pipeline where scanning, reconstruction and visualization are executed in parallel.

Results indicate that SGNG improves upon its predecessors and achieves similar or even better performance in terms of smaller reconstruction errors and better reconstruction quality than existing state-of-the-art reconstruction algorithms. If the input points are updated repeatedly during reconstruction, SGNG performs even faster than existing techniques.

Best Paper Award!

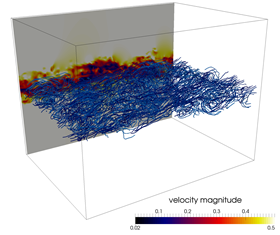

Packet-Oriented Streamline Tracing on Modern SIMD Architectures

The advection of integral lines is an important computational kernel in vector field visualization. We investigate how this kernel can profit from vector (SIMD) extensions in modern CPUs. As a baseline, we formulate a streamline tracing algorithm that facilitates auto-vectorization by an optimizing compiler. We analyze this algorithm and propose two different optimizations. Our results show that particle tracing does not per se benefit from SIMD computation. Based on a careful analysis of the auto-vectorized code, we propose an optimized data access routine and a re-packing scheme which increases average SIMD efficiency. We evaluate our approach on three different turbulent flow fields. Our optimized approaches increase integration performance by up to 5.6x over our baseline measurement. We conclude with a discussion of current limitations and aspects for future work.

@INPROCEEDINGS{Hentschel2015,

author = {Bernd Hentschel and Jens Henrik G{\"o}bbert and Michael Klemm and Paul Springer and Andrea Schnorr and Torsten W. Kuhlen},

title = {{P}acket-{O}riented {S}treamline {T}racing on {M}odern {SIMD} {A}rchitectures},

booktitle = {Proceedings of the Eurographics Symposium on Parallel Graphics and Visualization},

year = {2015},

pages = {43--52},

abstract = {The advection of integral lines is an important computational kernel in vector field visualization. We investigate how this kernel can profit from vector (SIMD) extensions in modern CPUs. As a baseline, we formulate a streamline tracing algorithm that facilitates auto-vectorization by an optimizing compiler. We analyze this algorithm and propose two different optimizations. Our results show that particle tracing does not per se benefit from SIMD computation. Based on a careful analysis of the auto-vectorized code, we propose an optimized data access routine and a re-packing scheme which increases average SIMD efficiency. We evaluate our approach on three different, turbulent flow fields. Our optimized approaches increase integration performance up to 5.6x over our baseline measurement. We conclude with a discussion of current limitations and aspects for future work.}

}
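Why SIMD efficiency drops during particle tracing, and how re-packing helps, can be illustrated by counting lane occupancy. The sketch below is a scalar Python model, not the authors' implementation: Python does not vectorize this loop, the packet width, the RK4 integrator, and the analytic rotation field are all assumptions, and the paper's optimized data access routine is not reproduced. Finished particles keep occupying a lane in a fixed packet; with `repack=True`, live particles are regrouped into full packets after every step.

```python
PACKET_WIDTH = 4  # hypothetical SIMD width, e.g. four doubles per 256-bit register


def velocity(x, y):
    """Hypothetical analytic vector field (rigid rotation) standing in for flow data."""
    return -y, x


def rk4_step(x, y, h):
    """One classical fourth-order Runge-Kutta step."""
    k1x, k1y = velocity(x, y)
    k2x, k2y = velocity(x + 0.5 * h * k1x, y + 0.5 * h * k1y)
    k3x, k3y = velocity(x + 0.5 * h * k2x, y + 0.5 * h * k2y)
    k4x, k4y = velocity(x + h * k3x, y + h * k3y)
    return (x + h / 6.0 * (k1x + 2.0 * k2x + 2.0 * k3x + k4x),
            y + h / 6.0 * (k1y + 2.0 * k2y + 2.0 * k3y + k4y))


def trace_packets(seeds, steps, h=0.01, repack=True):
    """Advance particles in fixed-width packets; return end points and lane utilization."""
    particles = [[x, y, n] for (x, y), n in zip(seeds, steps)]
    packets = [particles[i:i + PACKET_WIDTH] for i in range(0, len(particles), PACKET_WIDTH)]
    issued = used = 0  # lanes issued vs. lanes doing useful work
    while packets:
        for packet in packets:
            issued += PACKET_WIDTH
            for p in packet:  # on SIMD hardware these lanes would run in lockstep
                if p[2] > 0:  # finished particles are masked out and do no work
                    p[0], p[1] = rk4_step(p[0], p[1], h)
                    p[2] -= 1
                    used += 1
        if repack:
            live = [p for packet in packets for p in packet if p[2] > 0]
            packets = [live[i:i + PACKET_WIDTH] for i in range(0, len(live), PACKET_WIDTH)]
        else:
            packets = [packet for packet in packets if any(p[2] > 0 for p in packet)]
    return [(p[0], p[1]) for p in particles], (used / issued if issued else 1.0)


# Usage: one long-lived particle in each of two packets.
seeds = [(1.0, 0.0)] * 8
steps = [10, 1, 1, 1, 10, 1, 1, 1]
ends_fixed, eff_fixed = trace_packets(seeds, steps, repack=False)    # 26 / 80 lanes busy
ends_repacked, eff_repacked = trace_packets(seeds, steps, repack=True)  # 26 / 44 lanes busy
```

Both modes produce identical end points; only the number of issued lanes differs, which is the quantity a re-packing scheme aims to reduce.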

Gaze Guiding zur Unterstützung der Bedienung technischer Systeme - Gaze Guiding to Support the Operation of Technical Systems

Avoiding operating errors is of central importance, particularly in safety-critical systems. To make it easier to recall once-learned skills for operating and controlling technical systems, and thereby to avoid errors, so-called refresher interventions are used. So far, these have mostly been elaborate simulator or simulation training sessions that refresh previously acquired skills through repeated execution, so that they can be recalled correctly in rarely occurring critical situations. This work shows how the goal of recall can also be achieved without refresher training, by means of a gaze guiding component that is embedded into a visual user interface for operating the technical process and supports skill retrieval through targeted, context-dependent fade-ins and overlays. The effect of this concept is currently being investigated in a larger DFG-funded study.

An Integrative Tool Chain for Collaborative Virtual Museums in Immersive Virtual Environments

Various conceptual approaches for the creation and presentation of virtual museums exist. However, less work concentrates on collaboration in virtual museums. Supporting collaboration in virtual museums provides various benefits for the visit as well as for the preparation and creation of virtual exhibits. This paper addresses one major problem of collaboration in virtual museums: visitor awareness. We use a Cave Automatic Virtual Environment (CAVE) to visualize generated virtual museums, which offers simple awareness through co-location. Furthermore, smartphones used during the visit enable visitors to create comments or to access exhibit-related metadata. Thus, the main contribution of this ongoing work is a workflow that enables the integrated deployment of generic virtual museums into a CAVE, which will be demonstrated by deploying the virtual Leopold Fleischhacker Museum.

Crowdsourcing and Knowledge Co-creation in Virtual Museums

This paper gives an overview of crowdsourcing practices in virtual museums. Engaged non-professionals and specialists support curators in creating digital 2D or 3D exhibits, in exhibition and tour planning, and in enhancing metadata using the Virtual Museum and Cultural Object Exchange Format (ViMCOX). ViMCOX provides the semantic structure of exhibitions and complete museums and includes new features, such as room and outdoor design, interactions with artwork, path planning, and the dissemination and presentation of contents. Application examples show the impact of crowdsourcing in the Museo de Arte Contemporaneo in Santiago de Chile and in the virtual museum depicting the life and work of the Jewish sculptor Leopold Fleischhacker. A further use case is devoted to crowd-based support for the restoration of high-quality 3D shapes.

Workshop on Formal Methods in Human Computer Interaction

This workshop aims to gather active researchers and practitioners in the field of formal methods in the context of user interfaces, interaction techniques, and interactive systems. The main objectives are to look at how the definition and use of formal methods for interactive systems have evolved since the last book on the field nearly 20 years ago, and to identify important themes for the next decade of research. The goals of this workshop are to offer an exchange platform for scientists who are interested in the formal modeling and description of interaction, user interfaces, and interactive systems, and to discuss existing formal modeling methods in this area. Participants will be asked to present their perspectives, concepts, and techniques for formal modeling based on one of two case studies: the control of a nuclear power plant and an air traffic management arrival manager.

FILL: Formal Description of Executable and Reconfigurable Models of Interactive Systems

This paper presents the Formal Interaction Logic Language (FILL) as a modeling approach for describing user interfaces in an executable way. In the context of the workshop on Formal Methods in Human Computer Interaction, this work presents FILL by first introducing its architectural structure, its visual representation, and its transformation to reference nets, a special type of Petri nets, and finally discusses FILL in the context of two use cases proposed by the workshop. In particular, this work shows how FILL can be used to model automation as part of the user interface model, as well as how formal reconfiguration can be used to implement user-based automation given a formal user interface model.
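Executing a FILL model ultimately means firing transitions in a Petri net. The sketch below shows only the basic firing rule of a plain place/transition net; reference nets additionally allow nets as tokens and synchronous channels, and FILL's transformation into them is not shown. The places and the transition are hypothetical, loosely modeled on a user interface button that forwards a command to the controlled system.

```python
def is_enabled(marking, transition):
    """A transition is enabled if every input place holds enough tokens."""
    inputs, _ = transition
    return all(marking.get(place, 0) >= weight for place, weight in inputs.items())


def fire(marking, transition):
    """Return the marking after firing; the input marking is left unchanged."""
    if not is_enabled(marking, transition):
        raise ValueError("transition is not enabled")
    inputs, outputs = transition
    new_marking = dict(marking)
    for place, weight in inputs.items():
        new_marking[place] -= weight  # consume tokens from input places
    for place, weight in outputs.items():
        new_marking[place] = new_marking.get(place, 0) + weight  # produce tokens
    return new_marking


# Usage: a hypothetical button that forwards a press event to the system
# only while the interface is unlocked (the "unlocked" token is returned).
press_button = ({"unlocked": 1, "press_event": 1}, {"unlocked": 1, "open_valve_cmd": 1})
m0 = {"unlocked": 1, "press_event": 1}
m1 = fire(m0, press_button)
```

Removing the `unlocked` token, for example by another transition modeling automation, disables the button without changing its definition, which hints at why a net-based model lends itself to formal reconfiguration.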

3DUIdol: 6th Annual 3DUI Contest

The 6th annual IEEE 3DUI contest focuses on Virtual Music Instruments (VMIs) and on 3D user interfaces for playing them. The contest is part of the IEEE 2015 3DUI Symposium held in Arles, France. The contest is open to anyone interested in 3D User Interfaces (3DUIs), from researchers to students, enthusiasts, and professionals. The purpose of the contest is to stimulate innovative and creative solutions to challenging 3DUI problems. Due to the recent explosion of affordable and portable 3D devices, this year's contest will be judged live at 3DUI. Judging will be done by selected 3DUI experts during on-site presentations at the conference. Therefore, contestants are required to bring their systems for live judging and for attendees to experience.

Poster: Scalable Metadata In- and Output for Multi-platform Data Annotation Applications

Metadata in- and output are important steps within the data annotation process. However, selecting techniques that effectively facilitate these steps is non-trivial, especially for applications that have to run on multiple virtual reality platforms. Not all techniques are applicable to or available on every system, so workflows have to be adapted on a per-system basis. Here, we describe a metadata handling system based on Android's Intent system that automatically adapts workflows and thereby makes manual adaptation unnecessary.

Poster: Vision-based Multi-Person Foot Tracking for CAVE Systems with Under-Floor Projection

In this work, we present an approach for tracking the feet of multiple users in CAVE-like systems with under-floor projection. It is based on low-cost consumer cameras, does not require users to wear additional equipment, and can be installed without modifying existing components. If the brightness of the floor projection does not contain too much variation, the feet of several people can be reliably tracked and assigned to individuals.

Poster: Effects and Applicability of Rotation Gain in CAVE-like Environments

In this work, we report on a pilot study we conducted, and on a study design, to examine the effects and applicability of rotation gain in CAVE-like virtual environments. The results of the study will give recommendations for the maximum levels of rotation gain that are reasonable in algorithms for enlarging the virtual field of regard or redirected walking.

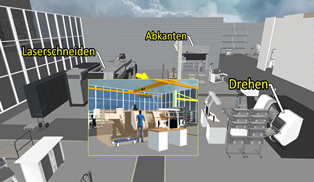

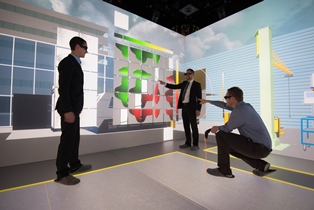

Poster: flapAssist: How the Integration of VR and Visualization Tools Fosters the Factory Planning Process

Virtual Reality (VR) systems are of growing importance for decision support in the context of the digital factory, especially in factory layout planning. While current solutions focus either on virtual walkthroughs or on the visualization of more abstract information, no solution that provides both currently exists. To close this gap, we present a holistic VR application, called Factory Layout Planning Assistant (flapAssist). It is meant to serve as a platform for planning the layout of factories, while also providing a wide range of analysis features. By being scalable from desktops to CAVEs and providing a link to a central integration platform, flapAssist integrates well into established factory planning workflows.

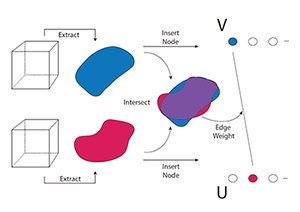

Poster: Tracking Space-Filling Structures in Turbulent Flows

We present a novel approach for tracking space-filling features, i.e., a set of features that covers the entire domain. In contrast to previous work, we determine the assignment between features from successive time steps by computing a globally optimal, maximum-weight, maximal matching on a weighted bipartite graph. We demonstrate the method's functionality by tracking dissipation elements (DEs), a space-filling structure definition from turbulent flow analysis. The ability to track DEs over time enables researchers from fluid mechanics to extend their analysis beyond the assessment of static flow fields to time-dependent settings.

@INPROCEEDINGS{Schnorr2015,

author = {Andrea Schnorr and Jens-Henrik Goebbert and Torsten W. Kuhlen and Bernd Hentschel},

title = {{T}racking {S}pace-{F}illing {S}tructures in {T}urbulent {F}lows},

booktitle = {Proceedings of the IEEE Symposium on Large Data Analysis and Visualization (LDAV)},

year = {2015},

pages = {143--144},

abstract = {We present a novel approach for tracking space-filling features, i.e. a set of features which covers the entire domain. In contrast to previous work, we determine the assignment between features from successive time steps by computing a globally optimal, maximum-weight, maximal matching on a weighted, bi-partite graph. We demonstrate the method's functionality by tracking dissipation elements (DEs), a space-filling structure definition from turbulent flow analysis. The ability to track DEs over time enables researchers from fluid mechanics to extend their analysis beyond the assessment of static flow fields to time-dependent settings.},

doi = {10.1109/LDAV.2015.7348089},

keywords = {Feature Tracking, Weighted, Bi-Partite Matching, Flow Visualization, Dissipation Elements}

}
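The difference between a globally optimal matching and greedy feature correspondence, as described in the tracking abstract above, shows up even on tiny examples. The sketch below finds a maximum-weight bipartite matching by exhaustive search; it assumes non-negative weights (for instance, a hypothetical overlap score between dissipation elements at consecutive time steps) and a non-empty weight matrix, and its factorial runtime restricts it to illustration. For realistic feature counts, a polynomial-time method such as the Hungarian algorithm (available, e.g., as SciPy's `linear_sum_assignment` with `maximize=True`) would be used instead; which solver the authors used is not stated in the abstract.

```python
from itertools import permutations


def max_weight_matching(weights):
    """Exhaustive maximum-weight bipartite matching (illustration only).

    weights[i][j] >= 0 scores how well feature i at time t corresponds to
    feature j at time t+1; 0 means "no edge". weights must be non-empty.
    """
    transposed = len(weights) > len(weights[0])
    if transposed:
        weights = [list(column) for column in zip(*weights)]
    n_rows, n_cols = len(weights), len(weights[0])
    best_score, best_pairs = -1.0, []
    # Try every injective assignment of rows to columns.
    for columns in permutations(range(n_cols), n_rows):
        score = sum(weights[i][j] for i, j in enumerate(columns))
        if score > best_score:
            best_score = score
            # Zero-weight pairs are not real edges, so they are left unmatched.
            best_pairs = [(i, j) for i, j in enumerate(columns) if weights[i][j] > 0]
    if transposed:
        best_pairs = [(j, i) for i, j in best_pairs]
    return sorted(best_pairs), best_score


# Usage: hypothetical overlap scores for two features at t and two at t+1.
overlap = [[5.0, 4.0],
           [4.0, 0.0]]
pairs, total = max_weight_matching(overlap)
```

A greedy strategy would first take the strongest pair (0, 0) with weight 5 and then find no further positive edge, whereas the global optimum assigns (0, 1) and (1, 0) for a total weight of 8.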

Ein Konzept zur Integration von Virtual Reality Anwendungen zur Verbesserung des Informationsaustauschs im Fabrikplanungsprozess - A Concept for the Integration of Virtual Reality Applications to Improve the Information Exchange within the Factory Planning Process

Factory planning is a highly heterogeneous process that involves various expert groups at the same time. In this context, communication between the different expert groups poses a major challenge. One reason for this lies in the differing domain knowledge of the individual groups. However, since decisions made within one domain usually affect others, it is essential to make these domain interactions visible to all involved experts in order to improve the overall planning process. In this paper, we present a concept that facilitates the integration of two separate virtual reality and visualization analysis tools for different application domains of the planning process. The concept was developed in the context of the Virtual Production Intelligence and aims to make domain interactions visible, such that the aforementioned challenges can be mitigated.

@Article{Pick2015,

Title = {Ein Konzept zur Integration von Virtual Reality Anwendungen zur Verbesserung des Informationsaustauschs im Fabrikplanungsprozess},

Author = {S. Pick and S. Gebhardt and B. Hentschel and T. W. Kuhlen and R. Reinhard and C. Büscher and T. {Al Khawli} and U. Eppelt and H. Voet and J. Utsch},

Journal = {Tagungsband 12. Paderborner Workshop Augmented \& Virtual Reality in der Produktentstehung},

Year = {2015},

Pages = {139--152}

}

Ein Ansatz zur Softwaretechnischen Integration von Virtual Reality Anwendungen am Beispiel des Fabrikplanungsprozesses - An Approach for the Softwaretechnical Integration of Virtual Reality Applications by the Example of the Factory Planning Process

The integration of independent applications is a complex task from a software engineering perspective. Nonetheless, it entails significant benefits, especially in the context of Virtual Reality (VR) supported factory planning, e.g., to communicate interdependencies between different domains. To emphasize this aspect, we integrated two independent VR and visualization applications into a holistic planning solution. Special focus was put on parallelization and interaction aspects, while also considering more general requirements of such an integration process. In summary, we present technical solutions for effectively integrating several VR applications into a holistic solution, exemplified by the integration of two factory planning applications. The effectiveness of the approach is demonstrated by performance measurements.

@Article{Gebhardt2015,

Title = {Ein Ansatz zur Softwaretechnischen Integration von Virtual Reality Anwendungen am Beispiel des Fabrikplanungsprozesses},

Author = {S. Gebhardt and S. Pick and B. Hentschel and T. W. Kuhlen and R. Reinhard and C. Büscher},

Journal = {Tagungsband 12. Paderborner Workshop Augmented \& Virtual Reality in der Produktentstehung},

Year = {2015},

Pages = {153--166}

}

Immersive Art: Using a CAVE-like Virtual Environment for the Presentation of Digital Works of Art

Digital works of art are often created using some kind of modeling software, like Cinema4D. Usually they are presented in a non-interactive form, like large Diasecs, and can thus only be experienced by passive viewing. To explore alternative, more captivating presentation channels, we investigate the use of a CAVE virtual reality (VR) system as an immersive and interactive presentation platform in this paper. To this end, in a collaboration with an artist, we built an interactive VR experience from one of his existing works. We provide details on our design and report on the results of a qualitative user study.


@Article{Pick2015,

Title = {{Immersive Art: Using a CAVE-like Virtual Environment for the Presentation of Digital Works of Art}},

Author = {Pick, Sebastian and B\"{o}nsch, Andrea and Scully, Dennis and Kuhlen, Torsten W.},

Journal = {{V}irtuelle und {E}rweiterte {R}ealit\"at, 12. {W}orkshop der {GI}-{F}achgruppe {VR}/{AR}},

Year = {2015},

Pages = {10--21},

ISBN = {978-3-8440-3868-2},

Publisher = {Shaker Verlag}

}
