Profile

Prof. Dr. Torsten Wolfgang Kuhlen

Publications

Exploring Gaze Dynamics: Initial Findings on the Role of Listening Bystanders in Conversational Interactions

This work-in-progress paper investigates how virtual listening bystanders influence participants’ gaze behavior and their perception of turn-taking during scripted conversations with embodied conversational agents (ECAs). 25 participants interacted with five ECAs – two speakers and three bystanders – across three conditions: no bystanders, bystanders exhibiting random gazing behavior, and social bystanders engaging in mutual gaze and backchanneling. Participants either observed the conversation or actively participated as speakers by reciting prompted sentences. The results indicated that bystanders reduced the participants’ attention to speakers, hindering their ability to anticipate turn changes and resulting in longer delays in shifting their gaze to the new speaker after an ECA yielded the turn. Random gazing bystanders were particularly noted for obscuring conversational flow. These findings underscore the challenges of designing effective and natural conversational environments, highlighting the need for careful consideration of ECA behaviors to enhance user engagement.

@INPROCEEDINGS{Ehret2025,

author={Ehret, Jonathan and Dasbach, Valentin and Hartmann, Jan-Nikjas and

Fels, Janina and Kuhlen, Torsten W. and Bönsch, Andrea},

booktitle={2025 IEEE Conference on Virtual Reality and 3D User Interfaces

Abstracts and Workshops (VRW)},

title={Exploring Gaze Dynamics: Initial Findings on the Role of Listening

Bystanders in Conversational Interactions},

year={2025},

volume={},

number={},

pages={748-752},

doi={10.1109/VRW66409.2025.00151}}

Geschichte(n) in Virtual Reality - Perspektiven der Informatik

Watch the pyramids of Giza being built, and then travel straight on to ancient Rome? Virtual reality is supposed to make this and more possible. But what does that do to our understanding of history?

Virtual reality applications with historical content are booming. They promise virtual time travel and the chance to finally show what the past was really like. This gives rise to ways of engaging with history that not only shape historical culture and history education outside of school but also increasingly influence history teaching in the classroom. This volume therefore centers on the question: What does virtual reality do to history? While from the perspective of computer science historical content "merely" entails special design criteria, the historical sciences find themselves facing a competitor in the representation of history, one that may even threaten to render them obsolete. Museums and memorial sites face the task of integrating VR applications into their offerings and nevertheless, or precisely by doing so, attracting visitors to their institutions. Against this background, history didactics discusses the consequences of virtual representations, inside and outside the classroom, for historical learning processes. Experts from the fields mentioned present their perspectives on the question: Is virtual reality the future of historical education?

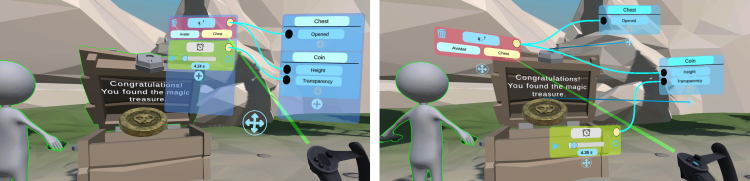

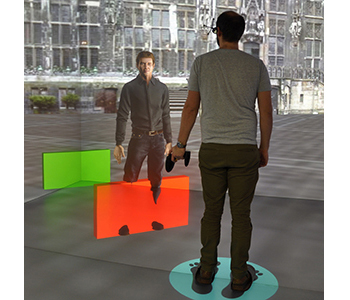

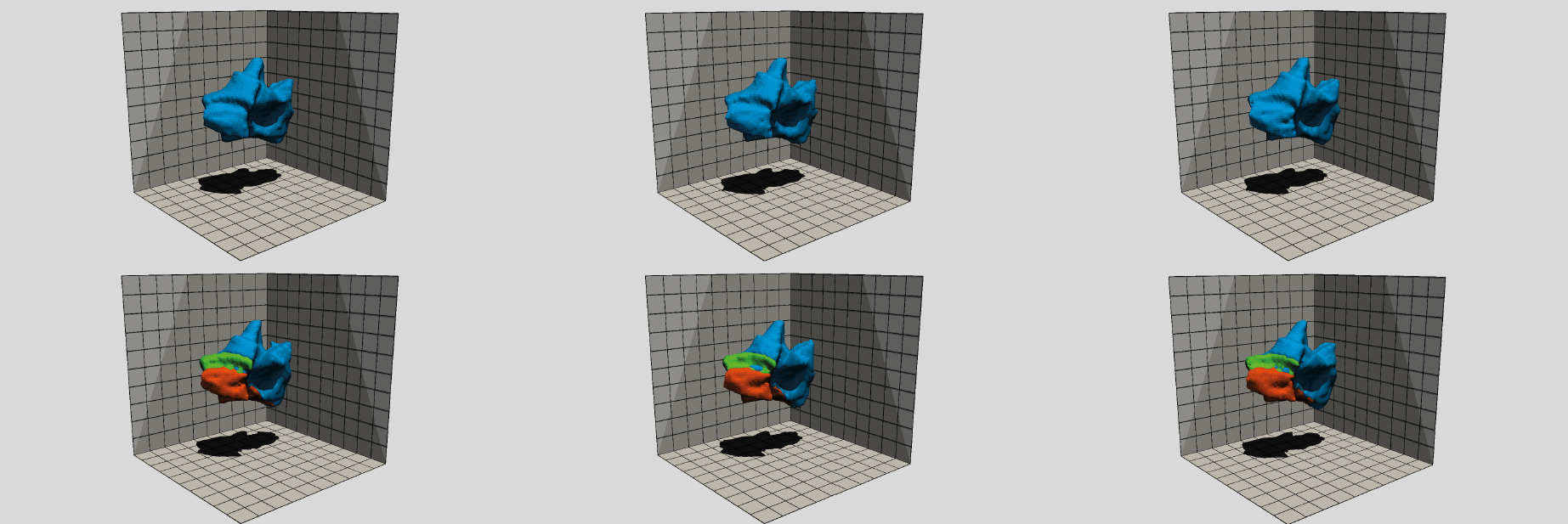

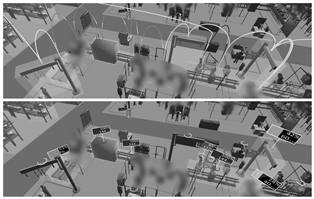

PASCAL - A Collaboration Technique Between Non-Collocated Avatars in Large Collaborative Virtual Environments

Collaborative work in large virtual environments often requires transitions from loosely-coupled collaboration at different locations to tightly-coupled collaboration at a common meeting point. Inspired by prior work on the continuum between these extremes, we present two novel interaction techniques designed to share spatial context while collaborating over large virtual distances. The first method replicates the familiar setup of a video conference by providing users with a virtual tablet to share video feeds with their peers. The second method called PASCAL (Parallel Avatars in a Shared Collaborative Aura Link) enables users to share their immediate spatial surroundings with others by creating synchronized copies of it at the remote locations of their collaborators. We evaluated both techniques in a within-subject user study, in which 24 participants were tasked with solving a puzzle in groups of two. Our results indicate that the additional contextual information provided by PASCAL had significantly positive effects on task completion time, ease of communication, mutual understanding, and co-presence. As a result, our insights contribute to the repertoire of successful interaction techniques to mediate between loosely- and tightly-coupled work in collaborative virtual environments.

@article{Gilbert2025,

author={D. {Gilbert} and A. {Bose} and T. {Kuhlen} and T. {Weissker}},

journal={IEEE Transactions on Visualization and Computer Graphics},

title={PASCAL - A Collaboration Technique Between Non-Collocated Avatars in Large Collaborative Virtual Environments},

year={2025},

volume={31},

number={5},

pages={1268-1278},

doi={10.1109/TVCG.2025.3549175}

}

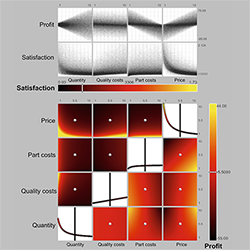

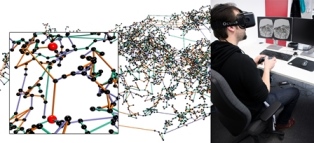

Minimalism or Creative Chaos? On the Arrangement and Analysis of Numerous Scatterplots in Immersive 3D Knowledge Spaces

Working with scatterplots is a classic everyday task for data analysts, which gets increasingly complex the more plots are required to form an understanding of the underlying data. To help analysts retrieve relevant plots more quickly when they are needed, immersive virtual environments (iVEs) provide them with the option to freely arrange scatterplots in the 3D space around them. In this paper, we investigate the impact of different virtual environments on the users' ability to quickly find and retrieve individual scatterplots from a larger collection. We tested three different scenarios, all having in common that users were able to position the plots freely in space according to their own needs, but each providing them with varying numbers of landmarks serving as visual cues - an Empty scene as a baseline condition, a single landmark condition with one prominent visual cue being a Desk, and a multiple landmarks condition being a virtual Office. Results from a between-subject investigation with 45 participants indicate that the time and effort users invest in arranging their plots within an iVE had a greater impact on memory performance than the design of the iVE itself. We report on the individual arrangement strategies that participants used to solve the task effectively and underline the importance of an active arrangement phase for supporting the spatial memorization of scatterplots in iVEs.

@article{Derksen2025,

author={M. {Derksen} and T. {Kuhlen} and M. {Botsch} and T. {Weissker}},

journal={IEEE Transactions on Visualization and Computer Graphics},

title={Minimalism or Creative Chaos? On the Arrangement and Analysis of Numerous Scatterplots in Immersive 3D Knowledge Spaces},

year={2025},

volume={31},

number={5},

pages={746-756},

doi={10.1109/TVCG.2025.3549546}

}

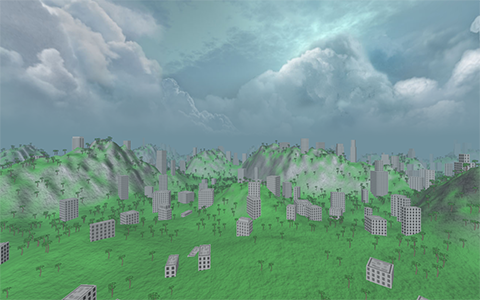

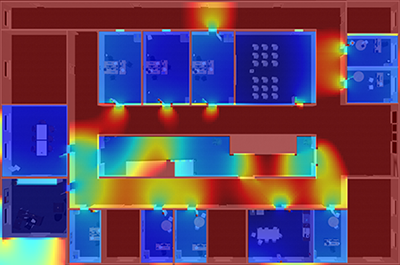

Wayfinding in Immersive Virtual Environments as Social Activity Supported by Virtual Agents

Effective navigation and interaction within immersive virtual environments rely on thorough scene exploration. Therefore, wayfinding is essential, assisting users in comprehending their surroundings, planning routes, and making informed decisions. Based on real-life observations, wayfinding is, thereby, not only a cognitive process but also a social activity profoundly influenced by the presence and behaviors of others. In virtual environments, these 'others' are virtual agents (VAs), defined as anthropomorphic computer-controlled characters, who enliven the environment and can serve as background characters or direct interaction partners. However, little research has been done to explore how to efficiently use VAs as social wayfinding support. In this paper, we aim to assess and contrast user experience, user comfort, and the acquisition of scene knowledge through a between-subjects study involving n = 60 participants across three distinct wayfinding conditions in one slightly populated urban environment: (i) unsupported wayfinding, (ii) strong social wayfinding using a virtual supporter who incorporates guiding and accompanying elements while directly impacting the participants' wayfinding decisions, and (iii) weak social wayfinding using flows of VAs that subtly influence the participants' wayfinding decisions by their locomotion behavior. Our work is the first to compare the impact of VAs' behavior in virtual reality on users' scene exploration, including spatial awareness, scene comprehension, and comfort. The results show the general utility of social wayfinding support, while underscoring the superiority of the strong type. Nevertheless, further exploration of weak social wayfinding as a promising technique is needed. Thus, our work contributes to the enhancement of VAs as advanced user interfaces, increasing user acceptance and usability.

@article{Boensch2024,

title={Wayfinding in Immersive Virtual Environments as Social Activity Supported by Virtual Agents},

author={B{\"o}nsch, Andrea and Ehret, Jonathan and Rupp, Daniel and Kuhlen, Torsten W.},

journal={Frontiers in Virtual Reality},

volume={4},

year={2024},

pages={1334795},

publisher={Frontiers},

doi={10.3389/frvir.2023.1334795}

}

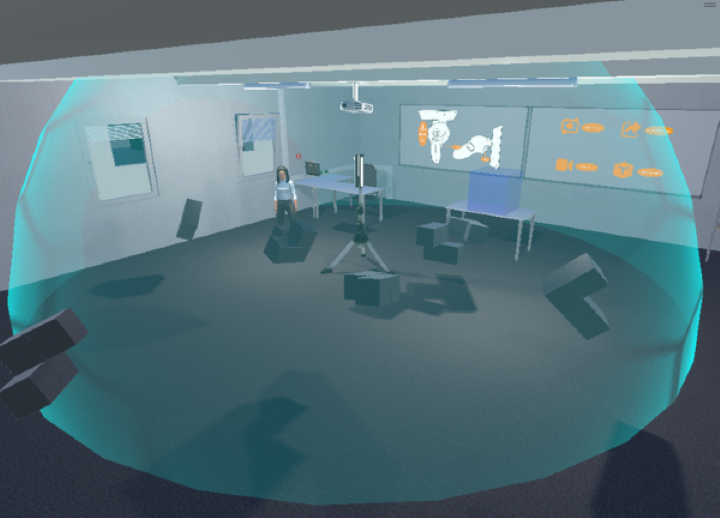

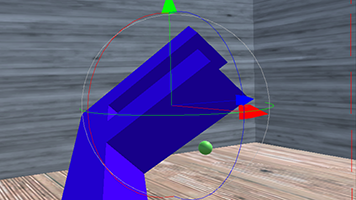

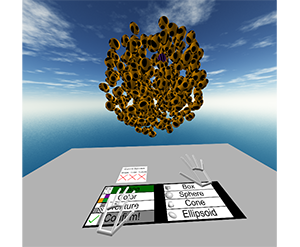

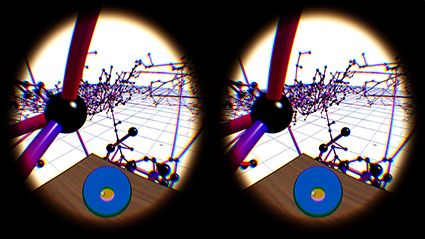

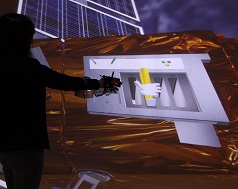

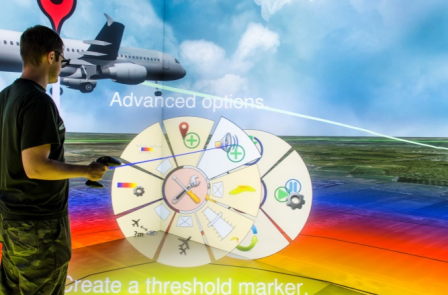

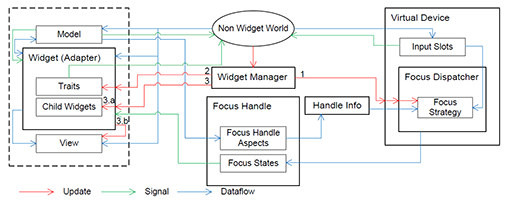

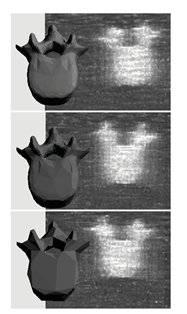

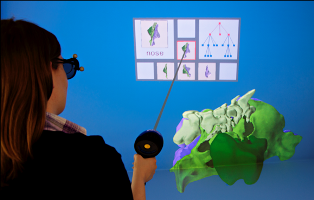

Choose Your Reference Frame Right: An Immersive Authoring Technique for Creating Reactive Behavior

Immersive authoring enables content creation for virtual environments without a break of immersion. To enable immersive authoring of reactive behavior for a broad audience, we present modulation mapping, a simplified visual programming technique. To evaluate the applicability of our technique, we investigate the role of reference frames in which the programming elements are positioned, as this can affect the user experience. Thus, we developed two interface layouts: "surround-referenced" and "object-referenced". The former positions the programming elements relative to the physical tracking space, and the latter relative to the virtual scene objects. We compared the layouts in an empirical user study (n = 34) and found the surround-referenced layout faster, lower in task load, less cluttered, easier to learn and use, and preferred by users. Qualitative feedback, however, revealed the object-referenced layout as more intuitive, engaging, and valuable for visual debugging. Based on the results, we propose initial design implications for immersive authoring of reactive behavior by visual programming. Overall, modulation mapping was found to be an effective means for creating reactive behavior by the participants.

Honorable Mention for Best Paper!

@inproceedings{eroglu2024choose,

title={Choose Your Reference Frame Right: An Immersive Authoring Technique for Creating Reactive Behavior},

author={Eroglu, Sevinc and Schmitz, Patric and Sinke, Kilian and Anders, David and Kuhlen, Torsten Wolfgang and Weyers, Benjamin},

booktitle={30th ACM Symposium on Virtual Reality Software and Technology},

pages={1--11},

year={2024}

}

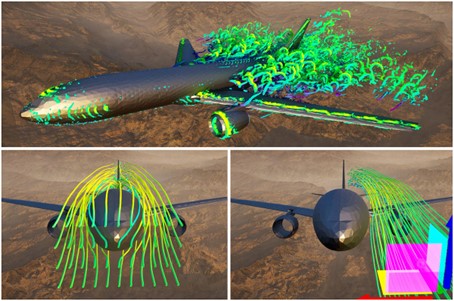

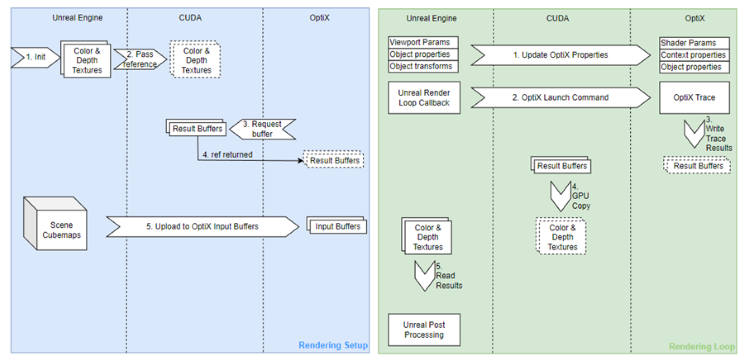

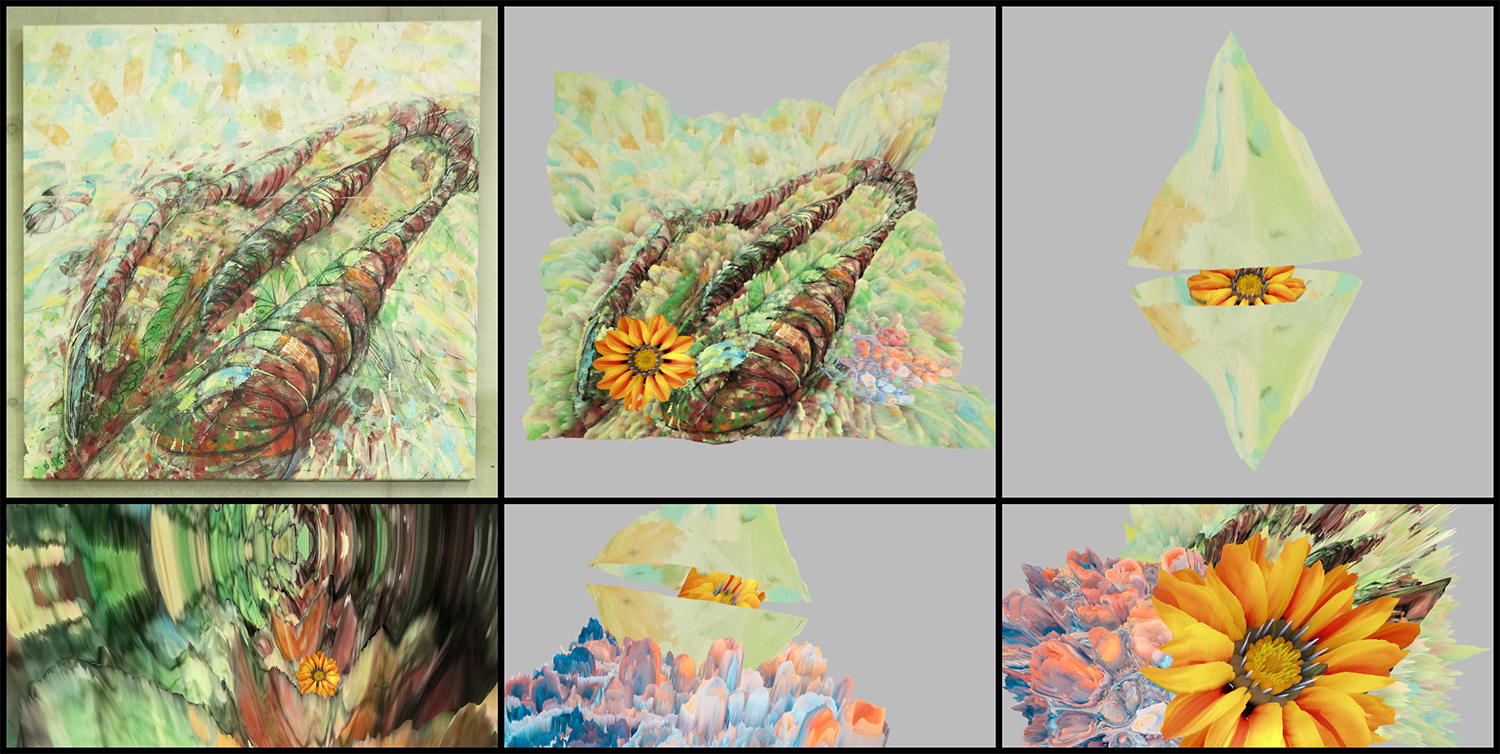

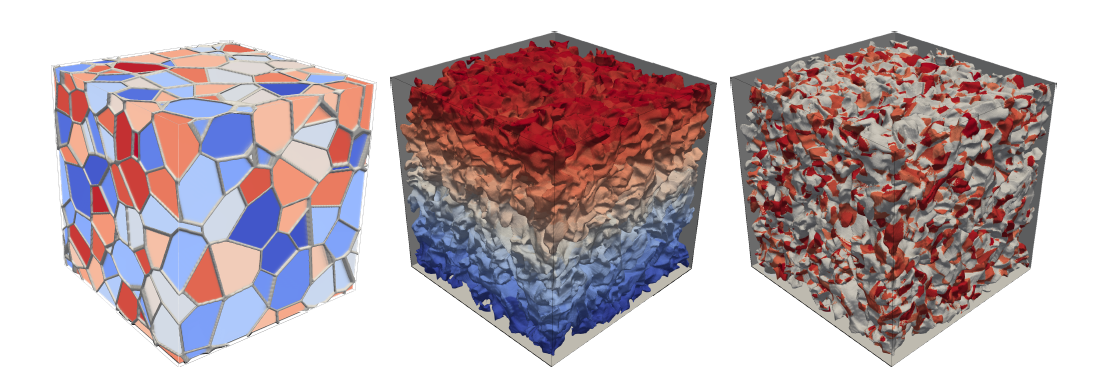

InsitUE - Enabling Hybrid In-situ Visualizations through Unreal Engine and Catalyst

In-situ, in-transit, and hybrid approaches have become well-established visualization methods over the last decades. Especially for large simulations, these paradigms enable visualization and additionally allow for early insights. While there has been a lot of research on combining these approaches with classical visualization software, only a few worked on combining in-situ/in-transit approaches with modern game engines. In this paper, we present and demonstrate InsitUE, a Catalyst2 compatible hybrid workflow that enables interactive real-time visualization of simulation results using Unreal Engine.

@InProceedings{10.1007/978-3-031-73716-9_33,

author="Kr{\"u}ger, Marcel

and Milke, Jan Frieder

and Kuhlen, Torsten W.

and Gerrits, Tim",

editor="Weiland, Mich{\`e}le

and Neuwirth, Sarah

and Kruse, Carola

and Weinzierl, Tobias",

title="InsitUE - Enabling Hybrid In-situ Visualizations Through Unreal Engine and Catalyst",

booktitle="High Performance Computing. ISC High Performance 2024 International Workshops",

year="2025",

publisher="Springer Nature Switzerland",

address="Cham",

pages="469--481",

isbn="978-3-031-73716-9"

}

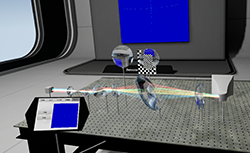

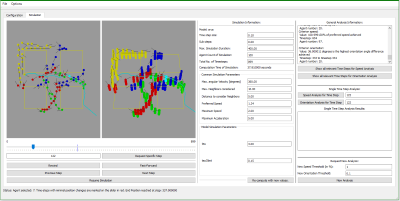

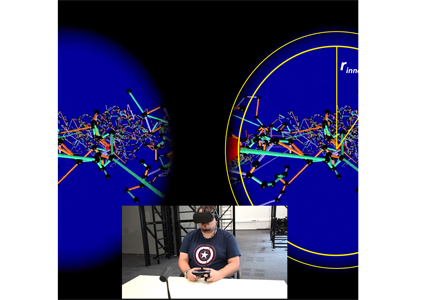

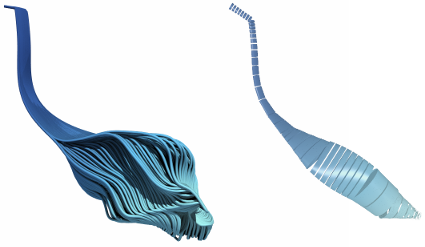

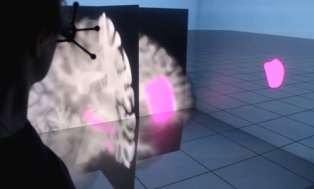

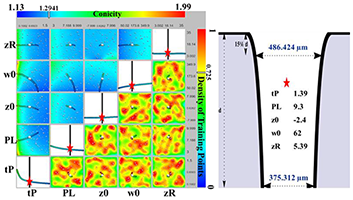

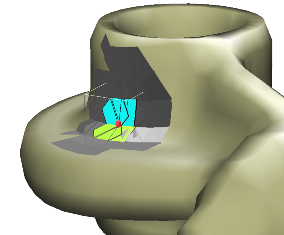

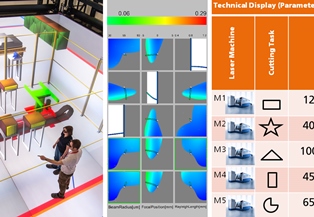

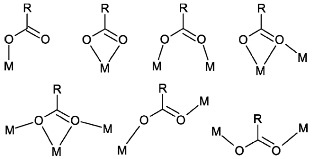

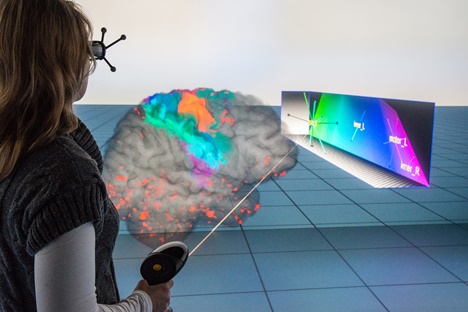

Virtual Reality as a Tool for Monitoring Additive Manufacturing Processes via Digital Shadows

We present a data acquisition and visualization pipeline that allows experts to monitor additive manufacturing processes, in particular laser metal deposition with wire (LMD-w) processes, in immersive virtual reality. Our virtual environment consists of a digital shadow of the LMD-w production site enriched with additional measurement data shown on both static as well as handheld virtual displays. Users can explore the production site by enhanced teleportation capabilities that enable them to change their scale as well as their elevation above the ground plane. In an exploratory user study with 22 participants, we demonstrate that our system is generally suitable for the supervision of LMD-w processes while generating low task load and cybersickness. Therefore, it serves as a first promising step towards the successful application of virtual reality technology in the comparatively young field of additive manufacturing.

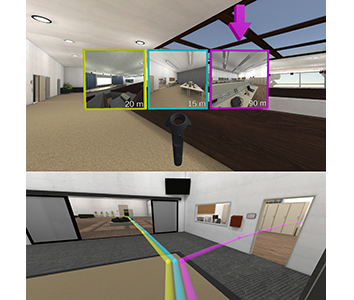

Semi-Automated Guided Teleportation through Immersive Virtual Environments

Immersive knowledge spaces like museums or cultural sites are often explored by traversing pre-defined paths that are curated to unfold a specific educational narrative. To support this type of guided exploration in VR, we present a semi-automated, handsfree path traversal technique based on teleportation that features a slow-paced interaction workflow targeted at fostering knowledge acquisition and maintaining spatial awareness. In an empirical user study with 34 participants, we evaluated two variations of our technique, differing in the presence or absence of intermediate teleportation points between the main points of interest along the route. While visiting additional intermediate points was objectively less efficient, our results indicate significant benefits of this approach regarding the user’s spatial awareness and perception of interface dependability. However, the user’s perception of flow, presence, attractiveness, perspicuity, and stimulation did not differ significantly. The overall positive reception of our approach encourages further research into semi-automated locomotion based on teleportation and provides initial insights into the design space of successful techniques in this domain.

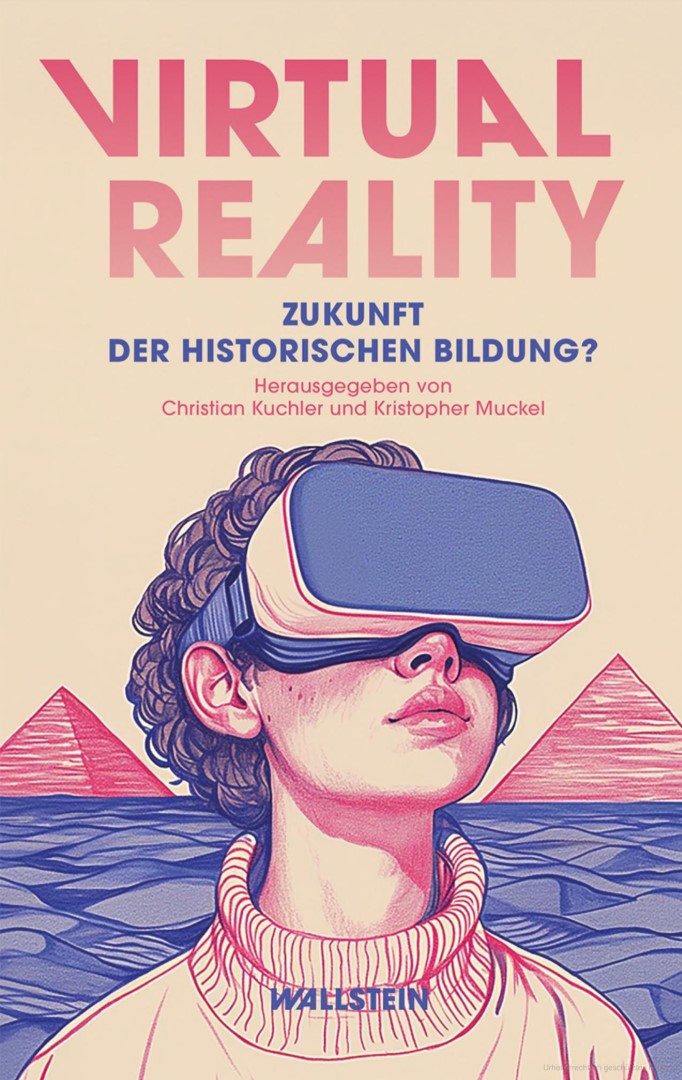

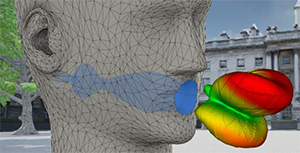

A Lecturer’s Voice Quality and its Effect on Memory, Listening Effort, and Perception in a VR Environment

Many lecturers develop voice problems, such as hoarseness. Nevertheless, research on how voice quality influences listeners’ perception, comprehension, and retention of spoken language is limited to a small number of audio-only experiments. We aimed to address this gap by using audio-visual virtual reality (VR) to investigate the impact of a lecturer’s hoarseness on university students’ heard text recall, listening effort, and listening impression. Fifty participants were immersed in a virtual seminar room, where they engaged in a Dual-Task Paradigm. They listened to narratives presented by a virtual female professor, who spoke in either a typical or hoarse voice. Simultaneously, participants performed a secondary task. Results revealed significantly prolonged secondary-task response times with the hoarse voice compared to the typical voice, indicating increased listening effort. Subjectively, participants rated the hoarse voice as more annoying, effortful to listen to, and impeding for their cognitive performance. No effect of voice quality was found on heard text recall, suggesting that, while hoarseness may compromise certain aspects of spoken language processing, this might not necessarily result in reduced information retention. In summary, our findings underscore the importance of promoting vocal health among lecturers, which may contribute to enhanced listening conditions in learning spaces.

@article{Schiller2024,

author = {Isabel S. Schiller and Carolin Breuer and Lukas Aspöck and

Jonathan Ehret and Andrea Bönsch and Torsten W. Kuhlen and Janina Fels and

Sabine J. Schlittmeier},

doi = {10.1038/s41598-024-63097-6},

issn = {2045-2322},

issue = {1},

journal = {Scientific Reports},

keywords = {Audio-visual language processing,Virtual reality,Voice

quality},

month = {5},

pages = {12407},

pmid = {38811832},

title = {A lecturer’s voice quality and its effect on memory, listening

effort, and perception in a VR environment},

volume = {14},

url = {https://www.nature.com/articles/s41598-024-63097-6},

year = {2024},

}

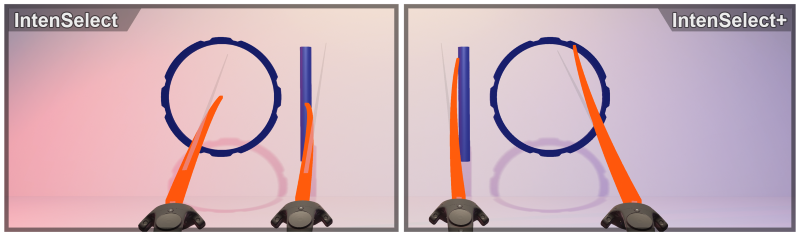

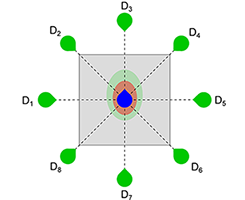

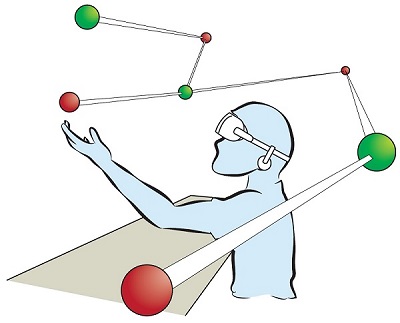

IntenSelect+: Enhancing Score-Based Selection in Virtual Reality

Object selection in virtual environments is one of the most common and recurring interaction tasks. Therefore, the used technique can critically influence a system’s overall efficiency and usability. IntenSelect is a scoring-based selection-by-volume technique that was shown to offer improved selection performance over conventional raycasting in virtual reality. This initial method, however, is most pronounced for small spherical objects that converge to a point-like appearance only, is challenging to parameterize, and has inherent limitations in terms of flexibility. We present an enhanced version of IntenSelect called IntenSelect+ designed to overcome multiple shortcomings of the original IntenSelect approach. In an empirical within-subjects user study with 42 participants, we compared IntenSelect+ to IntenSelect and conventional raycasting on various complex object configurations motivated by prior work. In addition to replicating the previously shown benefits of IntenSelect over raycasting, our results demonstrate significant advantages of IntenSelect+ over IntenSelect regarding selection performance, task load, and user experience. We, therefore, conclude that IntenSelect+ is a promising enhancement of the original approach that enables faster, more precise, and more comfortable object selection in immersive virtual environments.

@ARTICLE{10459000,

author={Krüger, Marcel and Gerrits, Tim and Römer, Timon and Kuhlen, Torsten and Weissker, Tim},

journal={IEEE Transactions on Visualization and Computer Graphics},

title={IntenSelect+: Enhancing Score-Based Selection in Virtual Reality},

year={2024},

volume={},

number={},

pages={1-10},

keywords={Visualization;Three-dimensional displays;Task analysis;Usability;Virtual environments;Shape;Engines;Virtual Reality;3D User Interfaces;3D Interaction;Selection;Score-Based Selection;Temporal Selection;IntenSelect}

}

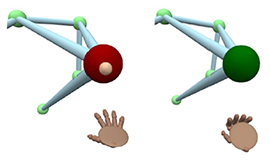

Authentication in Immersive Virtual Environments through Gesture-Based Interaction with a Virtual Agent

Authentication poses a significant challenge in VR applications, as conventional methods, such as text input for usernames and passwords, prove cumbersome and unnatural in immersive virtual environments. Alternatives such as password managers or two-factor authentication may necessitate users to disengage from the virtual experience by removing their headsets. Consequently, we present an innovative system that utilizes virtual agents (VAs) as interaction partners, enabling users to authenticate naturally through a set of ten gestures, such as high fives, fist bumps, or waving. By combining these gestures, users can create personalized authentications akin to PINs, potentially enhancing security without compromising the immersive experience. To gain first insights into the suitability of this authentication process, we conducted a formal expert review with five participants and compared our system to a virtual keypad authentication approach. While our results show that the effectiveness of a VA-mediated gesture-based authentication system is still limited, they motivate further research in this area.

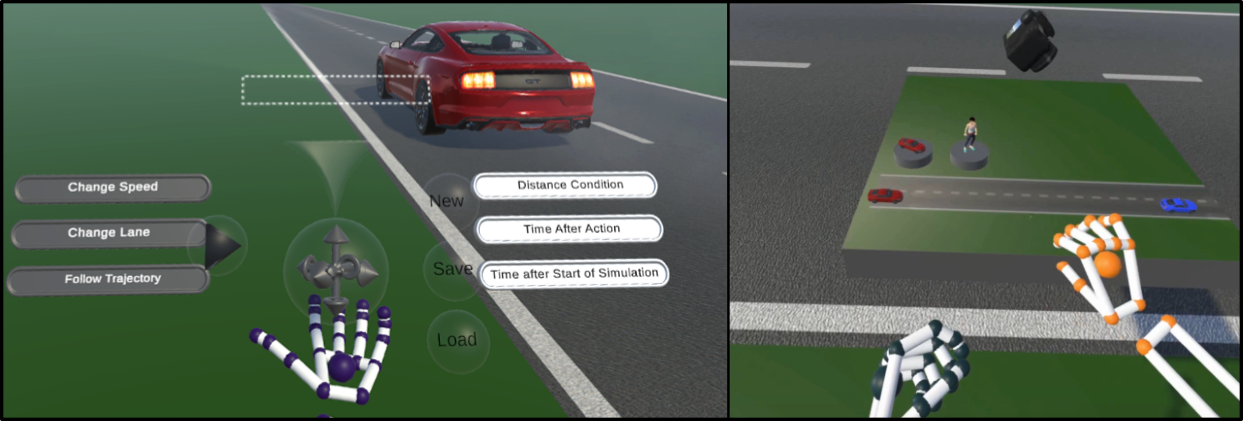

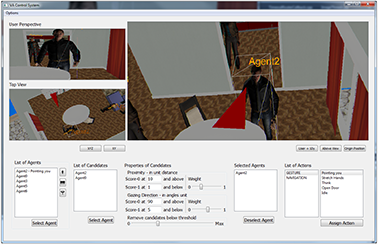

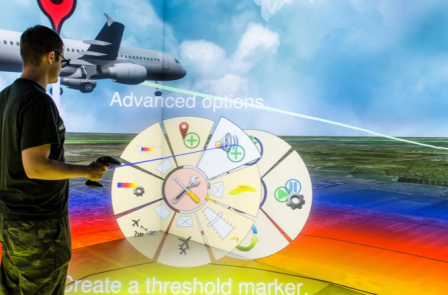

VRScenarioBuilder: Free-Hand Immersive Authoring Tool for Scenario-based Testing of Automated Vehicles

Virtual Reality has become an important medium in the automotive industry, providing engineers with a simulated platform to actively engage with and evaluate realistic driving scenarios for testing and validating automated vehicles. However, engineers are often restricted to using 2D desktop-based tools for designing driving scenarios, which can result in inefficiencies in the development and testing cycles. To this end, we present VRScenarioBuilder, an immersive authoring tool that enables engineers to create and modify dynamic driving scenarios directly in VR using free-hand interactions. Our tool features a natural user interface that enables users to create scenarios by using drag-and-drop building blocks. To evaluate the interface components and interactions, we conducted a user study with VR experts. Our findings highlight the effectiveness and potential improvements of our tool. We have further identified future research directions, such as exploring the spatial arrangement of the interface components and managing lengthy blocks.

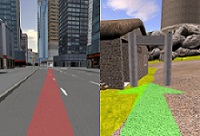

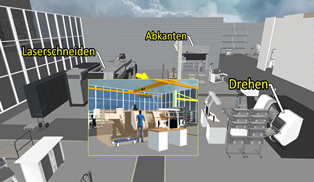

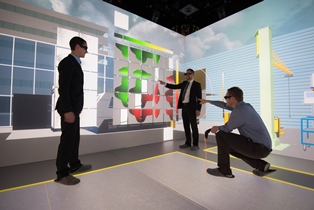

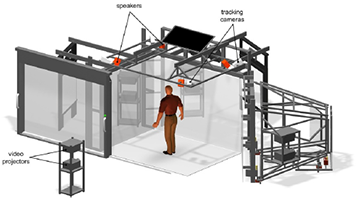

Game Engines for Immersive Visualization: Using Unreal Engine Beyond Entertainment

One core aspect of immersive visualization labs is to develop and provide powerful tools and applications that allow for efficient analysis and exploration of scientific data. As the requirements for such applications are often diverse and complex, the same applies to the development process. This has led to a myriad of different tools, frameworks, and approaches that grew and developed over time. The steady advance of commercial off-the-shelf game engines such as Unreal Engine has made them a valuable option for development in immersive visualization labs. In this work, we share our experience of migrating to Unreal Engine as a primary developing environment for immersive visualization applications. We share our considerations on requirements, present use cases developed in our lab to communicate advantages and challenges experienced, discuss implications on our research and development environments, and aim to provide guidance for others within our community facing similar challenges.

@article{10.1162/pres_a_00416,

author = {Krüger, Marcel and Gilbert, David and Kuhlen, Torsten W. and Gerrits, Tim},

title = "{Game Engines for Immersive Visualization: Using Unreal Engine Beyond Entertainment}",

journal = {PRESENCE: Virtual and Augmented Reality},

volume = {33},

pages = {31-55},

year = {2024},

month = {07},

issn = {1054-7460},

doi = {10.1162/pres_a_00416},

url = {https://doi.org/10.1162/pres\_a\_00416},

eprint = {https://direct.mit.edu/pvar/article-pdf/doi/10.1162/pres\_a\_00416/2465397/pres\_a\_00416.pdf},

}

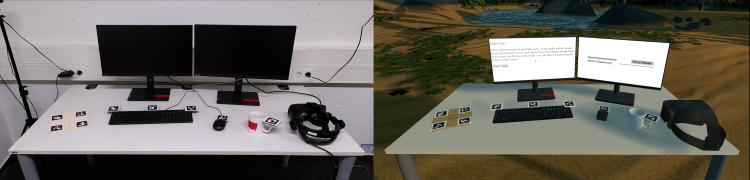

Demo: Webcam-based Hand- and Object-Tracking for a Desktop Workspace in Virtual Reality

As virtual reality overlays the user’s view, challenges arise when interaction with their physical surroundings is still needed. In a seated workspace environment, interaction with the physical surroundings can be essential to enable productive working. Interaction with, e.g., a physical mouse and keyboard can be difficult when no visual reference is given to where they are placed. This demo shows a combination of computer vision-based marker detection with machine-learning-based hand detection to bring users’ hands and arbitrary objects into VR.

@inproceedings{10.1145/3677386.3688879,

author = {Pape, Sebastian and Beierle, Jonathan Heinrich and Kuhlen, Torsten Wolfgang and Weissker, Tim},

title = {Webcam-based Hand- and Object-Tracking for a Desktop Workspace in Virtual Reality},

year = {2024},

isbn = {9798400710889},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3677386.3688879},

doi = {10.1145/3677386.3688879},

booktitle = {Proceedings of the 2024 ACM Symposium on Spatial User Interaction},

articleno = {64},

numpages = {2},

keywords = {Hand-Tracking, Object-Tracking, Physical Props, Virtual Reality, Webcam},

location = {Trier, Germany},

series = {SUI '24}

}

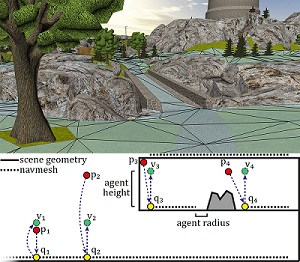

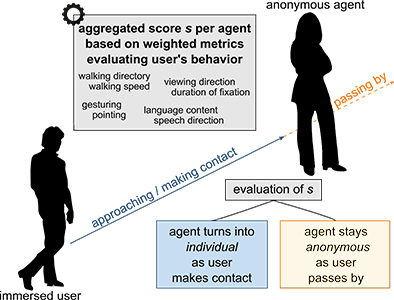

On the Computation of User Placements for Virtual Formation Adjustments during Group Navigation

Several group navigation techniques enable a single navigator to control travel for all group members simultaneously in social virtual reality. A key aspect of this process is the ability to rearrange the group into a new formation to facilitate the joint observation of the scene or to avoid obstacles on the way. However, the question of how users should be distributed within the new formation to create an intuitive transition that minimizes disruptions of ongoing social activities is currently not explored. In this paper, we begin to close this gap by introducing four user placement strategies based on mathematical considerations, discussing their benefits and drawbacks, and sketching further novel ideas to approach this topic from different angles in future work. Our work, therefore, contributes to the overarching goal of making group interactions in social virtual reality more intuitive and comfortable for the involved users.

@INPROCEEDINGS{10536250,

author={Weissker, Tim and Franzgrote, Matthis and Kuhlen, Torsten and Gerrits, Tim},

booktitle={2024 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW)},

title={On the Computation of User Placements for Virtual Formation Adjustments During Group Navigation},

year={2024},

volume={},

number={},

pages={396-402},

keywords={Three-dimensional displays;Navigation;Conferences;Virtual reality;Human factors;User interfaces;Task analysis;Human-centered computing—Human computer interaction (HCI)—Interaction paradigms—Virtual reality;Human-centered computing—Interaction design—Interaction design theory, concepts and paradigms},

doi={10.1109/VRW62533.2024.00077}}

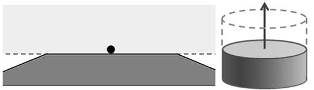

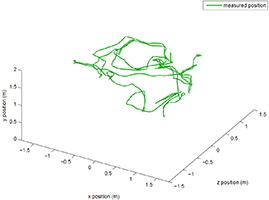

Try This for Size: Multi-Scale Teleportation in Immersive Virtual Reality

The ability of a user to adjust their own scale while traveling through virtual environments enables them to inspect tiny features being ant-sized and to gain an overview of the surroundings as a giant. While prior work has almost exclusively focused on steering-based interfaces for multi-scale travel, we present three novel teleportation-based techniques that avoid continuous motion flow to reduce the risk of cybersickness. Our approaches build on the extension of known teleportation workflows and suggest specifying scale adjustments either simultaneously with, as a connected second step after, or separately from the user’s new horizontal position. The results of a two-part user study with 30 participants indicate that the simultaneous and connected specification paradigms are both suitable candidates for effective and comfortable multi-scale teleportation with nuanced individual benefits. Scale specification as a separate mode, on the other hand, was considered less beneficial. We compare our findings to prior research and publish the executable of our user study to facilitate replication and further analyses.

@ARTICLE{10458384,

author={Weissker, Tim and Franzgrote, Matthis and Kuhlen, Torsten},

journal={IEEE Transactions on Visualization and Computer Graphics},

title={Try This for Size: Multi-Scale Teleportation in Immersive Virtual Reality},

year={2024},

volume={30},

number={5},

pages={2298-2308},

keywords={Teleportation;Navigation;Virtual environments;Three-dimensional displays;Visualization;Cybersickness;Collaboration;Virtual Reality;3D User Interfaces;3D Navigation;Head-Mounted Display;Teleportation;Multi-Scale},

doi={10.1109/TVCG.2024.3372043}}

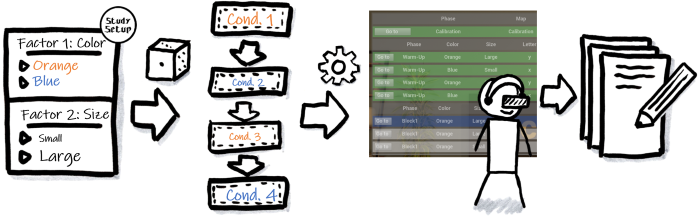

StudyFramework: Comfortably Setting up and Conducting Factorial-Design Studies Using the Unreal Engine

Setting up and conducting user studies is fundamental to virtual reality research. Yet, often these studies are developed from scratch, which is time-consuming and especially hard and error-prone for novice developers. In this paper, we introduce the StudyFramework, a framework specifically designed to streamline the setup and execution of factorial-design VR-based user studies within the Unreal Engine, significantly enhancing the overall process. We elucidate core concepts such as setup, randomization, the experimenter view, and logging. After utilizing our framework to set up and conduct their respective studies, 11 study developers provided valuable feedback through a structured questionnaire. This feedback, which was generally positive, highlighting its simplicity and usability, is discussed in detail.

@InProceedings{Ehret2024a,

author={Ehret, Jonathan and Bönsch, Andrea and Fels, Janina and

Schlittmeier, Sabine J. and Kuhlen, Torsten W.},

booktitle={2024 IEEE Conference on Virtual Reality and 3D User Interfaces

Abstracts and Workshops (VRW): Workshop "Open Access Tools and Libraries for

Virtual Reality"},

title={StudyFramework: Comfortably Setting up and Conducting

Factorial-Design Studies Using the Unreal Engine},

year={2024}

}

Audiovisual Coherence: Is Embodiment of Background Noise Sources a Necessity?

Exploring the synergy between visual and acoustic cues in virtual reality (VR) is crucial for elevating user engagement and perceived (social) presence. We present a study exploring the necessity and design impact of background sound source visualizations to guide the design of future soundscapes. To this end, we immersed n = 27 participants using a head-mounted display (HMD) within a virtual seminar room with six virtual peers and a virtual female professor. Participants engaged in a dual-task paradigm involving simultaneously listening to the professor and performing a secondary vibrotactile task, followed by recalling the heard speech content. We compared three types of background sound source visualizations in a within-subject design: no visualization, static visualization, and animated visualization. Participants’ subjective ratings indicate the importance of animated background sound source visualization for an optimal coherent audiovisual representation, particularly when embedding peer-emitted sounds. However, despite this subjective preference, audiovisual coherence did not affect participants’ performance in the dual-task paradigm measuring their listening effort.

@InProceedings{Ehret2024b,

author={Ehret, Jonathan and Bönsch, Andrea and Schiller, Isabel S. and

Breuer, Carolin and Aspöck, Lukas and Fels, Janina and Schlittmeier, Sabine

J. and Kuhlen, Torsten W.},

booktitle={2024 IEEE Conference on Virtual Reality and 3D User Interfaces

Abstracts and Workshops (VRW): "Workshop on Virtual Humans and Crowds in

Immersive Environments (VHCIE)"},

title={Audiovisual Coherence: Is Embodiment of Background Noise Sources a

Necessity?},

year={2024}

}

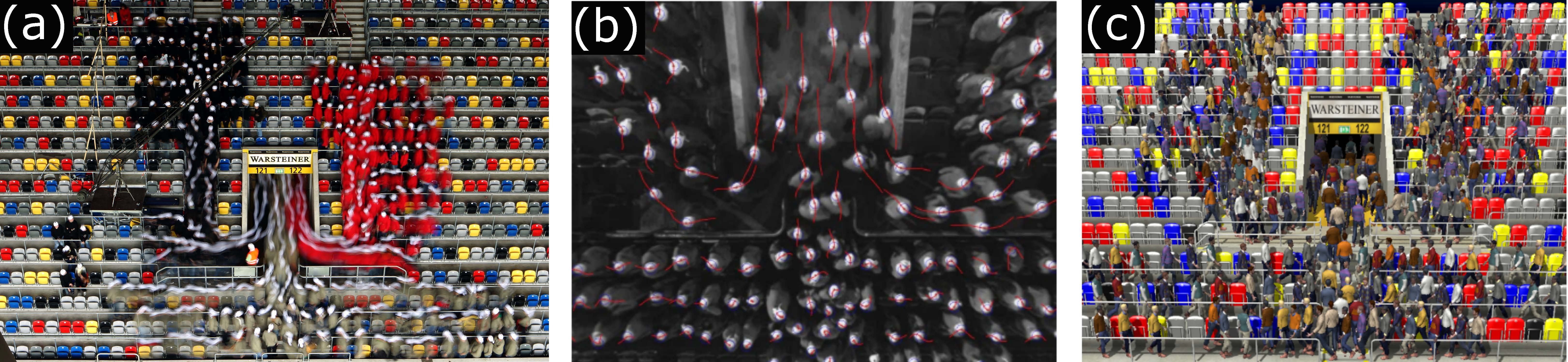

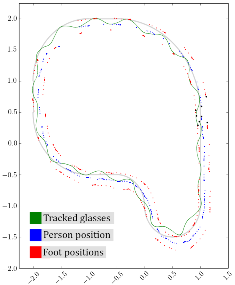

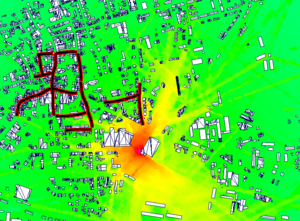

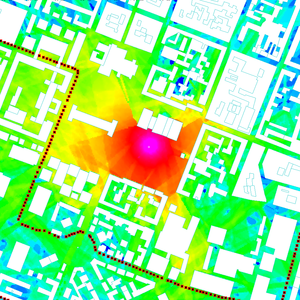

Late-Breaking Report: VR-CrowdCraft: Coupling and Advancing Research in Pedestrian Dynamics and Social Virtual Reality

VR-CrowdCraft is a newly formed interdisciplinary initiative, dedicated to the convergence and advancement of two distinct yet interconnected research fields: pedestrian dynamics (PD) and social virtual reality (VR). The initiative aims to establish foundational workflows for a systematic integration of PD data obtained from real-life experiments, encompassing scenarios ranging from smaller clusters of approximately ten individuals to larger groups comprising several hundred pedestrians, into immersive virtual environments (IVEs), addressing the following two crucial goals: (1) Advancing pedestrian dynamic analysis and (2) Advancing virtual pedestrian behavior: authentic populated IVEs and new PD experiments. The LBR presentation will focus on goal 1.

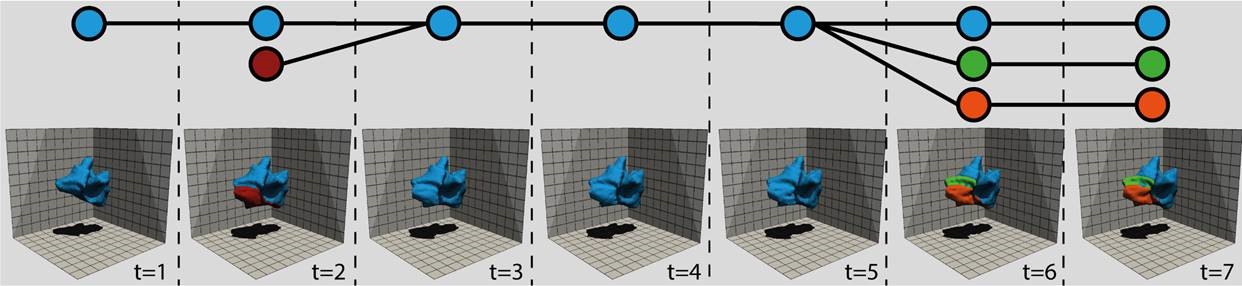

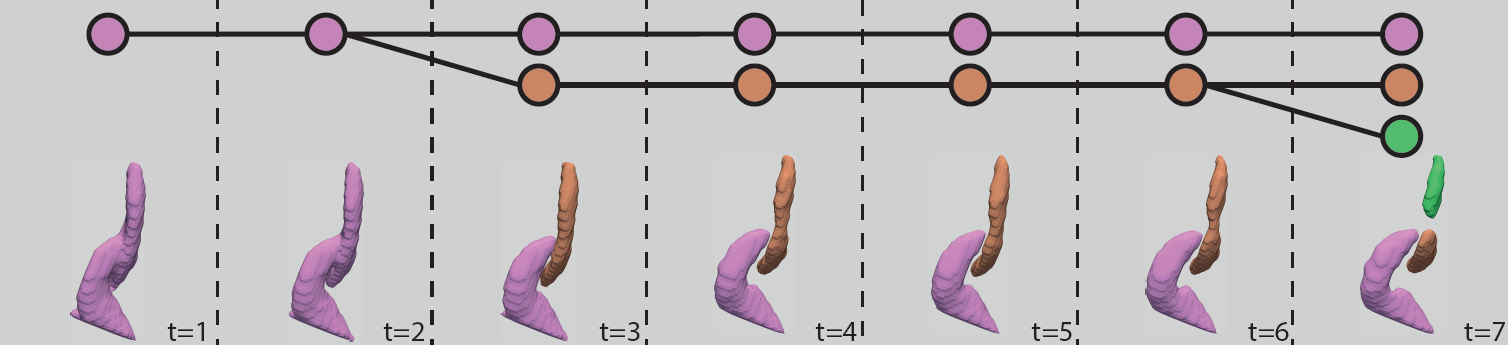

TENETvr: Comprehensible Temporal Teleportation in Time-Varying Virtual Environments

The iterative design process of virtual environments commonly generates a history of revisions that each represent the state of the scene at a different point in time. Browsing through these discrete time points by common temporal navigation interfaces like time sliders, however, can be inaccurate and lead to an uncomfortably high number of visual changes in a short time. In this paper, we therefore present a novel technique called TENETvr (Temporal Exploration and Navigation in virtual Environments via Teleportation) that allows for efficient teleportation-based travel to time points in which a particular object of interest changed. Unlike previous systems, we suggest that changes affecting other objects in the same time span should also be mediated before the teleport to improve predictability. We therefore propose visualizations for nine different types of additions, property changes, and deletions. In a formal user study with 20 participants, we confirmed that this addition leads to significantly more efficient change detection, lower task loads, and higher usability ratings, therefore reducing temporal disorientation.

@INPROCEEDINGS{10316438,

author={Rupp, Daniel and Kuhlen, Torsten and Weissker, Tim},

booktitle={2023 IEEE International Symposium on Mixed and Augmented Reality (ISMAR)},

title={{TENETvr: Comprehensible Temporal Teleportation in Time-Varying Virtual Environments}},

year={2023},

volume={},

number={},

pages={922-929},

doi={10.1109/ISMAR59233.2023.00108}}

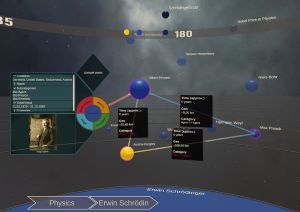

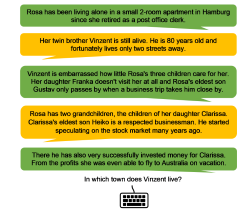

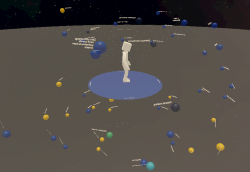

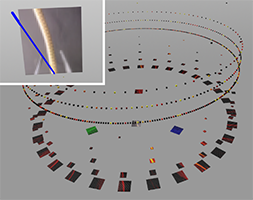

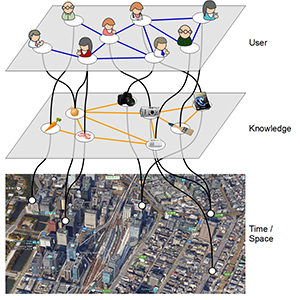

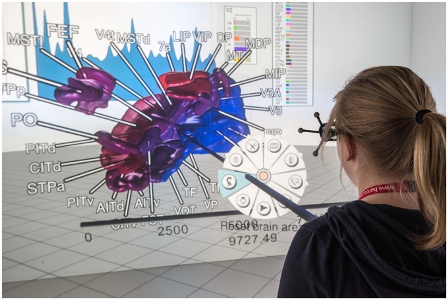

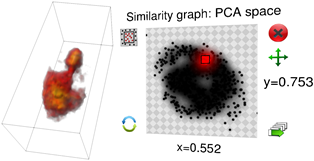

Who Did What When? Discovering Complex Historical Interrelations in Immersive Virtual Reality

Traditional digital tools for exploring historical data mostly rely on conventional 2D visualizations, which often cannot reveal all relevant interrelationships between historical fragments (e.g., persons or events). In this paper, we present a novel interactive exploration tool for historical data in VR, which represents fragments as spheres in a 3D environment and arranges them around the user based on their temporal, geo, categorical and semantic similarity. Quantitative and qualitative results from a user study with 29 participants revealed that most participants considered the virtual space and the abstract fragment representation well-suited to explore historical data and to discover complex interrelationships. These results were particularly underlined by high usability scores in terms of attractiveness, stimulation, and novelty, while researching historical facts with our system did not impose unexpectedly high task loads. Additionally, the insights from our post-study interviews provided valuable suggestions for future developments to further expand the possibilities of our system.

@INPROCEEDINGS{10316480,

author={Derksen, Melanie and Becker, Julia and Elahi, Mohammad Fazleh and Maier, Angelika and Maile, Marius and Pätzold, Ingo and Penningroth, Jonas and Reglin, Bettina and Rothgänger, Markus and Cimiano, Philipp and Schubert, Erich and Schwandt, Silke and Kuhlen, Torsten and Botsch, Mario and Weissker, Tim},

booktitle={2023 IEEE International Symposium on Mixed and Augmented Reality (ISMAR)},

title={{Who Did What When? Discovering Complex Historical Interrelations in Immersive Virtual Reality}},

year={2023},

volume={},

number={},

pages={129-137},

doi={10.1109/ISMAR59233.2023.00027}}

Who's next? Integrating Non-Verbal Turn-Taking Cues for Embodied Conversational Agents

Taking turns in a conversation is a delicate interplay of various signals, which we as humans can easily decipher. Embodied conversational agents (ECAs) communicating with humans should leverage this ability for smooth and enjoyable conversations. Extensive research has analyzed human turn-taking cues, and attempts have been made to predict turn-taking based on observed cues. These cues vary from prosodic, semantic, and syntactic modulation over adapted gesture and gaze behavior to actively used respiration. However, when generating such behavior for social robots or ECAs, often only single modalities were considered, e.g., gazing. We strive to design a comprehensive system that produces cues for all non-verbal modalities: gestures, gaze, and breathing. The system provides valuable cues without requiring speech content adaptation. We evaluated our system in a VR-based user study with N = 32 participants executing two subsequent tasks. First, we asked them to listen to two ECAs taking turns in several conversations. Second, participants engaged in taking turns with one of the ECAs directly. We examined the system’s usability and the perceived social presence of the ECAs' turn-taking behavior, both with respect to each individual non-verbal modality and their interplay. While we found effects of gesture manipulation in interactions with the ECAs, no effects on social presence were found.

This work is licensed under a Creative Commons Attribution International 4.0 License

@InProceedings{Ehret2023,

author = {Jonathan Ehret and Andrea Bönsch and Patrick Nossol and Cosima A. Ermert and Chinthusa Mohanathasan and Sabine J. Schlittmeier and Janina Fels and Torsten W. Kuhlen},

booktitle = {ACM International Conference on Intelligent Virtual Agents (IVA ’23)},

title = {Who's next? Integrating Non-Verbal Turn-Taking Cues for Embodied Conversational Agents},

year = {2023},

organization = {ACM},

pages = {8},

doi = {10.1145/3570945.3607312},

}

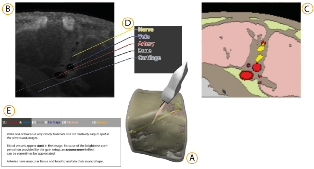

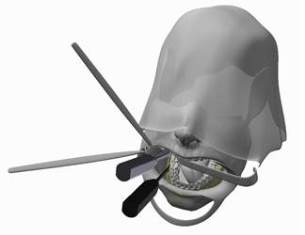

Effect of Head-Mounted Displays on Students’ Acquisition of Surgical Suturing Techniques Compared to an E-Learning and Tutor-Led Course: A Randomized Controlled Trial

Background: Although surgical suturing is one of the most important basic skills, many medical school graduates do not acquire sufficient knowledge of it due to its lack of integration into the curriculum or a shortage of tutors. E-learning approaches attempt to address this issue but still rely on the involvement of tutors. Furthermore, the learning experience and visual-spatial ability appear to play a critical role in surgical skill acquisition. Virtual reality head-mounted displays (HMDs) could address this, but the benefits of immersive and stereoscopic learning of surgical suturing techniques are still unclear.

Material and Methods: In this multi-arm randomized controlled trial, 150 novices participated. Three teaching modalities were compared: an e-learning course (monoscopic), an HMD-based course (stereoscopic, immersive), both self-directed, and a tutor-led course with feedback. Suturing performance was recorded by video camera both before and after course participation (>26 hours of video material) and assessed in a blinded fashion using the OSATS Global Rating Score (GRS). Furthermore, the optical flow of the videos was determined using an algorithm. The number of sutures performed was counted, visual-spatial ability was measured with the mental rotation test (MRT), and courses were assessed with questionnaires.

Results: Students' self-assessment in the HMD-based course was comparable to that of the tutor-led course and significantly better than in the e-learning course (P=0.003). Course suitability was rated best for the tutor-led course (x=4.8), followed by the HMD-based (x=3.6) and e-learning (x=2.5) courses. The median GRS between courses was comparable (P=0.15) at 12.4 (95% CI 10.0-12.7) for the e-learning course, 14.1 (95% CI 13.0-15.0) for the HMD-based course, and 12.7 (95% CI 10.3-14.2) for the tutor-led course. However, the GRS was significantly correlated with the number of sutures performed during the training session (P=0.002), but not with visual-spatial ability (P=0.626). Optical flow (R²=0.15, P<0.001) and the number of sutures performed (R²=0.73, P<0.001) can be used as additional measures to GRS.

Conclusion: The use of HMDs with stereoscopic and immersive video provides advantages in the learning experience and should be preferred over a traditional web application for e-learning. Contrary to expectations, feedback is not necessary for novices to achieve a sufficient level in suturing; only the number of surgical sutures performed during training is a good determinant of competence improvement. Nevertheless, feedback still enhances the learning experience. Therefore, automated assessment as an alternative feedback approach could further improve self-directed learning modalities. As a next step, the data from this study could be used to develop such automated AI-based assessments.

@Article{Peters2023,

author = {Philipp Peters and Martin Lemos and Andrea Bönsch and Mark Ooms and Max Ulbrich and Ashkan Rashad and Felix Krause and Myriam Lipprandt and Torsten Wolfgang Kuhlen and Rainer Röhrig and Frank Hölzle and Behrus Puladi},

journal = {International Journal of Surgery},

title = {Effect of head-mounted displays on students' acquisition of surgical suturing techniques compared to an e-learning and tutor-led course: A randomized controlled trial},

year = {2023},

month = {may},

volume = {Publish Ahead of Print},

creationdate = {2023-05-12T11:00:37},

doi = {10.1097/js9.0000000000000464},

modificationdate = {2023-05-12T11:00:37},

publisher = {Ovid Technologies (Wolters Kluwer Health)},

}

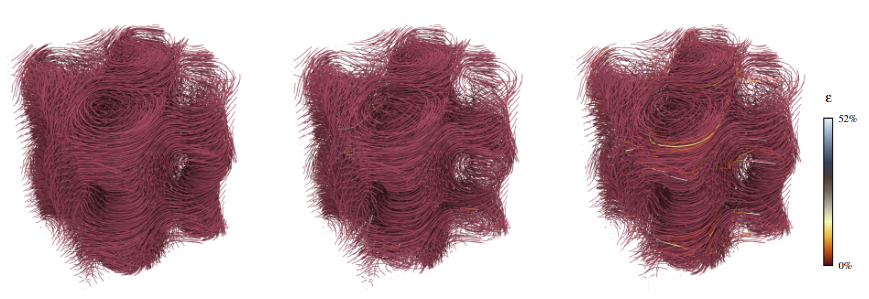

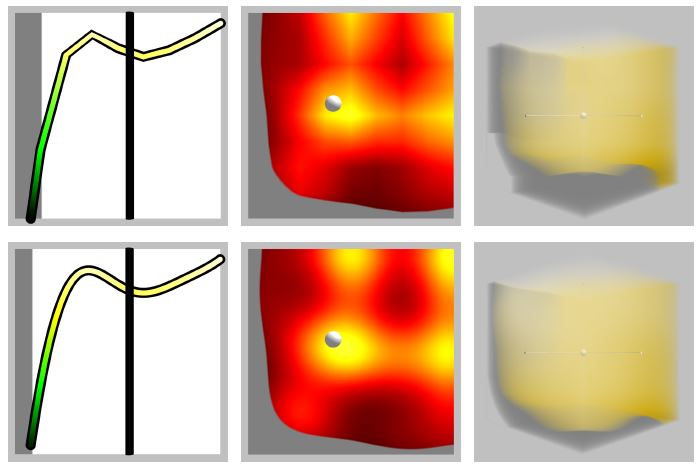

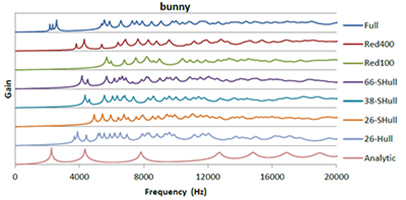

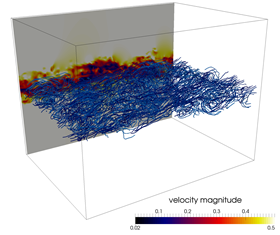

Leveraging BC6H Texture Compression and Filtering for Efficient Vector Field Visualization

The steady advance of compute hardware is accompanied by an ever-growing amount of data to be processed for visualization. Limited memory bandwidth poses a significant bottleneck to the runtime performance of visualization algorithms, while limited video memory requires complex out-of-core loading techniques for rendering large datasets. Data compression methods aim to overcome these limitations, potentially at the cost of information loss. This work presents an approach to the compression of large data for flow visualization using the BC6H texture compression format natively supported, and therefore effortlessly leverageable, on modern GPUs. We assess the performance and accuracy of BC6H for compression of steady and unsteady vector fields and investigate its applicability to particle advection. The results indicate an improvement in memory utilization as well as runtime performance, at a cost of moderate loss in precision.

@inproceedings{10.2312:vmv.20231238,

booktitle = {Vision, Modeling, and Visualization},

editor = {Guthe, Michael and Grosch, Thorsten},

title = {{Leveraging BC6H Texture Compression and Filtering for Efficient Vector Field Visualization}},

author = {Oehrl, Simon and Milke, Jan Frieder and Koenen, Jens and Kuhlen, Torsten W. and Gerrits, Tim},

year = {2023},

publisher = {The Eurographics Association},

ISBN = {978-3-03868-232-5},

DOI = {10.2312/vmv.20231238}

}

Voice Quality and its Effects on University Students' Listening Effort in a Virtual Seminar Room

A teacher’s poor voice quality may increase listening effort in pupils, but it is unclear whether this effect persists in adult listeners. Thus, the goal of this study is to examine the impact of vocal hoarseness on university students' listening effort in a virtual seminar room. An audio-visual immersive virtual reality environment is utilized to simulate a typical seminar room with common background sounds and fellow students represented as wooden mannequins. Participants wear a head-mounted display and are equipped with two controllers to engage in a dual-task paradigm. The primary task is to listen to a virtual professor reading short texts and retain relevant content information to be recalled later. The texts are presented either in a normal or an imitated hoarse voice. In parallel, participants perform a secondary task which is responding to tactile vibration patterns via the controllers. It is hypothesized that listening to the hoarse voice induces listening effort, resulting in more cognitive resources needed for primary task performance while secondary task performance is hindered. Results are presented and discussed in light of students’ cognitive performance and listening challenges in higher education learning environments.

@INPROCEEDINGS{Schiller:977871,

author = {Schiller, Isabel Sarah and Aspöck, Lukas and Breuer,

Carolin and Ehret, Jonathan and Bönsch, Andrea and Fels,

Janina and Kuhlen, Torsten and Schlittmeier, Sabine Janina},

title = {{V}oice Quality and its Effects on University

Students' Listening Effort in a Virtual Seminar Room},

year = {2023},

month = {Dec},

date = {2023-12-04},

organization = {Acoustics 2023, Sydney (Australia), 4

Dec 2023 - 8 Dec 2023},

doi = {10.1121/10.0022982}

}

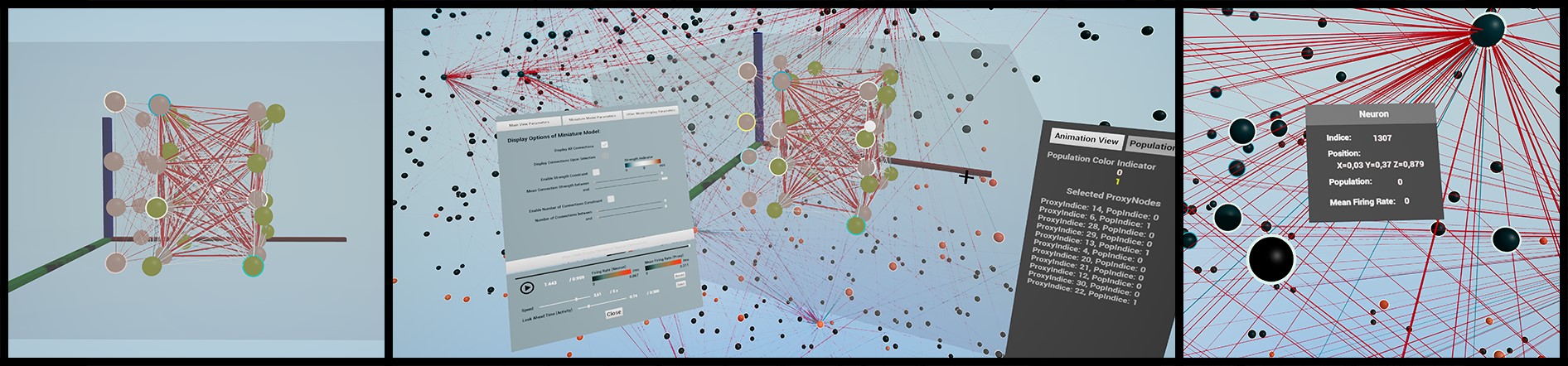

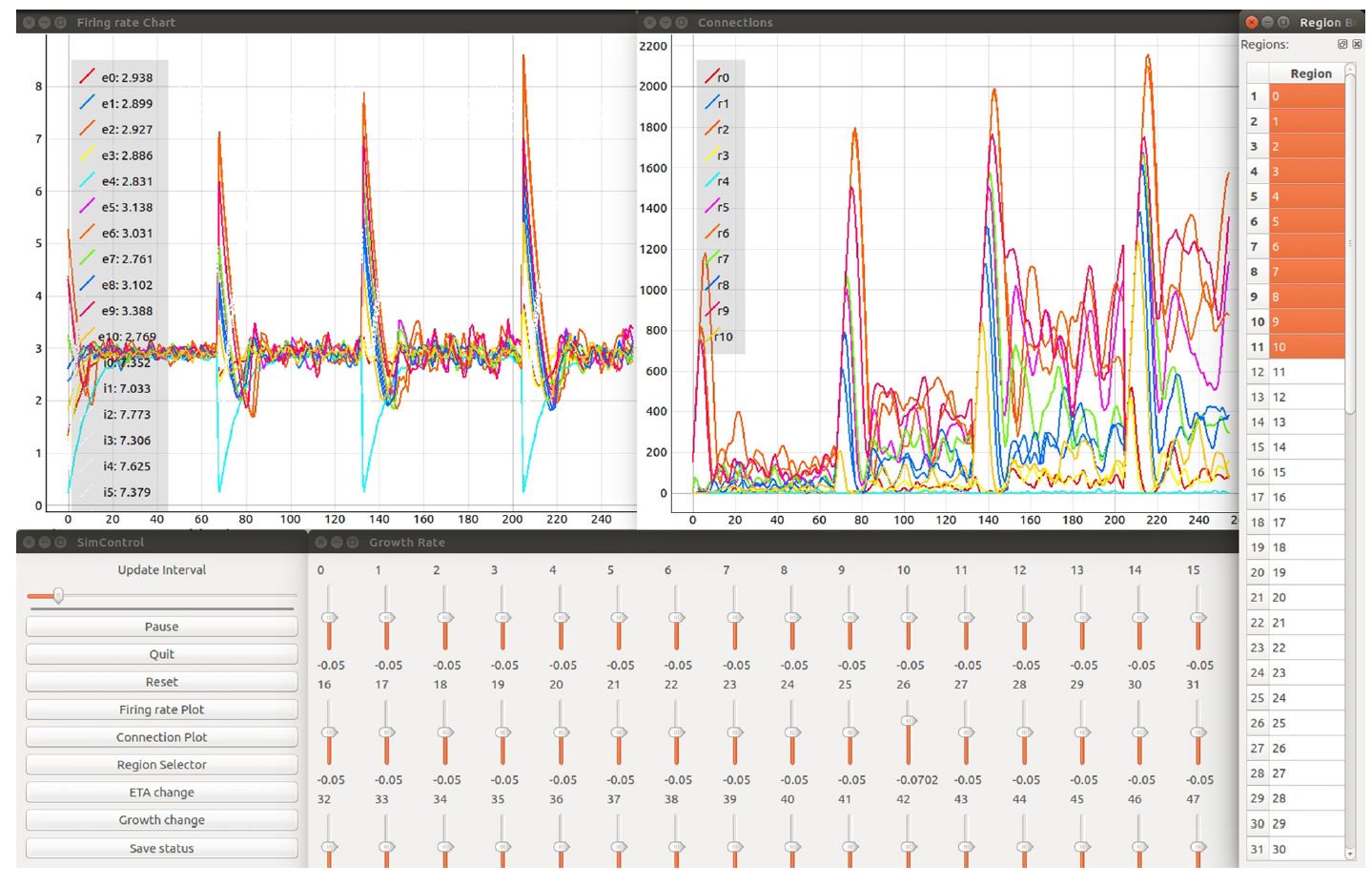

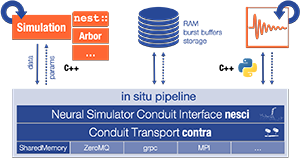

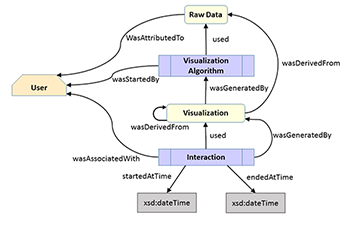

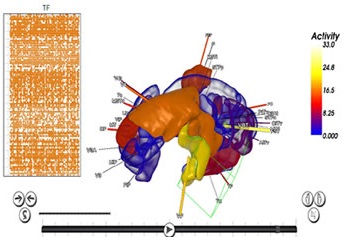

A Case Study on Providing Accessibility-Focused In-Transit Architectures for Neural Network Simulation and Analysis

Due to the ever-increasing availability of high-performance computing infrastructure, developers can simulate increasingly complex models. However, the increased complexity comes with new challenges regarding data processing and visualization due to the sheer size of simulations. Exploring simulation results needs to be handled efficiently via in-situ/in-transit analysis during run-time. However, most existing in-transit solutions require sophisticated prior knowledge and significant alteration to existing simulation and visualization code, which produces a high entry barrier for many projects. In this work, we report how Insite, a lightweight in-transit pipeline, provided in-transit visualization and computation capability to various research applications in the neuronal network simulation domain. We describe the development process, including feedback from developers and domain experts, and discuss implications.

@inproceedings{kruger2023case,

title={A Case Study on Providing Accessibility-Focused In-Transit Architectures for Neural Network Simulation and Analysis},

author={Kr{\"u}ger, Marcel and Oehrl, Simon and Kuhlen, Torsten Wolfgang and Gerrits, Tim},

booktitle={International Conference on High Performance Computing},

pages={277--287},

year={2023},

organization={Springer}

}

Towards Plausible Cognitive Research in Virtual Environments: The Effect of Audiovisual Cues on Short-Term Memory in Two-Talker Conversations

When three or more people are involved in a conversation, often one conversational partner listens to what the others are saying and has to remember the conversational content. The setups in cognitive-psychological experiments often differ substantially from everyday listening situations by neglecting such audiovisual cues. The presence of speech-related audiovisual cues, such as the spatial position and the appearance or non-verbal behavior of the conversing talkers, may influence the listener's memory and comprehension of conversational content. In our project, we provide first insights into the contribution of acoustic and visual cues to short-term memory and (social) presence. Analyses have shown that the memory performance varies with increasingly more plausible audiovisual characteristics. Furthermore, we have conducted a series of experiments regarding the influence of the visual reproduction medium (virtual reality vs. traditional computer screens) and spatial or content audio-visual mismatch on auditory short-term memory performance. Adding virtual embodiments to the talkers allowed us to conduct experiments on the influence of the fidelity of co-verbal gestures and turn-taking signals. Thus, we are able to provide a more plausible paradigm for investigating memory for two-talker conversations within an interactive audiovisual virtual reality environment.

@InProceedings{Ehret2023Audictive,

author = {Jonathan Ehret and Cosima A. Ermert and Chinthusa

Mohanathasan and Janina Fels and Torsten W. Kuhlen and Sabine J. Schlittmeier},

booktitle = {Proceedings of the 1st AUDICTIVE Conference},

title = {Towards Plausible Cognitive Research in Virtual

Environments: The Effect of Audiovisual Cues on Short-Term Memory in

Two-Talker Conversations},

year = {2023},

pages = {68-72},

doi = {10.18154/RWTH-2023-08409},

}

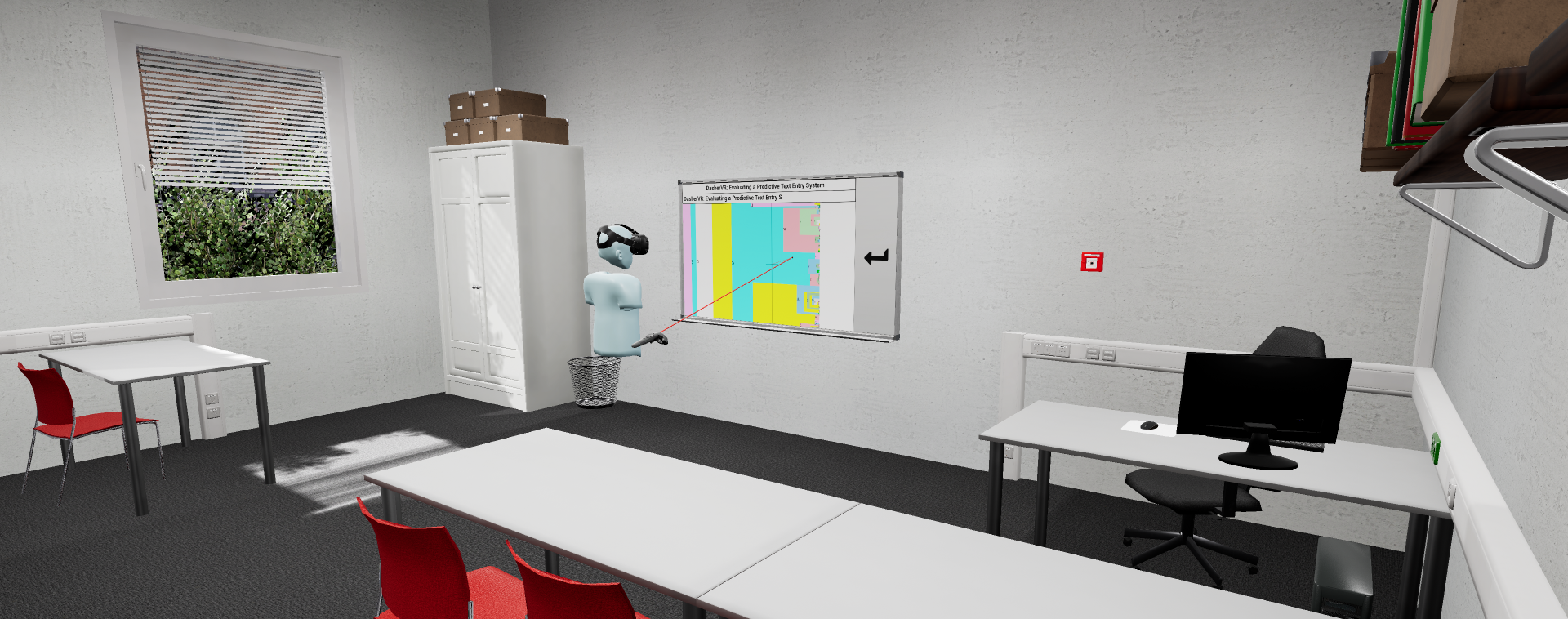

DasherVR: Evaluating a Predictive Text Entry System in Immersive Virtual Reality

Inputting text fluently in virtual reality is a topic still under active research, since many previously presented solutions have drawbacks in either speed, error rate, privacy or accessibility. To address these drawbacks, in this paper we adapted the predictive text entry system "Dasher" into an immersive virtual environment. Our evaluation with 20 participants shows that Dasher offers a good user experience with input speeds similar to other virtual text input techniques in the literature while maintaining low error rates. In combination with positive user feedback, we therefore believe that DasherVR is a promising basis for further research on accessible text input in immersive virtual reality.

@inproceedings{pape2023,

title = {{{DasherVR}}: {{Evaluating}} a {{Predictive Text Entry System}} in {{Immersive Virtual Reality}}},

booktitle = {Towards an {{Inclusive}} and {{Accessible Metaverse}} at {{CHI}}'23},

author = {Pape, Sebastian and Ackermann, Jan Jakub and Weissker, Tim and Kuhlen, Torsten W},

doi = {10.18154/RWTH-2023-05093},

year = {2023}

}

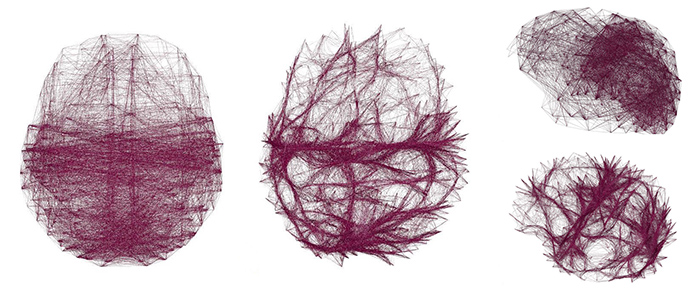

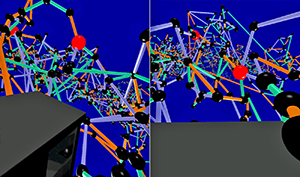

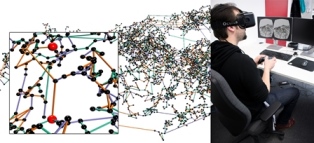

A Case Study on Providing Immersive Visualization for Neuronal Network Data Using COTS Soft- and Hardware

COTS VR hardware and modern game engines create the impression that bringing even complex data into VR has become easy. In this work, we investigate to what extent game engines can support the development of immersive visualization software with a case study. We discuss how the engine can support the development and where it falls short, e.g., failing to provide acceptable rendering performance for medium and large-sized data sets without using more sophisticated features.

@INPROCEEDINGS{10108843,

author={Krüger, Marcel and Li, Qin and Kuhlen, Torsten W. and Gerrits, Tim},

booktitle={2023 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW)},

title={A Case Study on Providing Immersive Visualization for Neuronal Network Data Using COTS Soft- and Hardware},

year={2023},

volume={},

number={},

pages={201-205},

doi={10.1109/VRW58643.2023.00050}}

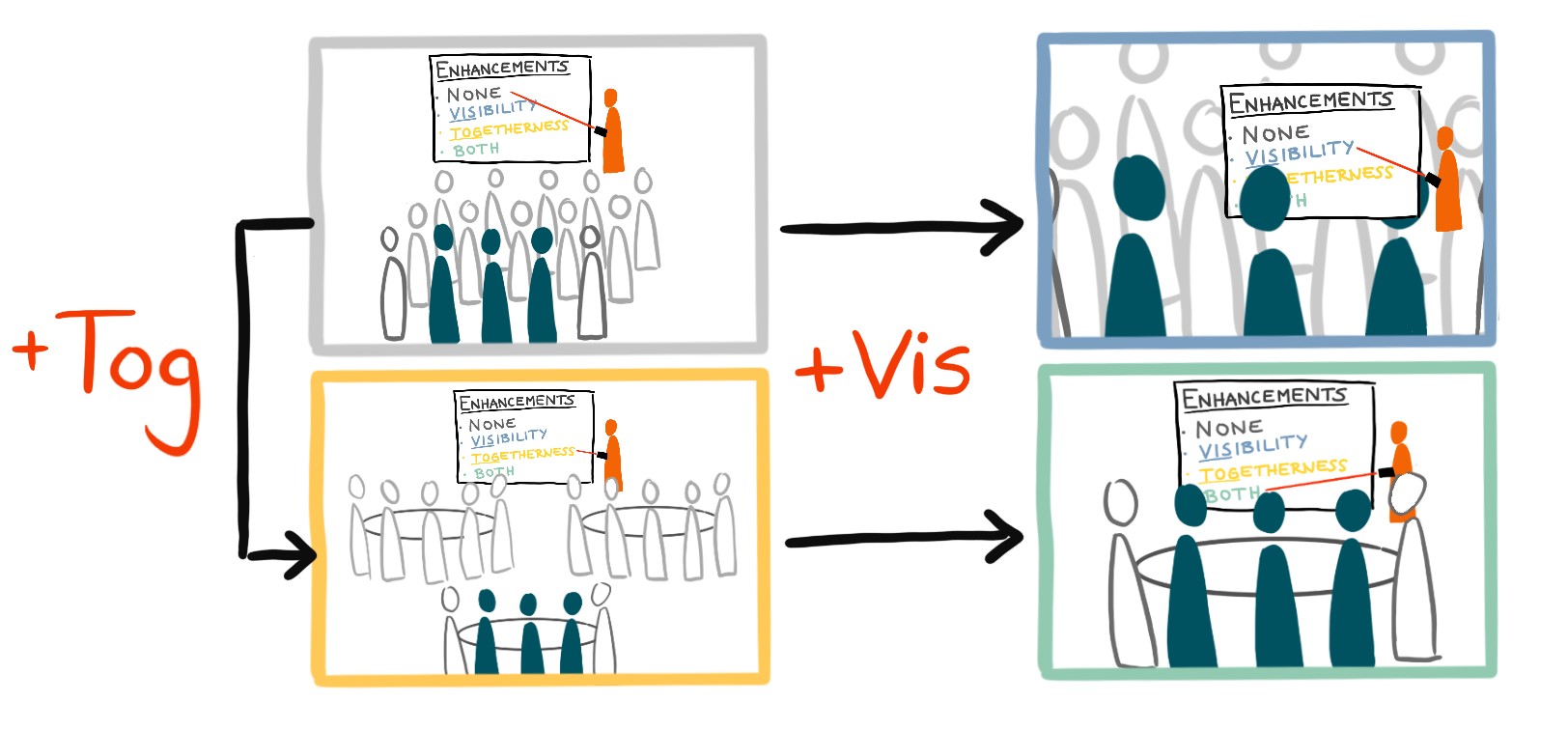

Enhanced Auditoriums for Attending Talks in Social Virtual Reality

Replicating traditional auditorium layouts for attending talks in social virtual reality often results in poor visibility of the presentation and a reduced feeling of being there together with others. Motivated by the use case of academic conferences, we therefore propose to display miniature representations of the stage close to the viewers for enhanced presentation visibility as well as group table arrangements for enhanced social co-watching. We conducted an initial user study with 12 participants in groups of three to evaluate the influence of these ideas on audience experience. Our results confirm the hypothesized positive effects of both enhancements and show that their combination was particularly appreciated by audience members. Our results therefore strongly encourage us to rethink conventional auditorium layouts in social virtual reality.

@inproceedings{10.1145/3544549.3585718,

author = {Weissker, Tim and Pieters, Leander and Kuhlen, Torsten},

title = {Enhanced Auditoriums for Attending Talks in Social Virtual Reality},

year = {2023},

isbn = {9781450394222},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3544549.3585718},

doi = {10.1145/3544549.3585718},

abstract = {Replicating traditional auditorium layouts for attending talks in social virtual reality often results in poor visibility of the presentation and a reduced feeling of being there together with others. Motivated by the use case of academic conferences, we therefore propose to display miniature representations of the stage close to the viewers for enhanced presentation visibility as well as group table arrangements for enhanced social co-watching. We conducted an initial user study with 12 participants in groups of three to evaluate the influence of these ideas on audience experience. Our results confirm the hypothesized positive effects of both enhancements and show that their combination was particularly appreciated by audience members. Our results therefore strongly encourage us to rethink conventional auditorium layouts in social virtual reality.},

booktitle = {Extended Abstracts of the 2023 CHI Conference on Human Factors in Computing Systems},

articleno = {101},

numpages = {7},

keywords = {Audience Experience, Head-Mounted Display, Multi-User, Social Interaction, Virtual Presentations, Virtual Reality},

location = {Hamburg, Germany},

series = {CHI EA '23}

}

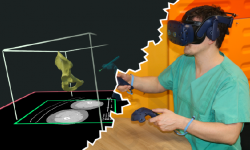

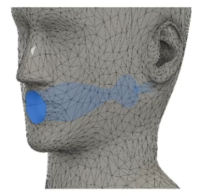

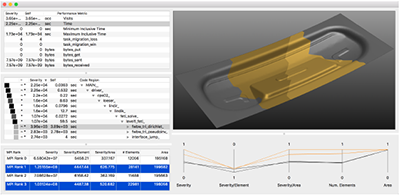

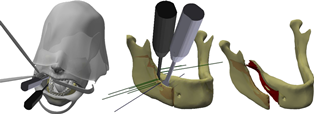

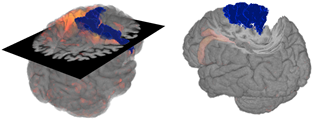

Advantages of a Training Course for Surgical Planning in Virtual Reality in Oral and Maxillofacial Surgery

Background: As an integral part of computer-assisted surgery, virtual surgical planning (VSP) leads to significantly better surgery results, such as for oral and maxillofacial reconstruction with microvascular grafts of the fibula or iliac crest. It is performed on a 2D computer desktop (DS) based on preoperative medical imaging. However, in this environment, VSP is associated with shortcomings, such as a time-consuming planning process and the requirement of a learning process. Therefore, a virtual reality (VR)-based VSP application has great potential to reduce or even overcome these shortcomings due to the benefits of visuospatial vision, bimanual interaction, and full immersion. However, the efficacy of such a VR environment has not yet been investigated.

Objective: Does VR offer advantages in learning process and working speed while providing similar good results compared to a traditional DS working environment?

Methods: During a training course, novices were taught how to use a software application in a DS environment (3D Slicer) and in a VR environment (Elucis) for the segmentation of fibulae and os coxae (n = 156), and they were asked to carry out the maneuvers as accurately and quickly as possible. The individual learning processes in both environments were compared using objective criteria (time and segmentation performance) and self-reported questionnaires. The models resulting from the segmentation were compared mathematically (Hausdorff distance and Dice coefficient) and evaluated by two experienced radiologists in a blinded manner (score).

Conclusions: The more rapid learning process and the ability to work faster in the VR environment could save time and reduce the VSP workload, providing certain advantages over the DS environment.

@article{Ulbrich2022,

title={Advantages of a Training Course for Surgical Planning in Virtual

Reality in Oral and Maxillofacial Surgery},

author={Ulbrich, M. and Van den Bosch, V. and Bönsch, A. and Gruber, L.J. and Ooms,

M. and Melchior, C. and Motmaen, I. and Wilpert, C. and Rashad, A. and Kuhlen, T.W. and

Hölzle, F. and Puladi, B.},

journal={JMIR Serious Games},

volume={ 28/11/2022:40541 (forthcoming/in press) },

year={2022},

publisher={JMIR Publications Inc., Toronto, Canada}

}

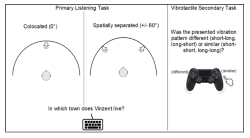

Poster: Memory and Listening Effort in Two-Talker Conversations: Does Face Visibility Help Us Remember?

Listening to and remembering conversational content is a highly demanding task that requires the interplay of auditory processes and several cognitive functions. In face-to-face conversations, it is quite impossible that two talkers’ audio signals originate from the same spatial position and that their faces are hidden from view. The availability of such audiovisual cues when listening potentially influences memory and comprehension of the heard content. In the present study, we investigated the effect of static visual faces of two talkers and cognitive functions on the listener’s short-term memory of conversations and listening effort. Participants performed a dual-task paradigm including a primary listening task, where a conversation between two spatially separated talkers (+/- 60°) with static faces was presented. In parallel, a vibrotactile task was administered, independently of both visual and auditory modalities. To investigate the possibility of person-specific factors influencing short-term memory, we assessed additional cognitive functions like working memory. We discuss our results in terms of the role that visual information and cognitive functions play in short-term memory of conversations.

@InProceedings{Mohanathasan2023ESCoP,

author = {Chinthusa Mohanathasan and Jonathan Ehret and Cosima A. Ermert and Janina Fels and Torsten Wolfgang Kuhlen and Sabine J. Schlittmeier},

booktitle = {23. Conference of the European Society for Cognitive Psychology, Porto, Portugal, ESCoP 2023},

title = {Memory and Listening Effort in Two-Talker Conversations: Does Face Visibility Help Us Remember?},

year = {2023},

}

Towards More Realistic Listening Research in Virtual Environments: The Effect of Spatial Separation of Two Talkers in Conversations on Memory and Listening Effort

Conversations between three or more people often include phases in which one conversational partner is the listener while the others are conversing. In face-to-face conversations, it is quite unlikely to have two talkers’ audio signals come from the same spatial location, yet monaural-diotic sound presentation is often realized in cognitive-psychological experiments. However, the availability of spatial cues probably influences the cognitive processing of heard conversational content. In the present study, we test this assumption by investigating the effect of spatial separation of conversing talkers on the listener’s short-term memory and listening effort. To this end, participants were administered a dual-task paradigm. In the primary task, participants listened to a conversation between two alternating talkers in a non-noisy setting and answered questions on the conversational content after listening. The talkers’ audio signals were presented at a distance of 2.5m from the listener either spatially separated (+/- 60°) or co-located (0°; within-subject). As a secondary task, participants worked in parallel to the listening task on a vibrotactile stimulation task, which is detached from auditory and visual modalities. The results are reported and discussed in particular regarding future listening experiments in virtual environments.

@InProceedings{Mohanathasan2023DAGA,

author = {Chinthusa Mohanathasan and Jonathan Ehret and Cosima A.

Ermert and Janina Fels and Torsten Wolfgang Kuhlen and Sabine J. Schlittmeier},

booktitle = {49. Jahrestagung für Akustik, Hamburg, Germany,

DAGA 2023},

title = {Towards More Realistic Listening Research in Virtual

Environments: The Effect of Spatial Separation of Two Talkers in

Conversations on Memory and Listening Effort},

year = {2023},

pages = {1425-1428},

doi = {10.18154/RWTH-2023-05116},

}

Towards Discovering Meaningful Historical Relationships in Virtual Reality

Traditional digital tools for exploring historical data mostly rely on conventional 2D visualizations, which often cannot reveal all relevant interrelationships between historical fragments. We are working on a novel interactive exploration tool for historical data in virtual reality, which arranges fragments in a 3D environment based on their temporal, spatial and categorical proximity to a reference fragment. In this poster, we report on an initial expert review of our approach, giving us valuable insights into the use cases and requirements that inform our further developments.

@INPROCEEDINGS{Derksen2023,

author={Derksen, Melanie and Weissker, Tim and Kuhlen, Torsten and Botsch, Mario},

booktitle={2023 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW)},

title={Towards Discovering Meaningful Historical Relationships in Virtual Reality},

year={2023},

volume={},

number={},

pages={697-698},

doi={10.1109/VRW58643.2023.00191}}

Gaining the High Ground: Teleportation to Mid-Air Targets in Immersive Virtual Environments

Most prior teleportation techniques in virtual reality are bound to target positions in the vicinity of selectable scene objects. In this paper, we present three adaptations of the classic teleportation metaphor that enable the user to travel to mid-air targets as well. Inspired by related work on the combination of teleports with virtual rotations, our three techniques differ in the extent to which elevation changes are integrated into the conventional target selection process. Elevation can be specified either simultaneously, as a connected second step, or separately from horizontal movements. A user study with 30 participants indicated a trade-off between the simultaneous method leading to the highest accuracy and the two-step method inducing the lowest task load as well as receiving the highest usability ratings. The separate method was least suitable on its own but could serve as a complement to one of the other approaches. Based on these findings and previous research, we define initial design guidelines for mid-air navigation techniques.

@ARTICLE{10049698,

author={Weissker, Tim and Bimberg, Pauline and Gokhale, Aalok Shashidhar and Kuhlen, Torsten and Froehlich, Bernd},

journal={IEEE Transactions on Visualization and Computer Graphics},

title={Gaining the High Ground: Teleportation to Mid-Air Targets in Immersive Virtual Environments},

year={2023},

volume={29},

number={5},

pages={2467-2477},

keywords={Teleportation;Navigation;Avatars;Visualization;Task analysis;Floors;Virtual environments;Virtual Reality;3D User Interfaces;3D Navigation;Head-Mounted Display;Teleportation;Flying;Mid-Air Navigation},

doi={10.1109/TVCG.2023.3247114}}

Poster: Enhancing Proxy Localization in World in Miniatures Focusing on Virtual Agents

Virtual agents (VAs) are increasingly utilized in large-scale architectural immersive virtual environments (LAIVEs) to enhance user engagement and presence. However, challenges persist in effectively localizing these VAs for user interactions and optimally orchestrating them for an interactive experience. To address these issues, we propose to extend world in miniatures (WIMs) through different localization and manipulation techniques as these 3D miniature scene replicas embedded within LAIVEs have already demonstrated effectiveness for wayfinding, navigation, and object manipulation. The contribution of our ongoing research is thus the enhancement of manipulation and localization capabilities within WIMs, focusing on the use case of VAs.

@InProceedings{Boensch2023c,

author = {Andrea Bönsch and Radu-Andrei Coanda and Torsten W. Kuhlen},

booktitle = {{V}irtuelle und {E}rweiterte {R}ealit\"at, 14. {W}orkshop der {GI}-{F}achgruppe {VR}/{AR}},

title = {Enhancing Proxy Localization in World in Miniatures Focusing on Virtual Agents},

year = {2023},

organization = {Gesellschaft für Informatik e.V.},

doi = {10.18420/vrar2023_3381}

}

Poster: Whom Do You Follow? Pedestrian Flows Constraining the User’s Navigation during Scene Exploration

In this work-in-progress, we strive to combine two wayfinding techniques supporting users in gaining scene knowledge, namely (i) the River Analogy, in which users are considered as boats automatically floating down predefined rivers, e.g., streets in an urban scene, and (ii) virtual pedestrian flows as social cues indirectly guiding users through the scene. In our combined approach, the pedestrian flows function as rivers. To navigate through the scene, users leash themselves to a pedestrian of choice, considered as a boat, and are dragged along the flow towards an area of interest. Upon arrival, users can detach themselves to freely explore the site without navigational constraints. We briefly outline our approach and discuss the results of an initial study focusing on various leashing visualizations.

@InProceedings{Boensch2023b,

author = {Andrea Bönsch and Lukas B. Zimmermann and Jonathan Ehret and Torsten W. Kuhlen},

booktitle = {ACM International Conference on Intelligent Virtual Agents (IVA ’23)},

title = {Whom Do You Follow? Pedestrian Flows Constraining the User’s Navigation during Scene Exploration},

year = {2023},

organization = {ACM},

pages = {3},

doi = {10.1145/3570945.3607350},

}

Poster: Where Do They Go? Overhearing Conversing Pedestrian Groups during Scene Exploration

On entering an unknown immersive virtual environment, a user’s first task is gaining knowledge about the respective scene, termed scene exploration. While many techniques for aided scene exploration exist, such as virtual guides, or maps, unaided wayfinding through pedestrians-as-cues is still in its infancy. We contribute to this research by indirectly guiding users through pedestrian groups conversing about their target location. A user who overhears the conversation without being a direct addressee can consciously decide whether to follow the group to reach an unseen point of interest. We outline our approach and give insights into the results of a first feasibility study in which we compared our new approach to non-talkative groups and groups conversing about random topics.

@InProceedings{Boensch2023a,

author = {Andrea Bönsch and Till Sittart and Jonathan Ehret and Torsten W. Kuhlen},

booktitle = {ACM International Conference on Intelligent Virtual Agents (IVA ’23)},

title = {Where Do They Go? Overhearing Conversing Pedestrian Groups during Scene Exploration},

year = {2023},

pages = {3},

publisher = {ACM},

doi = {10.1145/3570945.3607351},

}

AuViST - An Audio-Visual Speech and Text Database for the Heard-Text-Recall Paradigm

The Audio-Visual Speech and Text (AuViST) database provides additional material for the heard-text-recall (HTR) paradigm by Schlittmeier and colleagues. German audio recordings in a male and a female voice as well as matching face tracking data are provided for all texts.

Poster: Memory and Listening Effort in Conversations: The Role of Spatial Cues and Cognitive Functions

Conversations involving three or more people often include phases where one conversational partner listens to what the others are saying and has to remember the conversational content. It is possible that the presence of speech-related auditory information, such as different spatial positions of conversing talkers, influences the listener’s memory and comprehension of conversational content. However, in cognitive-psychological experiments, talkers’ audio signals are often presented diotically, i.e., identically to both ears as mono signals. This does not reflect face-to-face conversations, where two talkers’ audio signals never come from the same spatial location. Therefore, in the present study, we examine how the spatial separation of two conversing talkers affects the listener’s short-term memory of heard information and listening effort. To this end, participants completed a dual-task paradigm. In the primary task, participants listened to a conversation between a female and a male talker and then responded to content-related questions. The talkers’ audio signals were presented via headphones at a distance of 2.5 m from the listener, either spatially separated (±60°) or co-located (0°). In parallel to this listening task, participants performed a vibrotactile pattern recognition task as a secondary task, which is independent of both auditory and visual modalities. In addition, we measured participants’ working memory capacity, selective visual attention, and mental speed to control for listener-specific characteristics that may affect the listener’s memory performance. We discuss the extent to which spatial cues affect higher-level auditory cognition, specifically short-term memory of conversational content.

@InProceedings{Mohanathasan2023TeaP,

author = {Chinthusa Mohanathasan and Jonathan Ehret and Cosima A. Ermert and Janina Fels and Torsten Wolfgang Kuhlen and Sabine J. Schlittmeier},

booktitle = {Abstracts of the 65th TeaP: Tagung experimentell arbeitender Psycholog:innen, Conference of Experimental Psychologists},

title = {Memory and Listening Effort in Conversations: The Role of Spatial Cues and Cognitive Functions},

year = {2023},

pages = {252-252},

}

Audio-Visual Content Mismatches in the Serial Recall Paradigm

In many everyday scenarios, short-term memory is crucial for human interaction, e.g., when remembering a shopping list or following a conversation. A well-established paradigm to investigate short-term memory performance is serial recall. Here, participants are presented with a list of digits in random order and are asked to memorize the order in which the digits were presented. So far, research in cognitive psychology has mostly focused on the effect of auditory distractors on the recall of visually presented items. The influence of visual distractors on the recall of auditory items has mostly been ignored. For this talk, we designed an audio-visual serial recall task. Along with the auditory presentation of the to-be-remembered digits, participants saw the face of a virtual human, moving the lips according to the spoken words. However, the gender of the face did not always match the gender of the voice heard, hence introducing an audio-visual content mismatch. The results give further insights into the interplay of visual and auditory stimuli in serial recall experiments.

@InProceedings{Ermert2023DAGA,

author = {Cosima A. Ermert and Jonathan Ehret and Torsten Wolfgang Kuhlen and Chinthusa Mohanathasan and Sabine J. Schlittmeier and Janina Fels},

booktitle = {49. Jahrestagung für Akustik, Hamburg, Germany, DAGA 2023},

title = {Audio-Visual Content Mismatches in the Serial Recall Paradigm},

year = {2023},

pages = {1429-1430},

}

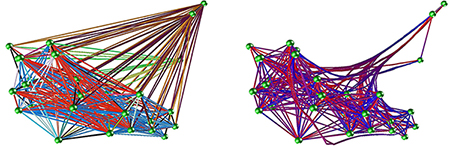

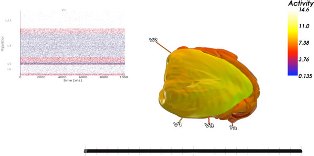

Poster: Insite Pipeline - A Pipeline Enabling In-Transit Processing for Arbor, NEST and TVB

Simulation of neuronal networks has steadily advanced and now allows for larger and more complex models. However, scaling simulations to such sizes comes with issues and challenges. Especially the amount of data produced, as well as the runtime of the simulation, can be limiting. Often, storing all data on disk is impossible, and users might have to wait for a long time until they can process the data. A standard solution in simulation science is to use in-transit approaches. In-transit implementations allow users to access data while the simulation is still running and do parallel processing outside the simulation. This allows for early insights into the results, early stopping of simulations that are not promising, or even steering of the simulations. Existing in-transit solutions, however, are often complex to integrate into the workflow as they rely on integration into simulators and often use data formats that are complex to handle. This is especially constraining in the context of multi-disciplinary research conducted in the HBP, as such an important feature should be accessible to all users.

To remedy this, we developed Insite, a pipeline that allows easy in-transit access to simulation data of multiscale simulations conducted with TVB, NEST, and Arbor.

@misc{kruger_marcel_2023_7849225,

author = {Krüger, Marcel and

Gerrits, Tim and

Kuhlen, Torsten and

Weyers, Benjamin},

title = {{Insite Pipeline - A Pipeline Enabling In-Transit

Processing for Arbor, NEST and TVB}},

month = mar,

year = 2023,

publisher = {Zenodo},

doi = {10.5281/zenodo.7849225},

url = {https://doi.org/10.5281/zenodo.7849225}

}

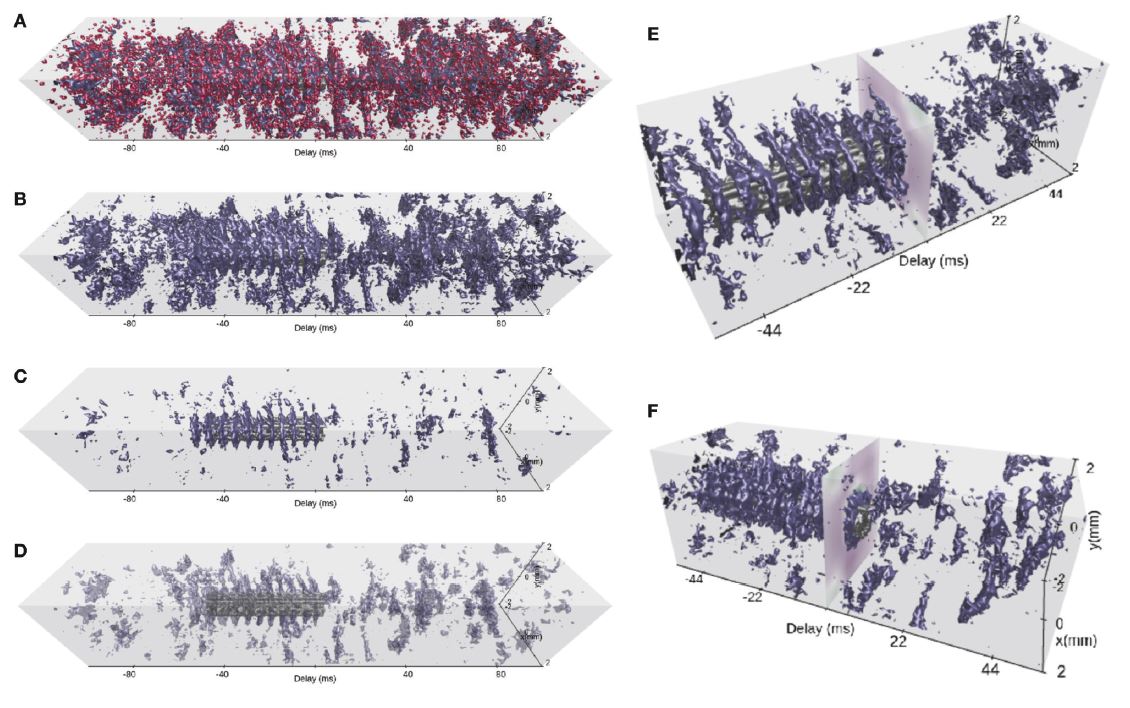

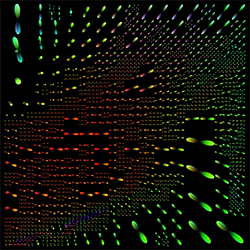

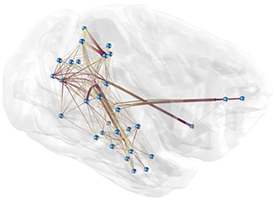

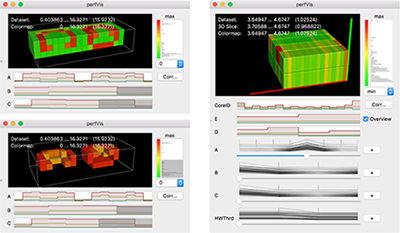

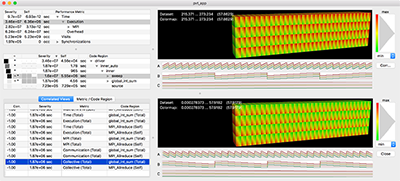

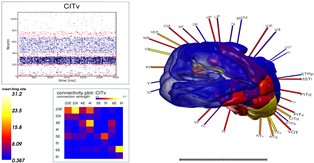

Insite: A Pipeline Enabling In-Transit Visualization and Analysis for Neuronal Network Simulations

Neuronal network simulators are central to computational neuroscience, enabling the study of the nervous system through in-silico experiments. Through the utilization of high-performance computing resources, these simulators are capable of simulating increasingly complex and large networks of neurons today. Yet, the increased capabilities introduce a challenge to the analysis and visualization of the simulation results. In this work, we propose a pipeline for in-transit analysis and visualization of data produced by neuronal network simulators. The pipeline is able to couple with simulators, enabling querying, filtering, and merging data from multiple simulation instances. Additionally, the architecture allows user-defined plugins that perform analysis tasks in the pipeline. The pipeline applies traditional REST API paradigms and utilizes data formats such as JSON to provide easy access to the generated data for visualization and further processing. We present and assess the proposed architecture in the context of neuronal network simulations generated by the NEST simulator.

@InProceedings{10.1007/978-3-031-23220-6_20,

author="Kr{\"u}ger, Marcel and Oehrl, Simon and Demiralp, Ali C. and Spreizer, Sebastian and Bruchertseifer, Jens and Kuhlen, Torsten W. and Gerrits, Tim and Weyers, Benjamin",

editor="Anzt, Hartwig and Bienz, Amanda and Luszczek, Piotr and Baboulin, Marc",

title="Insite: A Pipeline Enabling In-Transit Visualization and Analysis for Neuronal Network Simulations",

booktitle="High Performance Computing. ISC High Performance 2022 International Workshops",

year="2022",

publisher="Springer International Publishing",

address="Cham",

pages="295--305",

isbn="978-3-031-23220-6"

}

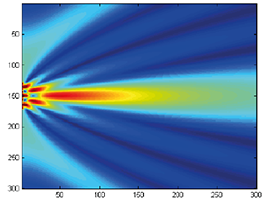

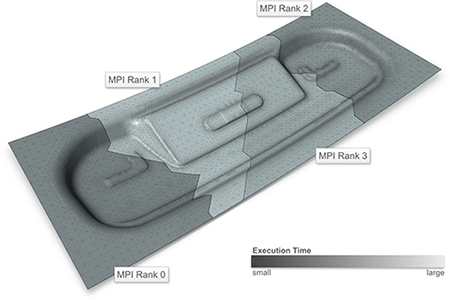

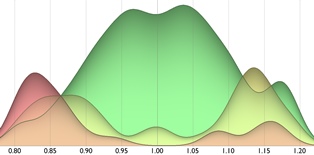

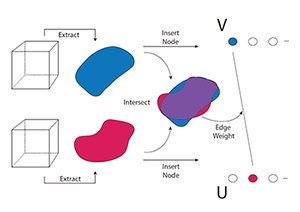

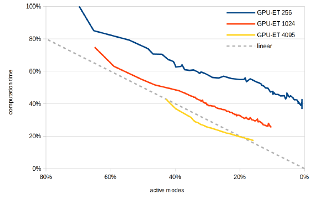

Performance Assessment of Diffusive Load Balancing for Distributed Particle Advection

Particle advection is the approach for the extraction of integral curves from vector fields. Efficient parallelization of particle advection is a challenging task due to the problem of load imbalance, in which processes are assigned unequal workloads, causing some of them to idle while the others are still computing. Various approaches to load balancing exist, yet they all involve trade-offs such as increased inter-process communication or the need for central control structures. In this work, we present two local load balancing methods for particle advection based on the family of diffusive load balancing. Each process has access to the blocks of its neighboring processes, which enables dynamic sharing of the particles based on a metric defined by the workload of the neighborhood. The approaches are assessed in terms of strong and weak scaling as well as load imbalance. We show that the methods reduce the total run-time of advection and are promising with regard to scaling as they operate locally on isolated process neighborhoods.

Astray: A Performance-Portable Geodesic Ray Tracer